
- Introduction

- Understanding n8n's Automation Framework

- The 80/20 Principle in Workflow Automation

- Essential n8n Workflow Conventions

- The 13 Core Nodes for n8n Mastery

- Practical Implementation Strategies

- Pros and Cons

- Conclusion

- Frequently Asked Questions

Master n8n Automation: 13 Essential Nodes for 80/20 Workflow Success

Master n8n workflow automation with the 80/20 principle by focusing on 13 core nodes for triggers, data processing, API integration, and AI.

Introduction

In today's competitive digital environment, workflow automation has become essential for businesses seeking efficiency and scalability. n8n is an open-source automation platform that offers more flexibility than many proprietary alternatives, notably the option to self-host and to drop into custom code. However, many users struggle with its extensive feature set. The 80/20 principle means focusing on the critical 20% of features that deliver 80% of results. Applied to n8n, it lets you become productive quickly and build sophisticated automations without getting overwhelmed by unnecessary complexity.

Understanding n8n's Automation Framework

The 80/20 Principle in Workflow Automation

The Pareto principle, commonly known as the 80/20 rule, is particularly relevant when learning complex platforms like n8n. Rather than attempting to master every available node and integration, focus on a small set of core components; this gets you productive much faster. This approach also avoids the common pitfall of "tutorial hell," where users consume endless educational content without producing working automations. The fundamental nodes discussed here represent that crucial 20%: mastering them lets you handle the majority of real-world automation scenarios.

Among workflow automation tools, n8n's open-source nature gives technical teams distinct advantages. The platform's modular architecture allows extensive customization, while self-hosting keeps data on your own infrastructure, which supports data sovereignty and security compliance. By concentrating on essential nodes first, you establish a solid foundation before advancing to more specialized functionality.

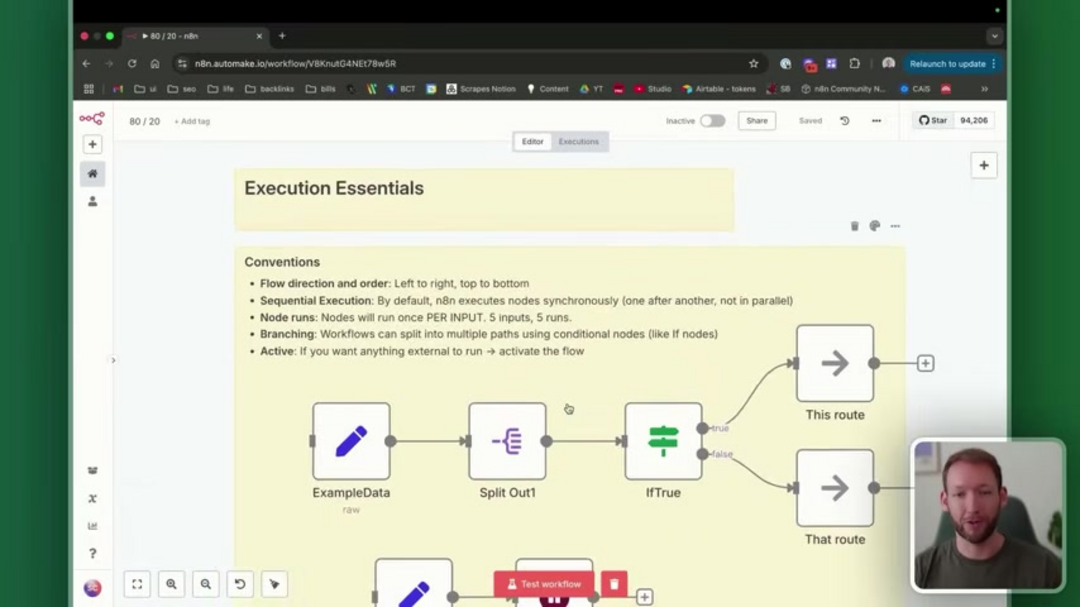

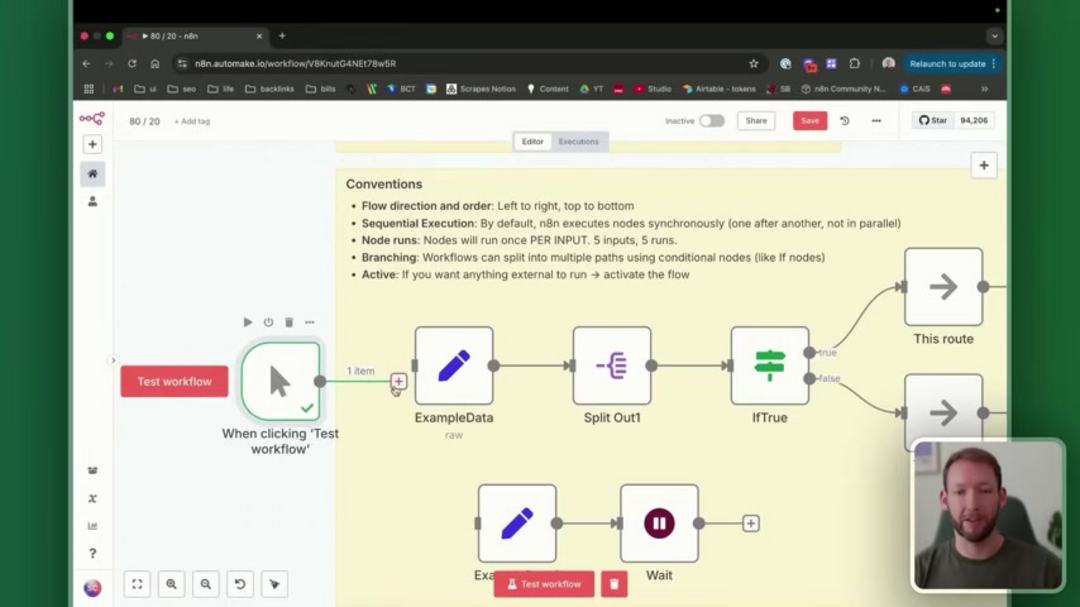

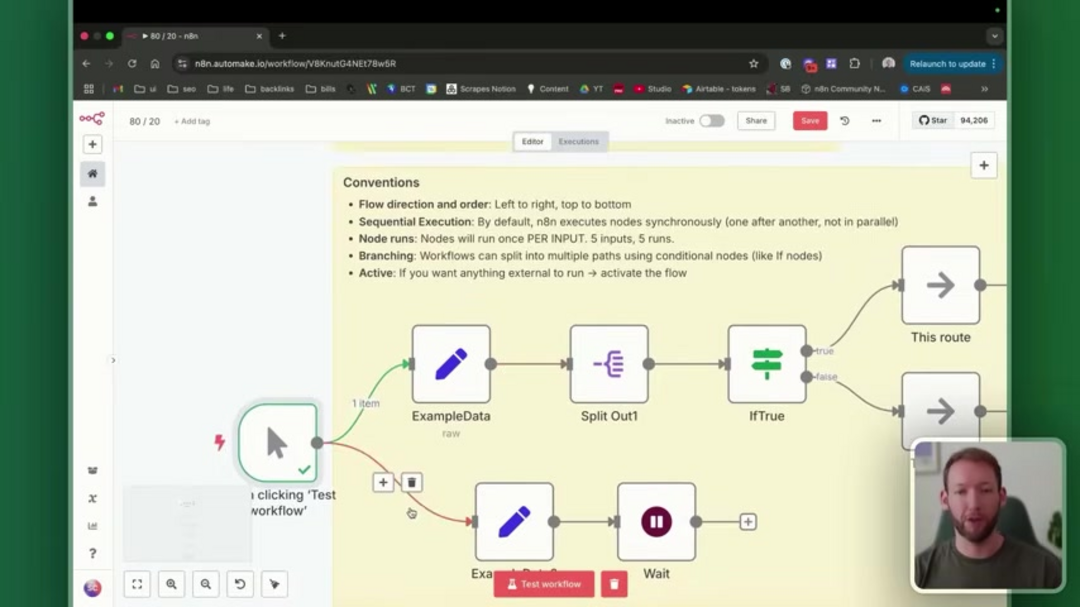

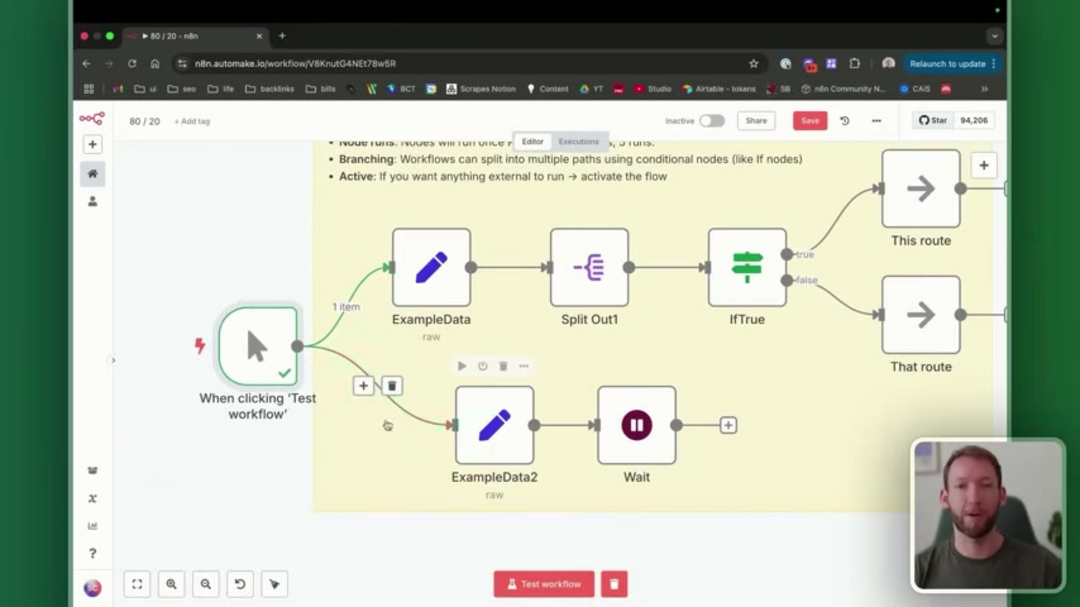

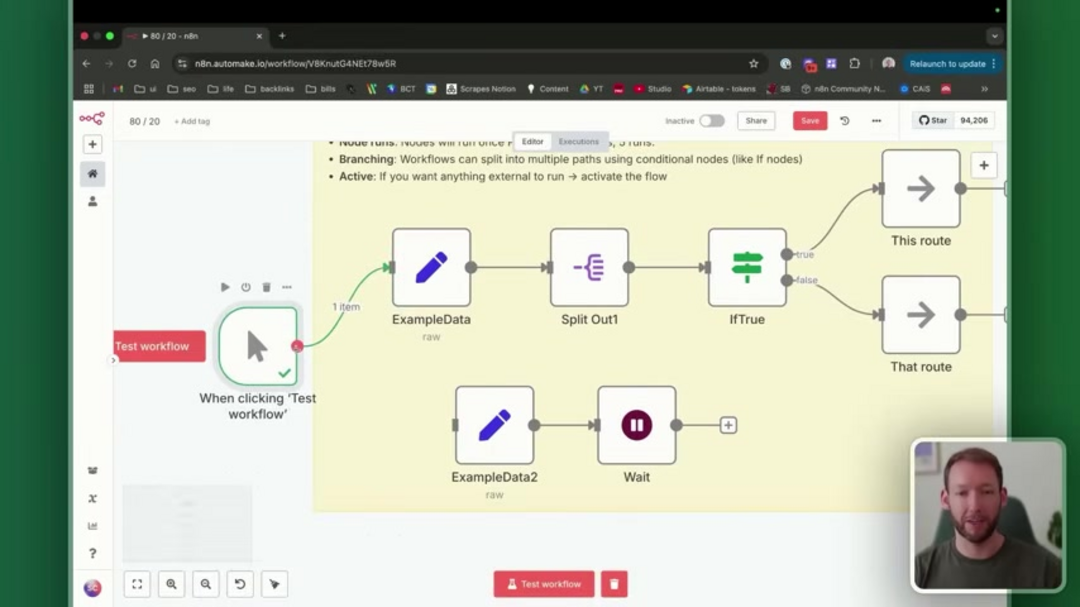

Essential n8n Workflow Conventions

Before diving into specific nodes, understanding n8n's execution model is crucial for designing reliable automations. The platform follows predictable patterns that, when mastered, prevent common debugging frustrations.

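Key execution principles:

- Branches run one at a time. In n8n's current (v1) execution order, nodes run one at a time and a workflow finishes each branch before starting the next. Sibling branches are ordered top to bottom by canvas position, or left to right when two sit at the same height.
- Data moves as items. Data travels between nodes as a list of items, each wrapping a JSON object, and most nodes apply their operation once per item.
- Branching routes items. Nodes such as IF send items along conditional paths.
- Activation controls triggers. A workflow's activation setting determines whether its triggers respond to live events, which prevents unintended runs.

Understanding these fundamentals separates successful automation architects from those who struggle with unpredictable results.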
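To make these conventions concrete, here is a minimal Python sketch built on a simplified, hypothetical graph model (`Node`, `execution_order`, and the canvas coordinates are illustrative, not n8n's actual engine). It shows two things:

- items represented as a list of `{"json": {...}}` objects and transformed once per item;
- branches visited top to bottom, each finished before the next begins.

It ignores Merge nodes, nodes with several inputs, and the legacy (v0) execution order.

```python
from dataclasses import dataclass, field

# Simplified, hypothetical workflow graph (not n8n's engine): each node has a
# canvas position (x grows rightward, y grows downward) and downstream nodes.
@dataclass
class Node:
    name: str
    x: int
    y: int
    children: list = field(default_factory=list)

def execution_order(start: Node) -> list:
    """Finish one branch before starting the next; sibling branches are
    ordered top to bottom (smaller y first), then left to right (smaller x)."""
    order = []

    def visit(node: Node) -> None:
        order.append(node.name)
        for child in sorted(node.children, key=lambda n: (n.y, n.x)):
            visit(child)

    visit(start)
    return order

# Items: each wraps a JSON object; the "node" below runs once per item.
items = [{"json": {"name": "Ada"}}, {"json": {"name": "Linus"}}]
greeted = [
    {"json": {**item["json"], "greeting": f"Hi {item['json']['name']}"}}
    for item in items
]

trigger = Node("Trigger", 0, 0)
low = Node("Low branch", 200, 100, [Node("Low end", 400, 100)])
high = Node("High branch", 200, -100, [Node("High end", 400, -100)])
trigger.children = [low, high]
print(execution_order(trigger))
# ['Trigger', 'High branch', 'High end', 'Low branch', 'Low end']
```

Even though `low` was connected first, the higher branch runs first and completes entirely before the lower one starts.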

The 13 Core Nodes for n8n Mastery

Trigger Nodes: Automation Starting Points

Triggers initiate your workflows based on specific events or conditions. Three primary trigger types cover most automation needs:

- Manual Trigger: Perfect for testing and development, this node allows immediate workflow execution without external events. It's invaluable for debugging complex data transformations before connecting to live systems.

- Schedule Trigger: Automate time-based processes such as daily reporting, weekly backups, or monthly analytics. Besides simple intervals, this node accepts custom cron expressions for precise schedules.

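- App Event Trigger: App-specific trigger nodes (for Slack, Google Sheets, CRM platforms, and many other services) start workflows on real-time events such as new messages, new form submissions, or database changes. For services without a dedicated trigger, the generic Webhook node starts a workflow whenever it receives an HTTP request.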
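The following Python sketch shows how the five fields of a standard cron expression are read against a timestamp. It is a simplified, hypothetical matcher for illustration, not n8n's scheduler. Check the Schedule Trigger documentation for the exact syntax the node accepts, for example whether a seconds field is allowed.

```python
from datetime import datetime

# Minimal 5-field cron matcher: minute hour day-of-month month day-of-week.
# Supports "*", "*/n", single numbers and comma lists only; ranges (1-5),
# names (MON, JAN) and a seconds field are deliberately left out.
FIELD_MINIMUMS = (0, 0, 1, 1, 0)  # lowest legal value of each field

def field_matches(expr: str, value: int, minimum: int) -> bool:
    for part in expr.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            step = int(part[2:])
            if (value - minimum) % step == 0:  # e.g. day */2 -> 1, 3, 5, ...
                return True
        elif int(part) == value:
            return True
    return False

def cron_matches(expression: str, moment: datetime) -> bool:
    fields = expression.split()
    if len(fields) != 5:
        raise ValueError("expected 5 cron fields")
    cron_weekday = (moment.weekday() + 1) % 7  # cron: Sunday=0; Python: Monday=0
    values = (moment.minute, moment.hour, moment.day, moment.month, cron_weekday)
    # Simplification: every field must match. Standard cron instead fires when
    # EITHER day field matches if both day-of-month and day-of-week are restricted.
    return all(
        field_matches(f, v, m) for f, v, m in zip(fields, values, FIELD_MINIMUMS)
    )

weekly_report = "0 9 * * 1"  # 09:00 every Monday
print(cron_matches(weekly_report, datetime(2024, 1, 1, 9, 0)))  # True (a Monday)
print(cron_matches(weekly_report, datetime(2024, 1, 2, 9, 0)))  # False (a Tuesday)
```

Reading an expression field by field like this makes it easier to sanity-check a schedule before pasting it into the Schedule Trigger.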

Data Processing: The Automation Engine

Once triggered, data manipulation becomes central to effective automation. n8n provides robust tools for transforming information between systems.

Essential data processing nodes include:

- Split Out Node: Turns an array inside a single item into separate items, so downstream nodes process each element individually. This is essential for handling batch data from APIs or databases.

- Aggregate Node: Combines many items (or selected fields from them) into a single item, ready for bulk operations or consolidated reporting.

- Set/Edit Fields Node: The Swiss Army knife of data manipulation – create, modify, or remove fields using expressions, calculations, or direct mappings.

- IF Node: Implements conditional logic to route workflows along different paths based on data values or external conditions.

- Code Node: For scenarios the built-in nodes cannot handle, this node runs custom JavaScript or Python, giving you nearly unlimited transformation capabilities.
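To illustrate what four of these nodes do to the data, here is a plain-Python sketch operating on n8n-style items (a list of `{"json": {...}}`). The function names are hypothetical stand-ins: in n8n you configure these nodes in the editor rather than calling functions. The sketch also assumes the split array contains objects.

```python
# Hypothetical plain-Python stand-ins for four data-processing nodes.

def split_out(items, field):
    """Split Out: one output item per element of the array in `field`
    (this sketch assumes the elements are objects)."""
    return [{"json": element} for item in items for element in item["json"][field]]

def edit_fields(items, **computed):
    """Edit Fields (Set): add or overwrite fields computed from each item."""
    return [
        {"json": {**item["json"], **{name: fn(item["json"]) for name, fn in computed.items()}}}
        for item in items
    ]

def if_node(items, condition):
    """IF: route each item to the true output or the false output."""
    true_out = [item for item in items if condition(item["json"])]
    false_out = [item for item in items if not condition(item["json"])]
    return true_out, false_out

def aggregate(items, field):
    """Aggregate: collect `field` from every item into a single item."""
    return [{"json": {field: [item["json"][field] for item in items]}}]

# One API response item holding a nested array of orders.
api_response = [{"json": {"orders": [
    {"id": 1, "total": 120},
    {"id": 2, "total": 40},
    {"id": 3, "total": 300},
]}}]

orders = split_out(api_response, "orders")  # 1 item becomes 3 items
orders = edit_fields(orders, is_large=lambda o: o["total"] >= 100)
large, small = if_node(orders, lambda o: o["is_large"])
print(aggregate(large, "id"))  # [{'json': {'id': [1, 3]}}]
```

The round trip is typical: split a batch apart, enrich and route each item, then aggregate the survivors back into one item for a bulk write or report.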

External Connectivity and API Integration

n8n's true power emerges when connecting disparate systems through standardized protocols. Key connectivity nodes include:

- HTTP Request Node: The cornerstone of external integration. It sends GET, POST, PUT, PATCH, DELETE, and other requests to any REST API, authenticating with stored credentials.

- Respond to Webhook Node: Used with a Webhook trigger, it returns a custom response (status code, headers, and body) to the service that called the webhook, completing the request/response loop for true bidirectional integration.

Because the HTTP Request node can call any HTTP API, it covers services that have no pre-built connector, as well as operations an existing connector does not expose. It supports authentication methods including OAuth2, API keys, and custom headers, which makes it suitable for enterprise integrations.
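For orientation, here is roughly what the HTTP Request node sends when configured for a JSON POST with header-based credentials, sketched with Python's standard `urllib.request`. Some values are placeholders:

- the endpoint URL and token value;
- the `X-API-Key` header name, since header names vary by API.

OAuth2 token acquisition, which n8n's credential system handles for you, is not shown, only the bearer header that results from it. The request is built but never sent.

```python
import json
import urllib.request

def build_request(url, payload, token=None, api_key=None):
    """Build (but do not send) a JSON POST with optional auth headers."""
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"  # OAuth2 / bearer-token style
    if api_key:
        headers["X-API-Key"] = api_key  # custom-header style; name is a placeholder
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )

req = build_request(
    "https://api.example.com/v1/contacts",  # placeholder endpoint
    {"email": "ada@example.com"},
    token="TOKEN",
)
print(req.get_method(), req.full_url)
print(req.get_header("Authorization"))  # Bearer TOKEN
# To actually send it: urllib.request.urlopen(req, timeout=10)
```

Note that `urllib` normalizes stored header names with `str.capitalize()`, so `X-API-Key` is retrieved as `X-api-key`. HTTP header names are case-insensitive, so servers treat both forms the same.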

Data Storage and Persistence Solutions

Many automations require temporary or permanent data storage between executions. n8n integrates seamlessly with popular storage platforms:

- Google Sheets Node: Ideal for lightweight data logging, simple reporting, or collaborative datasets that multiple team members need to access.

- Database Nodes: Connect directly to SQL databases, Airtable bases, or Notion databases for robust data persistence and complex query operations.

These storage solutions work particularly well when combined with data processing tools for cleaning and transforming information before persistence. Proper data management ensures your automations remain efficient and scalable as workflow complexity increases.
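A common pattern for persistence between executions is an upsert keyed on a stable ID, so that re-running a workflow updates rows instead of duplicating them. The sketch below uses Python's built-in `sqlite3` as a stand-in for whichever database node you use. The `contacts` table and its columns are hypothetical, and `ON CONFLICT ... DO UPDATE` requires SQLite 3.24 or newer.

```python
import sqlite3

def persist(conn: sqlite3.Connection, items: list) -> None:
    """Clean each item, then upsert it keyed on the normalized email."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contacts (email TEXT PRIMARY KEY, name TEXT)"
    )
    rows = [
        (item["json"]["email"].strip().lower(), item["json"]["name"].strip())
        for item in items
    ]
    conn.executemany(
        "INSERT INTO contacts (email, name) VALUES (?, ?) "
        "ON CONFLICT(email) DO UPDATE SET name = excluded.name",
        rows,
    )
    conn.commit()

conn = sqlite3.connect(":memory:")
persist(conn, [{"json": {"email": " Ada@Example.com ", "name": "Ada"}}])         # first run
persist(conn, [{"json": {"email": "ada@example.com", "name": "Ada Lovelace"}}])  # later run
print(conn.execute("SELECT email, name FROM contacts").fetchall())
# [('ada@example.com', 'Ada Lovelace')]
```

Normalizing the key before writing (trimming and lowercasing the email) is what lets the second run match the first row.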

AI Integration for Intelligent Automation

n8n's AI capabilities bring sophisticated decision-making to automated workflows without requiring extensive machine learning expertise:

- LLM Node (Basic LLM Chain): Integrate large language models for content generation, classification, sentiment analysis, or complex pattern recognition beyond traditional rule-based logic.

- AI Agent Node: For advanced scenarios requiring tool usage, memory persistence, or multi-step reasoning, this node provides the framework for autonomous AI-assisted workflows.

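n8n's AI nodes balance accessibility with power. The platform supports major hosted AI providers as well as locally run models, for example through its Ollama integration. Pairing self-hosted n8n with a local model keeps prompts and data on your own infrastructure, whereas calls to a hosted provider send that data to the provider.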
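Conceptually, an agent alternates between two steps until the model produces a final answer:

- asking a model what to do next;
- running the tool it picks and keeping the result in memory.

The toy Python loop below illustrates that cycle. `fake_model` is a scripted stand-in for an LLM and `lookup_order` is a hypothetical tool, so this is a conceptual sketch, not how n8n's AI Agent node is implemented internally.

```python
# Scripted stand-in for an LLM: first requests a tool, then answers from the
# tool result stored in memory. A real model would make these choices itself.
def fake_model(memory: list) -> dict:
    tool_results = [m["content"] for m in memory if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "lookup_order", "args": {"order_id": 42}}
    return {"answer": f"Order 42 is {tool_results[-1]['status']}."}

# Hypothetical tool registry; in n8n, tools are sub-nodes attached to the agent.
TOOLS = {
    "lookup_order": lambda order_id: {"id": order_id, "status": "shipped"},
}

def run_agent(question: str, model=fake_model, max_steps: int = 5) -> str:
    memory = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = model(memory)
        if "answer" in decision:
            return decision["answer"]  # the model has finished reasoning
        result = TOOLS[decision["tool"]](**decision["args"])
        memory.append({"role": "tool", "content": result})  # store the observation
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("Where is order 42?"))  # Order 42 is shipped.
```

The `max_steps` cap matters in practice: it bounds cost and stops a confused model from looping indefinitely.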

Practical Implementation Strategies

Optimizing Data Transformations

The Edit Fields node deserves special attention for its versatility in data manipulation. Beyond basic field operations, it excels at complex transformations like combining multiple data sources, applying conditional formatting, or implementing data validation rules. For instance, you can merge customer information from CRM and support systems into unified profiles, or normalize product data from multiple e-commerce platforms into standardized catalogs.
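The CRM-plus-support example can be sketched in Python as follows. This is the kind of logic you might place in a Code node, or assemble from a Merge node followed by Edit Fields. The record shapes and the field names `email`, `company`, and `status` are hypothetical. Matching on a normalized email address is one reasonable join key, not the only one.

```python
def normalize_email(value: str) -> str:
    return value.strip().lower()

def merge_profiles(crm_records: list, support_tickets: list) -> list:
    """Build one profile per email: company from the CRM, open-ticket count
    from the support system. Field names are hypothetical."""
    profiles = {}
    for record in crm_records:
        key = normalize_email(record["email"])
        profiles[key] = {"email": key, "company": record.get("company"), "open_tickets": 0}
    for ticket in support_tickets:
        key = normalize_email(ticket["email"])
        # Customers who only appear in the support system still get a profile.
        profile = profiles.setdefault(key, {"email": key, "company": None, "open_tickets": 0})
        if ticket.get("status") == "open":
            profile["open_tickets"] += 1
    return list(profiles.values())

crm = [{"email": "Ada@Example.com", "company": "Analytical Engines"}]
support = [
    {"email": "ada@example.com ", "status": "open"},
    {"email": "ada@example.com", "status": "closed"},
    {"email": "bob@example.com", "status": "open"},
]
merged = merge_profiles(crm, support)
print(merged)
```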

Mastering API Interactions

The HTTP Request node becomes significantly more powerful when combined with proper error handling and data parsing:

- Retry transient failures. n8n's per-node Retry On Fail setting handles simple cases.
- Cache frequently accessed data to stay within rate limits.
- Map API responses into the fields downstream nodes expect.

These practices become essential when working with cloud storage platforms or external SaaS applications that may be intermittently unavailable.
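Here is a minimal Python sketch of retry with exponential backoff and jitter. You might implement this logic in a Code node when you need finer control over which failures are retried and how long to wait. `TransientError`, the attempt count, and the delays are illustrative assumptions; which status codes count as transient depends on the API. The `sleep` parameter is injectable so the timing can be tested without waiting.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (e.g. HTTP 429 or 503)."""

def call_with_retry(func, max_tries=4, base_delay=0.5, sleep=time.sleep):
    for attempt in range(1, max_tries + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_tries:
                raise  # give up and surface the last error
            # Exponential backoff (0.5 s, 1 s, 2 s, ...) plus up to 100 ms of
            # random jitter so many clients do not retry in lockstep.
            sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1))

# Usage with a fake endpoint that fails twice, then succeeds.
calls = {"n": 0}

def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("503 Service Unavailable")
    return {"status": "ok"}

waits = []
result = call_with_retry(flaky, sleep=waits.append)  # record delays instead of sleeping
print(result)  # {'status': 'ok'}
```

Non-transient errors (for example, a 401 from bad credentials) propagate immediately, because retrying them only wastes quota.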

Efficient Data Storage Practices

Whether using Google Sheets or database integrations, consistent data structure management prevents common automation failures. Establish clear schema definitions, implement data validation at insertion points, and use batch operations for improved performance. For database management scenarios, consider indexing strategies and query optimization to maintain responsiveness as dataset sizes grow.
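The validation and batching advice can be sketched in Python like this. `SCHEMA`, the sample rows, and the batch size are hypothetical. Each yielded batch stands in for one bulk write, such as appending several rows to a sheet at once or running one multi-row insert.

```python
# Hypothetical schema: column name -> expected Python type.
SCHEMA = {"sku": str, "price": float, "quantity": int}

def validate(row: dict) -> list:
    """Return a list of problems; an empty list means the row is valid."""
    errors = []
    for column, expected in SCHEMA.items():
        if column not in row:
            errors.append(f"missing {column}")
        elif not isinstance(row[column], expected):
            errors.append(f"{column} should be {expected.__name__}")
    return errors

def batches(rows: list, size: int):
    """Yield consecutive slices of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]

rows = [
    {"sku": "A1", "price": 9.5, "quantity": 3},
    {"sku": "B2", "price": "free", "quantity": 1},
    {"sku": "C3", "price": 4.0, "quantity": 10},
    {"sku": "D4", "price": 1.25, "quantity": 7},
]
valid = [row for row in rows if not validate(row)]
rejected = [(row["sku"], validate(row)) for row in rows if validate(row)]

for batch in batches(valid, size=2):
    # Each batch stands in for one bulk write to the sheet or database.
    print("write batch:", [row["sku"] for row in batch])
print("rejected:", rejected)
```

Keeping rejected rows with their error messages, rather than silently dropping them, makes it easy to route them to a review sheet or alert.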

AI Workflow Best Practices

Successful AI integration requires thoughtful prompt engineering and output handling. Structure prompts to generate consistent, parseable responses, implement fallback logic for AI service outages, and consider cost optimization when working with paid AI APIs. The Structured Output Parser proves invaluable for transforming AI-generated content into reliable automation data.
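n8n's Structured Output Parser validates model output against a schema you define. The Python sketch below applies the same idea by hand and adds a fallback for malformed replies. The sentiment labels, the confidence range, and the `FALLBACK` value are assumptions for illustration, not the parser's actual behavior.

```python
import json

# Illustrative labels and fallback; adjust to your own prompt's contract.
ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}
FALLBACK = {"sentiment": "neutral", "confidence": 0.0, "needs_review": True}

def parse_sentiment(raw: str) -> dict:
    """Extract the JSON object from a model reply and validate it."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        return dict(FALLBACK)  # no JSON object in the reply at all
    try:
        data = json.loads(raw[start:end + 1])
    except json.JSONDecodeError:
        return dict(FALLBACK)  # malformed JSON
    sentiment = data.get("sentiment")
    if not isinstance(sentiment, str) or sentiment not in ALLOWED_SENTIMENTS:
        return dict(FALLBACK)  # missing or unexpected label
    confidence = data.get("confidence")
    if not isinstance(confidence, (int, float)) or not 0 <= confidence <= 1:
        return dict(FALLBACK)  # missing or out-of-range score
    return {"sentiment": sentiment, "confidence": float(confidence), "needs_review": False}

print(parse_sentiment('Sure! {"sentiment": "positive", "confidence": 0.92}'))
print(parse_sentiment("I would say it is positive."))
```

Flagging fallbacks with `needs_review` lets a downstream IF node send doubtful results to a human instead of acting on them automatically.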

Pros and Cons

Advantages

- Open-source platform with complete customization control

- Self-hosting options for enhanced data privacy and security

- Flexible pricing, with a free self-hosted Community Edition alongside paid cloud and enterprise plans

- Extensive node library covering most integration scenarios

- Powerful workflow design capabilities for complex automation

- Active community support and regular platform updates

- Strong API connectivity with authentication flexibility

Disadvantages

- Steeper learning curve than simplified automation tools

- Technical knowledge required for advanced customizations

- Self-hosting demands infrastructure management overhead

- Documentation sometimes assumes technical background

- Limited pre-built templates compared to commercial alternatives

Conclusion

Mastering n8n through the 80/20 approach provides a strategic path to workflow automation proficiency. By focusing on these 13 core nodes, you can build sophisticated automations that streamline operations, reduce manual effort, and create significant business value. The platform's open-source nature and self-hosting capabilities make it particularly attractive for organizations with specific security requirements or customization needs. As you progress from basic triggers to advanced AI integration, remember that successful automation combines technical capability with thoughtful design – start simple, test thoroughly, and scale deliberately. Whether you're automating marketing workflows, data processing pipelines, or customer service operations, n8n's flexible architecture adapts to your unique requirements while maintaining the power and control essential for enterprise-grade automation solutions.

Frequently Asked Questions

What is n8n and how does it work?

n8n is an open-source workflow automation platform that connects apps and services using a visual interface. It uses nodes to represent automation steps and executes workflows from left to right with data passing between connected nodes.

How does n8n compare to Zapier or Make?

Unlike proprietary tools such as Zapier and Make, n8n is open-source and can be self-hosted. It provides greater customization control and data privacy, though it has a steeper learning curve than simpler alternatives like Zapier.

Can I use n8n for free?

Yes. The self-hosted Community Edition is free and includes the core workflow features. n8n Cloud and the enterprise plans are paid: they add managed hosting, higher execution limits, and enterprise features. For most personal and small-business automations, the free self-hosted version is sufficient.

What are the most important n8n nodes to learn first?

Start with trigger nodes (Manual Trigger, Schedule Trigger, Webhook), data processing nodes (Edit Fields/Set, IF, Code), and connectivity nodes (HTTP Request). These cover roughly 80% of common automation scenarios and provide a solid foundation.

What are the system requirements for running n8n?

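Self-hosting n8n requires either Node.js (to install it with npm) or Docker, which packages everything n8n needs. Small workflows run comfortably on modest hardware. n8n Cloud needs no local installation at all, and the Docker image makes it straightforward to deploy n8n on most cloud or on-premises platforms.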