Annotation

- Introduction

- History and Fundamentals of AI

- AI Workflow Stages

- AI Development Tools

- Pros and Cons

- Conclusion

- Frequently Asked Questions

AI Workflow Guide: Data Preparation to Model Deployment Strategies

A complete guide to AI workflows covering data preparation, model training, optimization, and deployment strategies using tools like PyTorch,

Introduction

Artificial intelligence has transformed from theoretical concept to practical tool across industries. Understanding AI workflows – the systematic processes that guide AI development from raw data to production deployment – is essential for building effective AI solutions. This comprehensive guide explores each stage of the AI lifecycle, providing insights into tools, techniques, and best practices that ensure successful implementation of machine learning and deep learning projects.

History and Fundamentals of AI

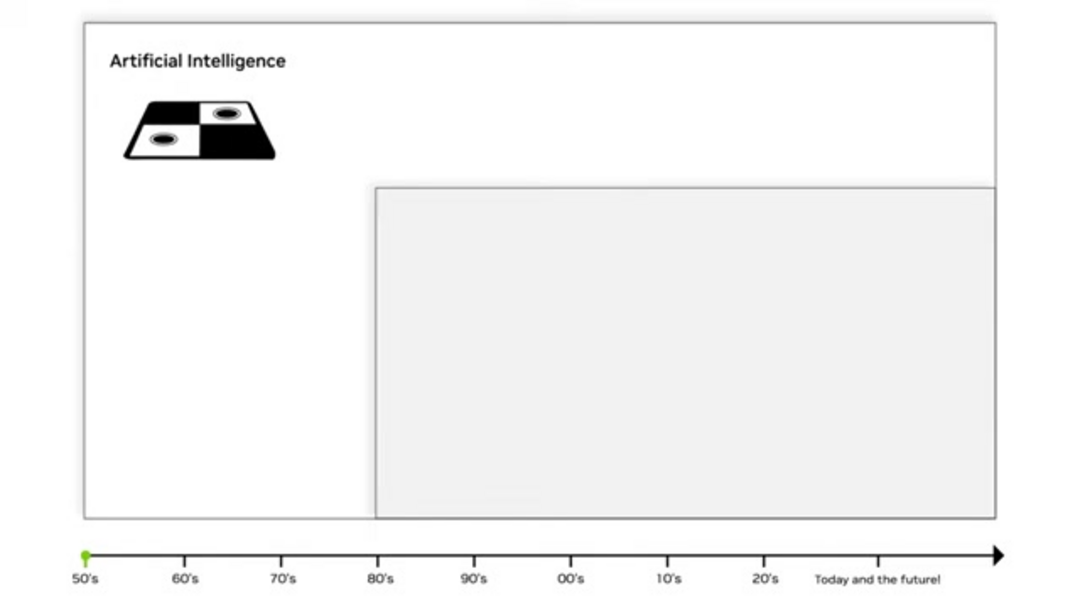

Artificial Intelligence represents the broad field of computer science focused on creating systems capable of performing tasks that typically require human intelligence. This encompasses learning, reasoning, problem-solving, perception, and language understanding. The journey began in the 1950s with rule-based systems that followed explicit programming instructions, but modern AI has evolved significantly beyond these early limitations.

The progression from symbolic AI to today's advanced systems represents decades of research and technological advancement. Early AI systems struggled with real-world complexity, but the emergence of machine learning marked a pivotal shift toward data-driven approaches that could adapt and improve over time. Today's AI landscape includes specialized AI automation platforms that streamline development processes.

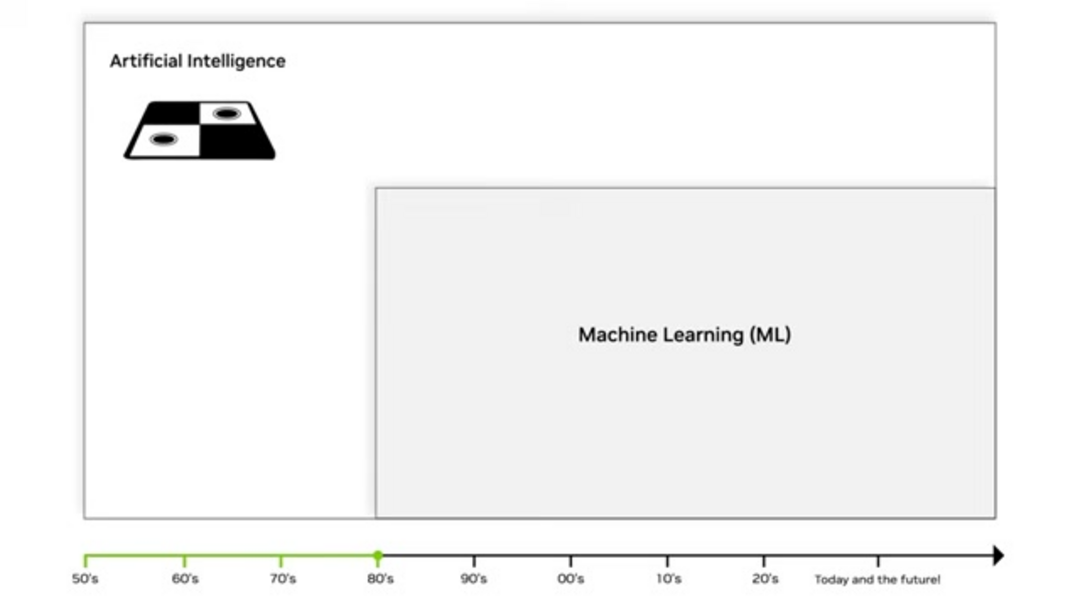

Machine Learning: The Statistical Revolution

Machine Learning emerged in the 1980s as a transformative approach that uses statistical methods to enable computers to learn from data without explicit programming. Unlike traditional software that follows fixed rules, ML algorithms identify patterns and relationships within data to make predictions or decisions. This statistical foundation allows models to improve their performance as they process more information.

Key ML techniques include linear regression for predicting continuous values and classification algorithms like decision trees for categorical outcomes. The "bag of words" approach revolutionized text analysis by treating documents as unordered word collections, enabling applications like spam filtering and sentiment analysis. These methods form the backbone of many AI writing tools and content generation systems.

Deep Learning and Neural Networks

Deep Learning represents a significant advancement within machine learning, utilizing multi-layered neural networks to automatically learn features from raw data. The "deep" in deep learning refers to the multiple layers through which data is transformed, enabling the system to learn increasingly abstract representations. This approach eliminates the need for manual feature engineering, which was a major bottleneck in traditional machine learning.

Three key factors drove the deep learning revolution: advanced GPU hardware that accelerated computation, massive datasets from digital sources, and improved training algorithms. Deep neural networks excel at complex tasks like image recognition, natural language processing, and speech recognition, powering modern AI chatbots and virtual assistants.

Generative AI and Modern Applications

Generative AI represents the current frontier, enabling systems to create original content rather than simply analyzing or classifying existing data. Large Language Models (LLMs) like GPT-4 demonstrate remarkable capabilities in generating human-like text, while diffusion models produce high-quality images from text descriptions. This generative capability opens new possibilities for creative applications and content production.

Modern generative AI applications span multiple domains, including automated content creation, personalized marketing, code generation, and artistic expression. These systems learn the underlying patterns and structures of their training data, then generate new examples that follow similar patterns. The rise of AI image generators demonstrates the practical impact of these technologies.

AI Workflow Stages

An AI workflow provides a structured framework for developing, deploying, and maintaining artificial intelligence systems. This systematic approach ensures consistency, reproducibility, and efficiency throughout the project lifecycle. A well-defined workflow typically includes data preparation, model development, optimization, deployment, and monitoring stages, each with specific tasks and deliverables.

Following a standardized workflow helps teams manage complexity, track progress, and maintain quality throughout the development process. This structured approach is particularly important when working with complex AI model hosting environments and deployment pipelines.

Data Preparation: Foundation for Success

Data preparation transforms raw, unstructured data into a clean, organized format suitable for model training. This critical phase typically consumes 60-80% of the total project time but directly impacts model performance. The principle "garbage in, garbage out" emphasizes that even sophisticated algorithms cannot compensate for poor-quality data.

Key data preparation steps include collecting diverse data sources, handling missing values through imputation techniques, identifying and addressing outliers, standardizing formats across datasets, and removing duplicate records. Feature engineering creates new variables from existing data, while normalization ensures consistent scaling. Proper data preparation lays the groundwork for effective AI APIs and SDKs integration.

Model Training and Algorithm Selection

Model training involves teaching algorithms to recognize patterns and relationships within prepared datasets. This process uses mathematical optimization to adjust model parameters, minimizing the difference between predictions and actual outcomes. The choice of algorithm depends on the problem type, data characteristics, and performance requirements.

Supervised learning uses labeled examples to train classification and regression models, while unsupervised learning identifies patterns in unlabeled data through clustering and dimensionality reduction. Reinforcement learning trains agents through trial-and-error interactions with environments. Each approach requires different training strategies and evaluation metrics to ensure robust performance.

Model Optimization Techniques

Model optimization fine-tunes trained models to improve performance, efficiency, and suitability for deployment. This iterative process addresses issues like overfitting, where models perform well on training data but poorly on new examples. Optimization balances model complexity with generalization capability to achieve the best practical results.

Common optimization techniques include hyperparameter tuning to find optimal learning rates and network architectures, model pruning to remove unnecessary parameters, quantization to reduce precision for faster inference, and knowledge distillation to transfer learning from large models to smaller, more efficient versions. These techniques are essential for preparing models for production environments and AI agents and assistants applications.

Deployment and Inference Strategies

Deployment moves trained models from development environments to production systems where they can generate predictions on new data. This phase requires careful consideration of inference latency (response time), throughput (requests processed per second), and scalability (handling increased load). Successful deployment ensures models deliver value in real-world applications.

Deployment strategies range from simple REST APIs to complex microservices architectures with automatic scaling. Monitoring systems track model performance, data drift, and concept drift to maintain accuracy over time. Continuous integration and deployment pipelines automate updates and ensure consistency across environments.

AI Development Tools

Essential tools streamline AI development, from data processing to deployment. Key platforms include RAPIDS for accelerated data science, PyTorch and TensorFlow for deep learning, and NVIDIA solutions for optimized inference.

RAPIDS for Accelerated Data Processing

RAPIDS provides GPU-accelerated data science libraries that significantly speed up data preparation and analysis. Built on Apache Arrow, RAPIDS offers familiar Python interfaces while leveraging parallel processing capabilities of modern GPUs. The cuDF library provides pandas-like functionality for data manipulation, while cuML accelerates machine learning algorithms.

PyTorch and TensorFlow Frameworks

PyTorch and TensorFlow dominate the deep learning landscape with complementary strengths. PyTorch emphasizes flexibility and intuitive debugging through dynamic computation graphs, making it popular for research and prototyping. TensorFlow offers production-ready deployment capabilities with robust tooling and extensive community support.

NVIDIA TensorRT for Inference Optimization

TensorRT optimizes trained models for high-performance inference on NVIDIA hardware. Through techniques like layer fusion, precision calibration, and kernel auto-tuning, TensorRT can achieve significant speed improvements without sacrificing accuracy. The platform supports models from multiple frameworks through ONNX interoperability.

NVIDIA Triton Inference Server

Triton Inference Server provides a unified platform for deploying models from multiple frameworks simultaneously. Its flexible architecture supports diverse model types, batch processing configurations, and ensemble models. Triton simplifies deployment complexity while maximizing hardware utilization through intelligent scheduling and concurrent execution.

Pros and Cons

Advantages

- Automates complex decision-making processes efficiently

- Improves accuracy and consistency over human operators

- Scales to handle massive datasets and computation requirements

- Provides data-driven insights and predictive capabilities

- Enables personalized experiences and recommendations

- Reduces operational costs through automation

- Accelerates innovation and product development cycles

Disadvantages

- Requires significant computational resources and infrastructure

- Dependent on high-quality, representative training data

- Complex to implement, maintain, and update properly

- Potential for biased outcomes based on training data

- Black box nature can make decisions difficult to interpret

Conclusion

Mastering AI workflows provides the foundation for successful artificial intelligence implementation across industries. From careful data preparation through optimized deployment, each stage contributes to building reliable, effective AI systems. The evolving tool ecosystem – including RAPIDS, PyTorch, TensorFlow, TensorRT, and Triton – continues to lower barriers while increasing capabilities. As AI technologies advance, understanding these workflows becomes increasingly essential for organizations seeking to leverage artificial intelligence for competitive advantage and innovation.

Frequently Asked Questions

What is the most critical step in AI workflows?

Data preparation is often considered the most critical step because model performance directly depends on data quality, completeness, and relevance. Poor data leads to unreliable models regardless of algorithm sophistication.

How does RAPIDS improve AI workflows?

RAPIDS accelerates data preparation and processing using GPU parallelism, reducing processing time from hours to minutes while maintaining familiar Python interfaces for data scientists.

What is the difference between PyTorch and TensorFlow?

PyTorch uses dynamic computation graphs for flexibility and debugging, while TensorFlow emphasizes production deployment with static graphs and extensive tooling. Both are powerful frameworks with different strengths.

Why is model optimization important?

Optimization improves model efficiency, reduces computational requirements, and enhances inference speed while maintaining accuracy – crucial for production deployment and user experience.

What does Triton Inference Server provide?

Triton provides unified deployment for multiple framework models, supporting concurrent execution, dynamic batching, and ensemble models while maximizing hardware utilization through intelligent scheduling.