Annotation

- Introduction

- The Hidden Environmental Cost of Modern AI

- Unsustainable Resource Consumption

- Quadratic Scaling: The Architectural Bottleneck

- Learning from Nature's Master Computer

- The Human Brain's Efficiency Blueprint

- Pseudo-Spiking: Bridging Biology and Technology

- Advanced Spiking Coding Techniques

- SpikingBrain: Practical Efficiency Demonstration

- Economic and Accessibility Implications

- Pros and Cons

- Broad Applications Across Industries

- Conclusion

Brain-Inspired AI: Sustainable Computing Revolution for Energy Efficiency

Brain-inspired AI models emulate human brain efficiency to reduce energy use by up to 97% with spiking neural networks.

Introduction

Artificial intelligence has achieved remarkable capabilities, from creative content generation to complex problem-solving, yet these advances come with an unsustainable environmental cost. Current AI systems consume staggering amounts of energy, threatening to stall technological progress. This exploration reveals how brain-inspired computing offers a sustainable path forward, drawing from nature's most efficient computational model to create AI that's both powerful and environmentally responsible.

The Hidden Environmental Cost of Modern AI

Unsustainable Resource Consumption

While AI models demonstrate impressive capabilities in coding, artistic creation, and sophisticated dialogue, these achievements mask a critical sustainability crisis. The transformer architecture, which enabled the current AI revolution, carries design trade-offs that make it exceptionally resource-intensive. By published estimates, training a large language model like GPT-3 consumed on the order of a thousand megawatt-hours of electricity, comparable to the annual usage of more than a hundred U.S. households, raising serious environmental concerns about continuous model scaling.

The resource demands extend beyond energy to include computational power, cooling requirements, and specialized hardware. As models grow larger and more complex, these requirements escalate steeply, creating barriers to entry for smaller organizations and researchers. This concentration of AI capability threatens innovation diversity and accessibility across the technology landscape, making efficient AI automation platforms increasingly valuable.

Quadratic Scaling: The Architectural Bottleneck

The transformer architecture's fundamental limitation lies in quadratic scaling, where the computational cost of attention increases with the square of the input sequence length. When processing a document, doubling its length quadruples the attention computation rather than simply doubling it. This quadratic cost growth creates significant barriers for applications requiring long-context understanding, such as legal document analysis, medical record processing, or literary analysis.

The technical explanation involves the attention mechanism's requirement to compare every token (roughly, a word or word fragment) with every other token in a sequence. For a sequence of n tokens, that means on the order of n × n comparisons, so the count grows with the square of the length. This architectural constraint necessitates either accepting performance limitations or investing in increasingly powerful computing infrastructure, driving up both economic and environmental costs for organizations implementing AI APIs and SDKs.
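To make the scaling concrete, the short Python sketch below counts the token-to-token comparisons that full self-attention performs at a few sequence lengths. The function name `attention_comparisons` is illustrative and not taken from any library, and the count deliberately ignores constant factors such as the number of attention heads and the hidden size.

```python
def attention_comparisons(seq_len: int) -> int:
    """Token-to-token score computations in full self-attention.

    Every token is scored against every token, so the count is seq_len squared.
    """
    return seq_len * seq_len


# Each doubling of the sequence length quadruples the comparison count.
for n in (1_000, 2_000, 4_000):
    print(f"{n:>5} tokens -> {attention_comparisons(n):>12,} comparisons")
```

Going from 1,000 to 4,000 tokens multiplies the input by four but the comparison count by sixteen, which is why long documents are disproportionately expensive.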

Learning from Nature's Master Computer

The Human Brain's Efficiency Blueprint

The human brain represents the gold standard for computational efficiency, performing complex cognitive tasks while consuming only about 20 watts of power – less than a standard light bulb. This remarkable efficiency results from millions of years of evolutionary optimization, offering valuable lessons for AI system design. Unlike conventional computers that maintain constant activity levels, the brain operates on an event-driven principle where neurons activate only when necessary.

This sparse activation pattern contrasts sharply with traditional AI approaches, where all components compute continuously regardless of relevance. The brain's secret lies not in faster computation but in strategic computation avoidance – processing only essential information when needed. This biological inspiration drives research into neuromorphic computing architectures that could revolutionize how we approach AI model hosting and deployment.
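As a rough illustration of event-driven activation, the sketch below uses a leaky integrate-and-fire neuron, a standard textbook model chosen here for simplicity rather than anything this article specifies. The function name and the `threshold` and `leak` values are assumptions made for the example.

```python
def lif_spikes(inputs, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron (illustrative parameters).

    The membrane potential decays by `leak` each step and accumulates the
    input. The neuron emits a spike (1) only when the potential reaches
    `threshold`, then resets; otherwise it stays silent (0).
    """
    potential = 0.0
    spikes = []
    for x in inputs:
        potential = potential * leak + x
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


# Weak inputs accumulate quietly; only threshold crossings produce events.
print(lif_spikes([0.5, 0.6, 0.0, 0.0, 1.2]))  # [0, 1, 0, 0, 1]
```

Downstream units only need to react at the steps where a spike occurs, which is the sense in which computation is avoided rather than accelerated.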

Pseudo-Spiking: Bridging Biology and Technology

Pseudo-spiking techniques represent a practical compromise between biological accuracy and computational feasibility. Rather than perfectly replicating neural behavior on conventional hardware, these methods approximate the brain's sparse, event-driven computation patterns. This approach enables significant energy savings while maintaining compatibility with existing GPU infrastructure, allowing immediate implementation benefits without requiring specialized neuromorphic chips.

While some critics argue that pseudo-spiking simply repackages existing matrix multiplication techniques, the practical benefits demonstrate its value as a transitional technology. By enabling software innovation to progress independently of hardware development, pseudo-spiking accelerates the adoption of efficient computing paradigms. This approach particularly benefits developers working with AI agents and assistants that require continuous operation.
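One way to picture the idea is a matrix-vector product that thresholds activations first and then skips the zeros. The following is a minimal sketch under that assumption, not the method of any particular model: the helper names `sparsify` and `sparse_matvec` and the threshold value are hypothetical, and the plain-Python loop only counts the multiply-adds that sparsity makes avoidable.

```python
def sparsify(activations, threshold):
    """Zero out activations whose magnitude falls below the threshold."""
    return [a if abs(a) >= threshold else 0.0 for a in activations]


def sparse_matvec(weights, activations):
    """Matrix-vector product that skips columns with a zero activation.

    Returns the output vector and the number of multiply-adds performed.
    """
    out = [0.0] * len(weights)
    ops = 0
    for j, a in enumerate(activations):
        if a == 0.0:
            continue  # no event on this input, so no work is done for it
        for i, row in enumerate(weights):
            out[i] += row[j] * a
            ops += 1
    return out, ops


weights = [[1.0, 2.0, 3.0, 4.0],
           [5.0, 6.0, 7.0, 8.0]]
acts = sparsify([0.05, 1.0, -0.02, 2.0], threshold=0.1)
output, ops = sparse_matvec(weights, acts)
print(output, ops)  # [10.0, 22.0] 4  (a dense product would need 8 multiply-adds)
```

How much of this counted saving turns into real speed or energy gains on a GPU depends on the kernels and hardware involved, which is part of the critics' point.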

Advanced Spiking Coding Techniques

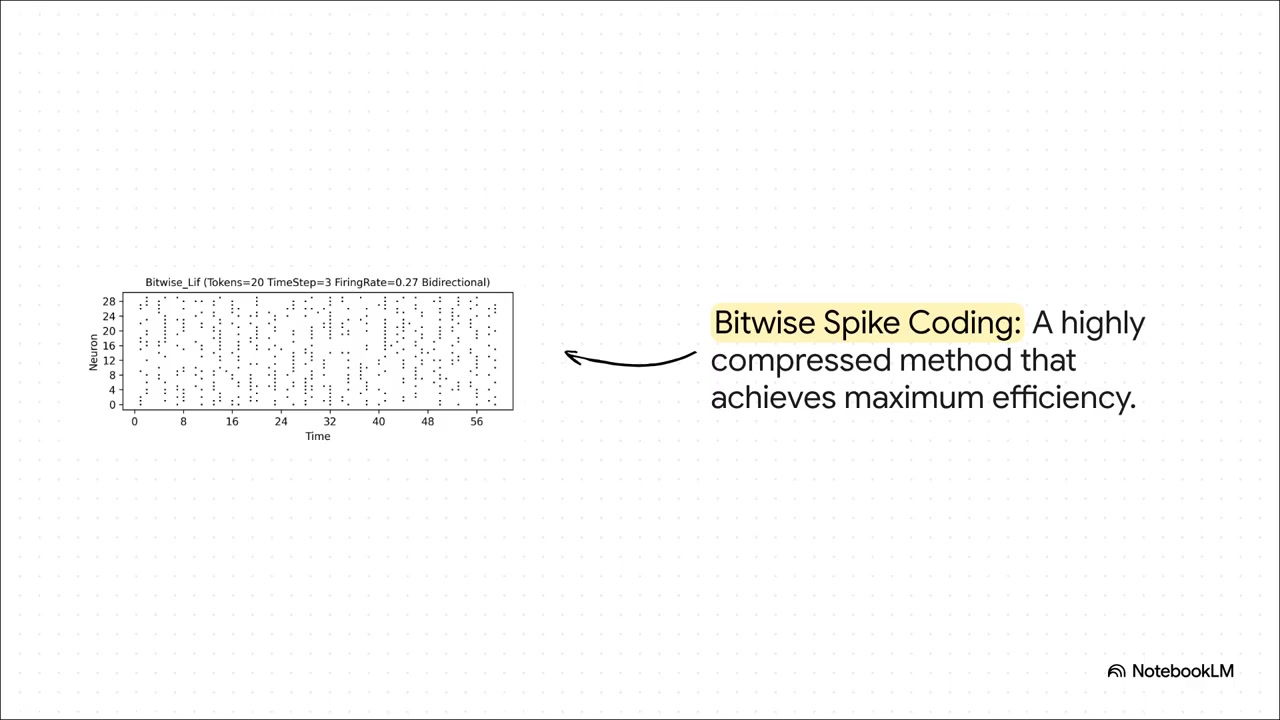

Spiking neural networks employ various coding strategies to optimize information transmission efficiency. Binary spike coding represents the simplest approach, using dense activation patterns to convey information over time. While straightforward to implement, this method proves relatively inefficient compared to more sophisticated alternatives that better mimic biological neural communication.

Ternary coding introduces inhibitory signals alongside excitatory ones, enabling more nuanced information representation with sparser activation patterns. This approach allows neural circuits to perform subtraction operations in addition to addition, creating more sophisticated computational capabilities. Bitwise coding represents the most advanced technique, achieving maximum efficiency through highly compressed spike patterns that pack substantial information into minimal neural activity.
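The three schemes can be sketched for a single integer activation as follows. This is a simplified reading of the descriptions above, assuming the activation has already been quantized to an integer; the function names and the 8-step width are illustrative, and the exact encodings used by any given model may differ.

```python
def binary_code(count: int) -> list[int]:
    """Binary {0, 1} coding: one spike per time step, `count` steps in total."""
    if count < 0:
        raise ValueError("binary coding cannot represent negative values")
    return [1] * count


def ternary_code(value: int) -> list[int]:
    """Ternary {-1, 0, 1} coding: the sign selects excitatory or inhibitory spikes."""
    sign = 1 if value >= 0 else -1
    return [sign] * abs(value)


def bitwise_code(count: int, width: int = 8) -> list[int]:
    """Bitwise coding: each time step carries one bit, most significant first."""
    if not 0 <= count < 2 ** width:
        raise ValueError("count does not fit in the given width")
    return [(count >> bit) & 1 for bit in reversed(range(width))]


print(len(binary_code(200)))   # 200 time steps, every one a spike
print(ternary_code(-3))        # [-1, -1, -1]
print(bitwise_code(200))       # [1, 1, 0, 0, 1, 0, 0, 0]: 8 steps, 3 spikes
```

In this toy setting the value 200 costs 200 spikes under binary coding but only 3 spikes across 8 time steps under bitwise coding, which illustrates why the compressed scheme is described as the most efficient.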

SpikingBrain: Practical Efficiency Demonstration

The SpikingBrain model exemplifies the practical potential of brain-inspired computing, achieving remarkable efficiency gains through strategic computation skipping. Experimental results demonstrate that SpikingBrain avoids approximately 70% of potential calculations while maintaining competitive performance levels. More impressively, the model generates its initial output over 100 times faster than traditional systems when processing lengthy documents.

This performance challenges the conventional wisdom that efficiency improvements necessarily compromise intelligence or accuracy. SpikingBrain demonstrates that careful architectural design can deliver both speed and reliability, addressing concerns about the intelligence-speed tradeoff that often plagues optimized systems. Such advancements could significantly impact applications requiring system optimization and real-time processing.

Economic and Accessibility Implications

Beyond environmental benefits, brain-inspired computing promises substantial economic advantages by reducing the computational resources required for AI deployment. Lower energy consumption translates directly to reduced operational costs, making advanced AI capabilities accessible to smaller organizations and individual researchers. This democratization potential could unleash innovation waves as more diverse perspectives contribute to AI development.

Energy reduction estimates reach 97% for specific computational tasks, potentially transforming AI from an exclusive, resource-intensive technology into a widely accessible tool. This shift could decentralize AI development, reducing dependence on major technology corporations and fostering more diverse innovation ecosystems. Such developments align well with tools focused on power management and efficiency optimization.
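A quick back-of-the-envelope calculation shows what these percentages mean as multipliers. The helper below is purely illustrative arithmetic on the figures quoted in this article (up to 97% energy reduction, roughly 70% of calculations skipped); it assumes the reduction applies to the whole workload, which real deployments may not achieve.

```python
def improvement_factor(reduction: float) -> float:
    """Convert a fractional reduction (e.g. 0.97) into an 'x times less' factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in the range [0, 1)")
    return 1.0 / (1.0 - reduction)


for label, reduction in (("energy, up to 97% lower", 0.97),
                         ("calculations, about 70% skipped", 0.70)):
    print(f"{label}: about {improvement_factor(reduction):.1f}x less")
```

A 97% reduction leaves 3% of the original energy use, a factor of about 33, whereas skipping 70% of calculations leaves 30%, a factor of about 3.3. The two figures therefore describe quite different quantities and should not be read interchangeably.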

Pros and Cons

Advantages

- Dramatic reduction in power consumption and environmental impact

- Increased accessibility through lower hardware requirements

- Potential for superior performance on complex cognitive tasks

- Compatibility with emerging neuromorphic hardware platforms

- Faster response times for real-time applications

- Reduced operational costs for continuous AI services

- Foundation for more biologically plausible AI systems

Disadvantages

- Implementation complexity requiring specialized expertise

- Current hardware limitations capping potential efficiency gains

- Unpredictable behavior patterns in some configurations

- Longer development cycles compared to traditional approaches

- Limited tooling and community support resources

Broad Applications Across Industries

Efficient AI technologies promise transformative impacts across multiple sectors by enabling deployment in resource-constrained environments. Healthcare could see AI-powered diagnostic tools reaching remote areas with limited electricity infrastructure. Environmental science might leverage efficient climate modeling that provides accurate predictions without excessive computational demands.

Additional application areas include financial analysis, educational tools, manufacturing optimization, and transportation systems – all benefiting from reduced operational costs and increased deployment flexibility. These advancements could work synergistically with system benchmarking tools to measure efficiency improvements accurately.

Conclusion

Brain-inspired computing represents a paradigm shift in artificial intelligence development, addressing the critical sustainability challenges facing current approaches. By learning from the human brain's exceptional efficiency, researchers are creating AI systems that deliver powerful capabilities without excessive resource consumption. While implementation challenges remain, the demonstrated benefits of models like SpikingBrain confirm the practical potential of this approach. As the field advances, brain-inspired AI promises to make artificial intelligence more accessible, sustainable, and capable – ultimately creating technology that serves humanity without compromising our environmental future.

Frequently Asked Questions

What is the main problem with current AI systems?

Current AI systems consume unsustainable amounts of energy and computational resources, with the training of a single large model using as much energy as many households do in a year. This environmental impact threatens continued AI progress and accessibility.

How efficient is the human brain compared to AI?

The human brain performs complex computations using only about 20 watts of power – less than a standard light bulb – while current AI systems require orders of magnitude more energy for similar tasks.

What are spiking neural networks?

Spiking neural networks mimic the brain's event-driven computation, where artificial neurons activate only when necessary rather than computing continuously. This sparse activation pattern dramatically reduces energy consumption.

How much energy can brain-inspired AI save?

Reported estimates indicate that brain-inspired approaches can reduce energy consumption by up to 97% for specific computational tasks while maintaining competitive performance compared to traditional AI systems.

What is SpikingBrain and how does it work?

SpikingBrain is an advanced AI model that implements brain-inspired computation, skipping approximately 70% of potential calculations while generating responses over 100 times faster than traditional systems for long documents.