Annotation

- Introduction

- Understanding Modern Software Testing

- Limitations of Traditional Testing Approaches

- AI's Strategic Role in Quality Assurance

- AI Technologies Reshaping Testing Methodologies

- Machine Learning Applications in QA

- Natural Language Processing in Testing Workflows

- Intelligent Automation Systems

- Generative AI Revolution in Testing Processes

- Automated Test Case Generation

- Intelligent Test Automation Scripting

- Pros and Cons

- Conclusion

- Frequently Asked Questions

AI in Software Testing 2025: Revolutionizing Quality Assurance with Automation

Discover how artificial intelligence is transforming software testing processes in 2025, enabling automated test case generation, self-healing

Introduction

Artificial Intelligence is fundamentally reshaping how software testing and quality assurance operate in 2025. As development cycles accelerate and applications grow more complex, traditional testing methods struggle to keep pace. AI-powered solutions are stepping in to automate repetitive tasks, broaden test coverage, and improve accuracy while reducing human error. This shift lets development teams deliver higher-quality software faster, marking a significant evolution in the software testing landscape.

Understanding Modern Software Testing

Software testing is the systematic process of evaluating an application to confirm that it meets its specified requirements and behaves correctly under varied conditions. This critical phase involves executing software components to find defects, errors, and gaps between expected and actual behavior. The primary objectives are to verify reliability, usability, performance, and overall user experience.

Contemporary software testing encompasses several crucial dimensions:

- Requirement Validation: Confirming the software aligns with business requirements and technical specifications that guided development

- Input Response Verification: Ensuring proper handling of valid, invalid, and edge-case inputs across all system interfaces

- Performance Benchmarking: Confirming that functionality completes within acceptable time and resource consumption limits

- Environmental Compatibility: Verifying installation, operation, and usability across intended deployment environments

- Security Assessment: Identifying vulnerabilities and ensuring data protection mechanisms function correctly
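
As an illustration of input response verification, the sketch below tests one small function against valid, invalid, and edge-case inputs. The function `parse_quantity` and its accepted range of 1 to 100 are hypothetical, chosen only to make the three input classes concrete.

```python
import unittest


def parse_quantity(raw: str) -> int:
    """Hypothetical function under test: parse an order quantity from 1 to 100."""
    value = int(raw.strip())  # raises ValueError for non-numeric input
    if not 1 <= value <= 100:
        raise ValueError(f"quantity out of range: {value}")
    return value


class ParseQuantityTests(unittest.TestCase):
    def test_valid_input(self):
        self.assertEqual(parse_quantity("5"), 5)

    def test_invalid_input(self):
        # Non-numeric, empty, and non-integer strings must all be rejected.
        for raw in ("abc", "", "3.5"):
            with self.subTest(raw=raw):
                with self.assertRaises(ValueError):
                    parse_quantity(raw)

    def test_edge_cases(self):
        # Boundaries of the accepted range and surrounding whitespace.
        self.assertEqual(parse_quantity("1"), 1)
        self.assertEqual(parse_quantity("100"), 100)
        self.assertEqual(parse_quantity(" 7 "), 7)
        # Values just outside the range.
        for raw in ("0", "101"):
            with self.subTest(raw=raw):
                with self.assertRaises(ValueError):
                    parse_quantity(raw)
```

Running `python -m unittest` against a file containing this class executes all three tests.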

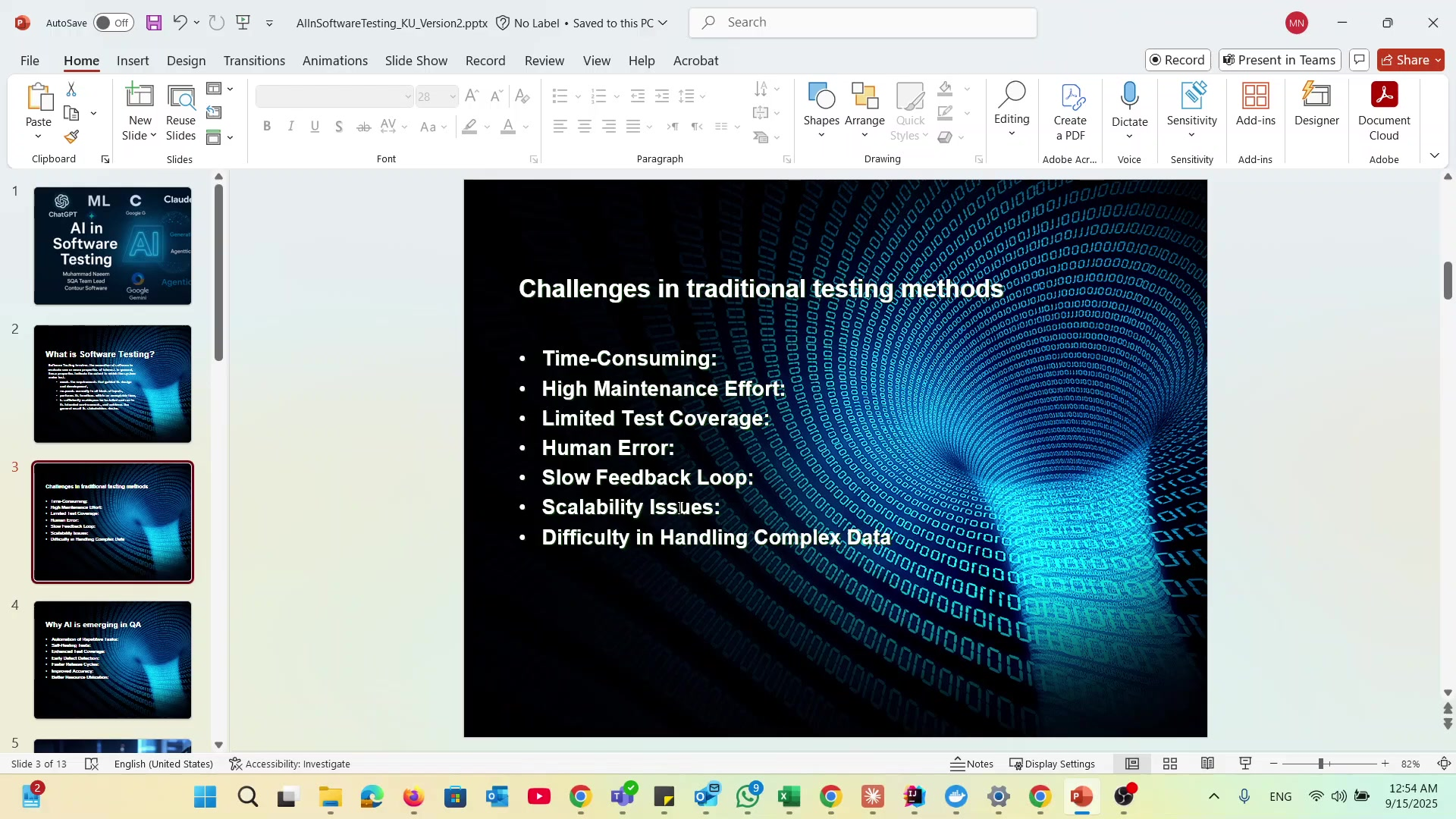

Limitations of Traditional Testing Approaches

Conventional software testing methodologies face numerous challenges that impact both efficiency and effectiveness. These limitations become particularly pronounced as applications scale in complexity and development teams adopt agile methodologies with rapid release cycles. Understanding these constraints helps contextualize why AI solutions are gaining traction in quality assurance workflows.

Key limitations include:

- Time-Intensive Processes: Manual testing requires substantial time investment, especially for complex enterprise applications with numerous integration points

- Maintenance Overhead: Test scripts and environments demand continuous updates as applications evolve, consuming significant resources

- Incomplete Test Coverage: Achieving comprehensive testing across all scenarios remains challenging, leaving potential defects undetected

- Human Error Factors: Manual testing introduces variability and oversight risks that automated systems can mitigate

- Delayed Feedback Cycles: Slow communication between testing and development teams prolongs issue resolution timelines

- Scalability Constraints: Expanding testing efforts to match application growth presents logistical and resource challenges

- Complex Data Management: Handling diverse test data scenarios becomes increasingly difficult as data volumes and varieties expand

AI's Strategic Role in Quality Assurance

Artificial Intelligence addresses traditional testing limitations through advanced automation and analytical capabilities. The integration of AI in quality assurance represents a paradigm shift from reactive testing to proactive quality engineering. This transformation enables organizations to detect issues earlier, reduce testing costs, and accelerate time-to-market while maintaining high quality standards.

AI delivers compelling advantages for modern software testing:

- Automated Task Execution: AI handles repetitive testing activities, freeing human testers for complex analysis and exploratory testing

- Adaptive Test Maintenance: Self-healing capabilities automatically adjust tests to accommodate application changes, reducing maintenance efforts

- Comprehensive Coverage: AI algorithms generate extensive test scenarios, improving coverage across functional and non-functional requirements

- Predictive Defect Identification: Machine learning models analyze code patterns to identify high-risk areas before testing begins

- Accelerated Release Cadence: Efficient testing automation enables faster deployment cycles without compromising quality

- Enhanced Accuracy: AI systems minimize human error, delivering more reliable and consistent test results

- Optimized Resource Allocation: Intelligent workflow management ensures testing resources focus on highest-impact areas

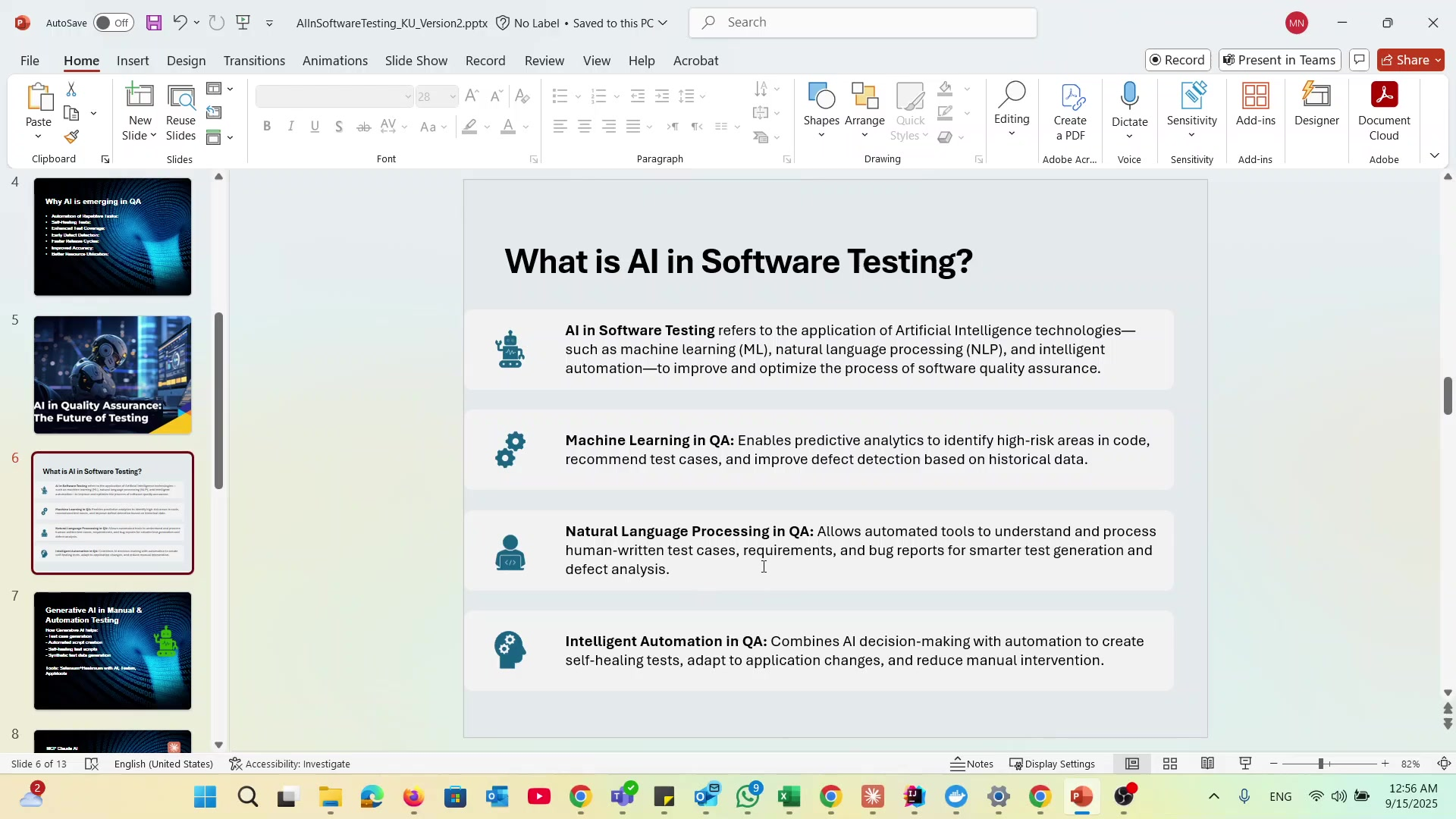

AI Technologies Reshaping Testing Methodologies

Machine Learning Applications in QA

Machine Learning, a core subset of AI, enables predictive analytics that improve test prioritization and defect detection. ML algorithms analyze historical testing data, code patterns, and defect records to identify high-risk components and recommend test strategies. This data-driven approach shifts testing from uniform coverage to risk-based prioritization, concentrating effort where defects are most likely.
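
A minimal sketch of risk-based prioritization follows. It is a hand-written scoring heuristic, not a trained model: a production system would learn the weights from historical data. The names `HistoryRecord`, `risk_score`, and `change_weight`, and the sample figures, are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class HistoryRecord:
    name: str
    runs: int                 # historical executions
    failures: int             # historical failures
    covered_files: frozenset  # source files the test exercises


def risk_score(test: HistoryRecord, changed_files: set, change_weight: float = 2.0) -> float:
    # Smoothed failure rate, so tests with little history are not scored as zero risk.
    failure_rate = (test.failures + 1) / (test.runs + 2)
    # Boost tests that touch files modified in the current change.
    overlap = len(test.covered_files & changed_files)
    return failure_rate * (1 + change_weight * overlap)


def prioritize(tests, changed_files):
    """Return tests ordered from highest to lowest estimated risk."""
    return sorted(tests, key=lambda t: risk_score(t, changed_files), reverse=True)


history = [
    HistoryRecord("test_login", runs=200, failures=4, covered_files=frozenset({"auth.py"})),
    HistoryRecord("test_checkout", runs=150, failures=30, covered_files=frozenset({"cart.py", "payment.py"})),
    HistoryRecord("test_profile", runs=180, failures=2, covered_files=frozenset({"profile.py"})),
]
ordered = [t.name for t in prioritize(history, changed_files={"auth.py"})]
```

With this sample data, the historically unstable `test_checkout` ranks first, and `test_login` ranks above `test_profile` because it covers the changed file.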

Machine Learning delivers significant value across multiple testing dimensions:

- Predictive Test Prioritization: Identifying defect-prone code areas to concentrate testing resources where they're most needed

- Intelligent Test Optimization: Recommending the most effective test cases based on historical success patterns and coverage gaps

- Automated Anomaly Detection: Recognizing unusual system behaviors or performance deviations that may indicate underlying defects

- Regression Test Selection: Determining which tests to execute based on code changes and historical defect patterns

- Test Flakiness Prediction: Identifying tests with inconsistent results to improve test suite reliability
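
Flakiness can be approximated before any model is trained. The sketch below flags a test as flaky when the same commit produced both a pass and a fail; this is a simple counting heuristic, and the `(test_name, commit_sha, passed)` record layout is an assumption. The labels it produces could serve as training data for a predictive model.

```python
from collections import defaultdict


def find_flaky_tests(results):
    """results: iterable of (test_name, commit_sha, passed) tuples."""
    outcomes = defaultdict(set)
    for name, sha, passed in results:
        outcomes[(name, sha)].add(passed)
    # A test that both passed and failed on identical code is inconsistent.
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})


runs = [
    ("test_search", "a1b2c3", True),
    ("test_search", "a1b2c3", False),  # same commit, different outcome
    ("test_export", "a1b2c3", False),
    ("test_export", "d4e5f6", True),   # outcome changed with the code, not flaky
]
flaky = find_flaky_tests(runs)
```

Here only `test_search` is reported, because `test_export` changed outcome across different commits.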

Natural Language Processing in Testing Workflows

Natural Language Processing bridges the gap between human communication and automated testing systems. NLP technologies interpret requirements documents, user stories, and defect reports to generate relevant test cases and identify testing priorities. This capability significantly reduces the manual effort required to translate business requirements into executable test scenarios.

NLP applications enhance testing processes through:

- Automated Test Generation: Creating test cases directly from natural language requirements and user stories

- Requirements Analysis: Parsing and interpreting software specifications to ensure comprehensive test coverage

- Intelligent Bug Triage: Analyzing defect reports to categorize, prioritize, and route issues appropriately

- Test Documentation: Generating test plans, cases, and reports from natural language inputs

- Accessibility Testing: Evaluating user interface text for clarity, consistency, and compliance with accessibility standards
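
To make the requirements-to-tests step concrete, the sketch below parses acceptance criteria written in Given/When/Then form into a structured test outline. It is a rule-based stand-in for an NLP model and assumes the criteria already follow that format; the function name and sample text are illustrative.

```python
import re

# Matches lines that start with a step keyword followed by the step text.
STEP_PATTERN = re.compile(r"^\s*(given|when|then|and)\b\s*(.+)$", re.IGNORECASE)


def parse_acceptance_criteria(text: str) -> dict:
    """Group Given/When/Then lines; an 'And' line extends the preceding group."""
    steps = {"given": [], "when": [], "then": []}
    current = None
    for line in text.splitlines():
        match = STEP_PATTERN.match(line)
        if not match:
            continue  # ignore blank lines and free-form notes
        keyword, body = match.group(1).lower(), match.group(2).strip()
        if keyword != "and":
            current = keyword
        if current is not None:
            steps[current].append(body)
    return steps


criteria = """
Given a registered user
And the account is active
When the user submits valid credentials
Then the dashboard is displayed
"""
outline = parse_acceptance_criteria(criteria)
```

The resulting `outline` has two preconditions under `given`, one action under `when`, and one expected result under `then`, which a tester or a generator can turn into an executable test.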

Intelligent Automation Systems

Intelligent Automation combines AI decision-making with robotic process automation to create adaptive testing systems that learn and improve over time. These systems automatically adjust to application changes, optimize test execution sequences, and reduce manual intervention requirements. The integration of AI with automation platforms creates testing environments that become more efficient with each execution cycle.

Intelligent Automation delivers transformative capabilities:

- Self-Healing Test Scripts: Automatically updating test automation scripts when application interfaces or behaviors change

- Dynamic Test Adaptation: Modifying test strategies based on real-time system feedback and environmental conditions

- Automated Workflow Optimization: Streamlining test execution sequences to minimize resource consumption and maximize coverage

- Predictive Maintenance: Identifying potential test environment issues before they impact testing activities

- Cross-Platform Testing: Adapting test execution across different devices, browsers, and operating systems
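
One common way to approximate self-healing is an ordered list of fallback locators. The sketch below shows that idea against a simulated page rather than a real browser; `FakePage` and `find_with_healing` are hypothetical names, and commercial tools typically use richer signals than a fixed fallback list.

```python
from typing import Optional


class FakePage:
    """Stand-in for a browser page: maps (strategy, value) to an element label."""

    def __init__(self, elements: dict):
        self._elements = elements

    def find(self, strategy: str, value: str) -> Optional[str]:
        return self._elements.get((strategy, value))


def find_with_healing(page, locators, log):
    """Try locators in priority order and record when a fallback was needed."""
    for index, (strategy, value) in enumerate(locators):
        element = page.find(strategy, value)
        if element is not None:
            if index > 0:
                # The primary locator broke; report it so the script can be updated.
                log.append(f"healed: used fallback {strategy}={value}")
            return element
    raise LookupError(f"no locator matched: {locators}")


# The button's id changed in a new release, but its CSS selector still matches.
page = FakePage({("css", "button[type=submit]"): "<submit button>"})
locators = [("id", "submit-btn"), ("css", "button[type=submit]")]
heal_log = []
element = find_with_healing(page, locators, heal_log)
```

Logging each healing event matters: a test that silently heals can hide a real regression, so the log gives reviewers a chance to confirm the change was intended.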

Generative AI Revolution in Testing Processes

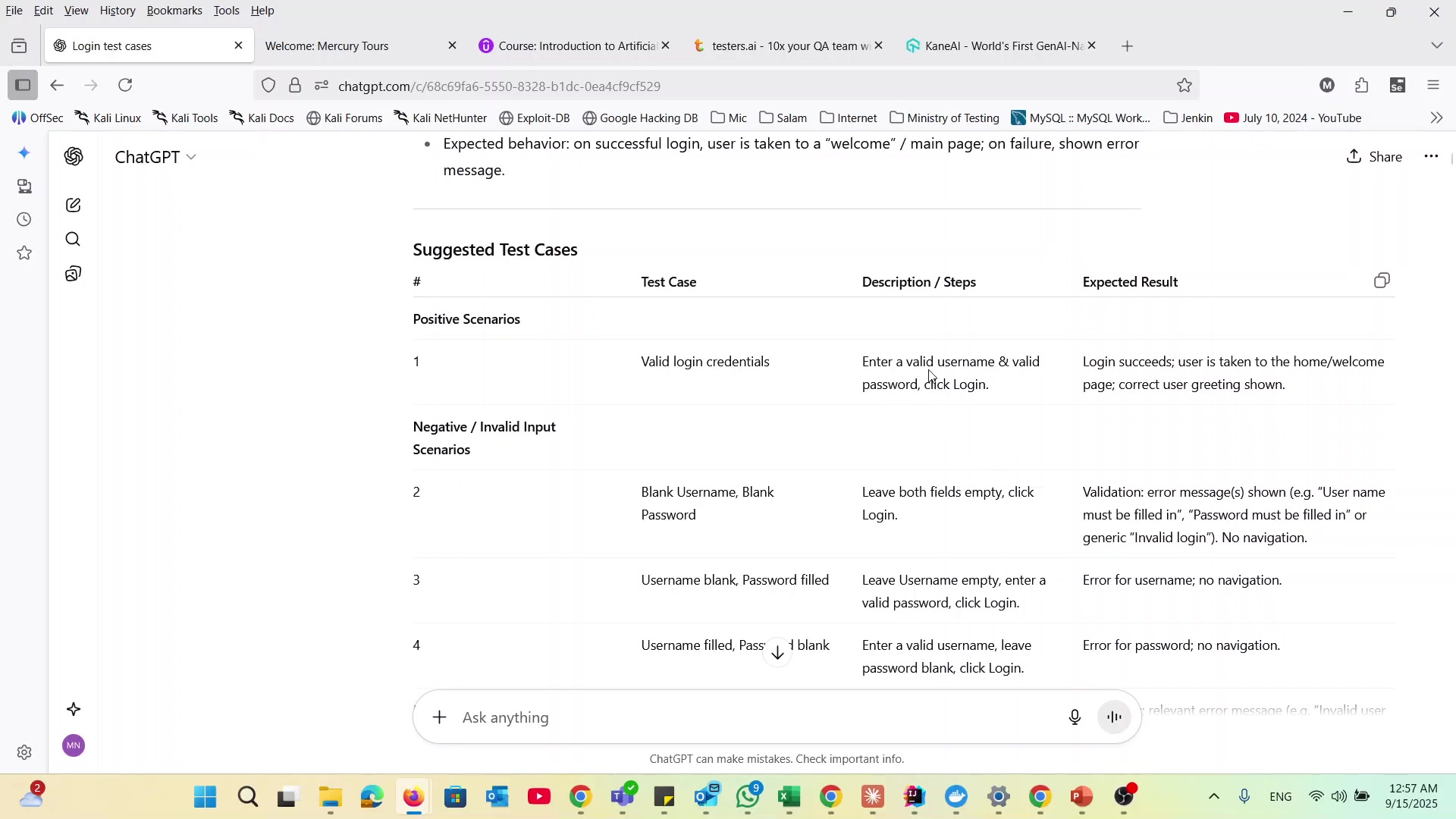

Automated Test Case Generation

Generative AI tools such as ChatGPT are transforming test case creation by generating test scenarios directly from requirements documentation. These systems analyze functional specifications, user stories, and acceptance criteria to produce detailed test cases covering positive, negative, and edge-case scenarios. This automation significantly reduces the time and effort required for test design while improving coverage consistency.
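
The workflow can be sketched as: build a prompt from the requirement, call a model, then validate what comes back. The code below assumes the model is asked to reply with a JSON array; `generate` is a placeholder for whatever model client a team uses (no specific vendor API is implied), and the key names are illustrative.

```python
import json
from typing import Callable

REQUIRED_KEYS = {"title", "steps", "expected", "category"}
CATEGORIES = {"positive", "negative", "edge"}


def build_prompt(requirement: str) -> str:
    return (
        "Write test cases for the requirement below. "
        "Respond with a JSON array of objects with the keys "
        "title, steps, expected, and category (positive, negative, or edge).\n\n"
        f"Requirement: {requirement}"
    )


def is_valid_case(case) -> bool:
    # Keep only well-formed cases; model output is never trusted blindly.
    return (
        isinstance(case, dict)
        and REQUIRED_KEYS.issubset(case)
        and str(case["category"]) in CATEGORIES
    )


def generate_test_cases(requirement: str, generate: Callable[[str], str]) -> list:
    """`generate` is a hypothetical callable that sends a prompt and returns text."""
    raw = generate(build_prompt(requirement))
    cases = json.loads(raw)  # raises ValueError if the reply is not valid JSON
    if not isinstance(cases, list):
        return []
    return [case for case in cases if is_valid_case(case)]
```

Passing the model in as a callable keeps the validation logic testable with a stub, and the filtering step is where human review can be attached before generated cases enter a suite.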

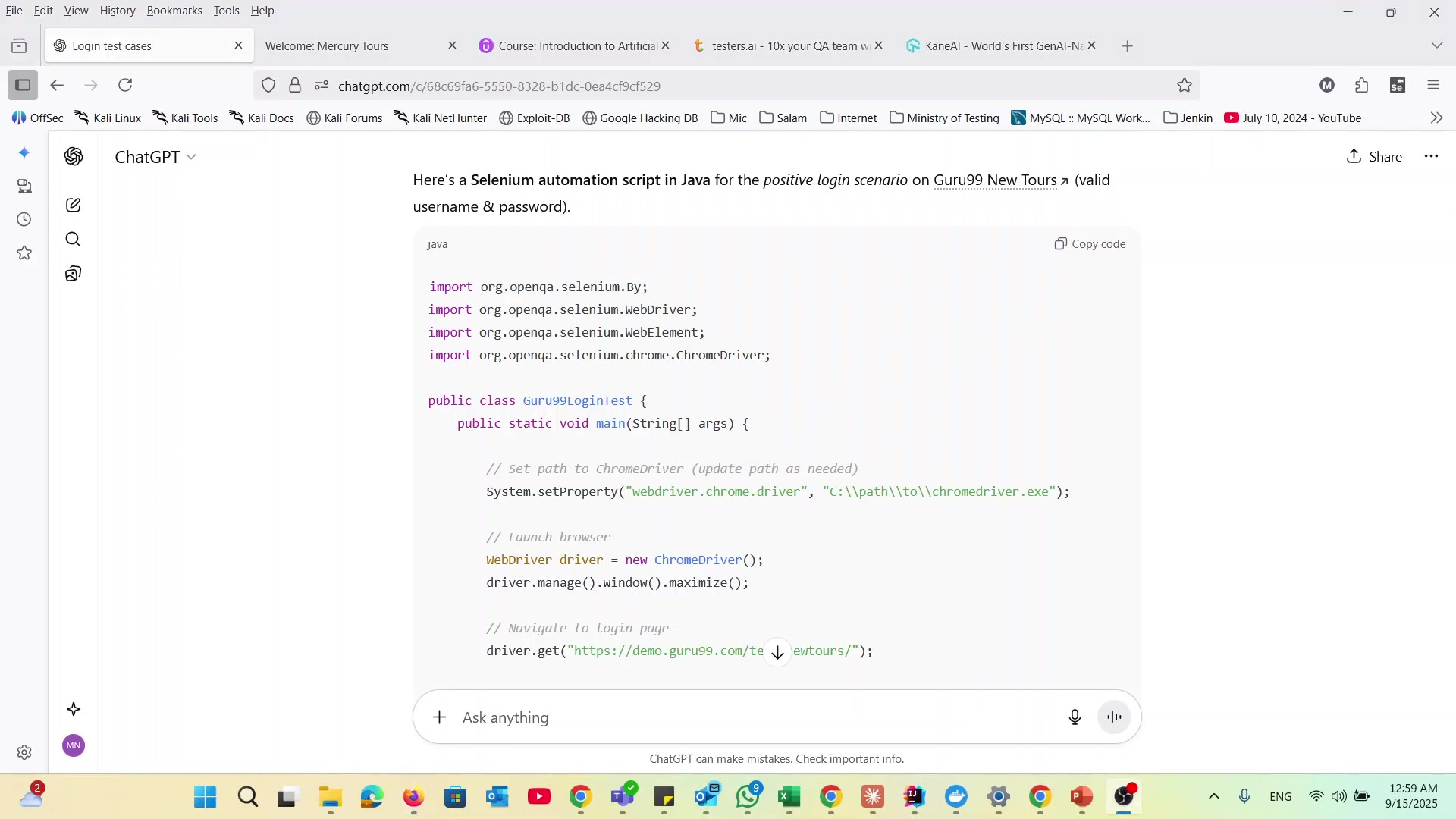

Intelligent Test Automation Scripting

Generative AI accelerates test automation by creating and maintaining scripts for Selenium and other automation frameworks. These systems can generate maintainable test code that follows common best practices and includes error handling, although the output still benefits from review. The automation extends beyond initial script creation to include ongoing maintenance as applications evolve.

The automated scripting process typically involves:

- Requirement Analysis: Understanding the testing objectives and application functionality to be automated

- Framework Selection: Choosing appropriate automation frameworks and tools based on application technology stack

- Script Generation: Creating executable test scripts with proper element locators, synchronization, and validation points

- Code Review: Evaluating generated scripts for maintainability, performance, and alignment with coding standards

- Execution Framework: Integrating scripts into continuous testing pipelines with proper reporting and failure analysis

- Maintenance Automation: Implementing self-healing mechanisms to automatically update scripts when application changes occur

Pros and Cons

Advantages

- Accelerates test case generation from requirements and specifications

- Automates creation and maintenance of test automation scripts

- Enables self-healing capabilities for test script adaptation

- Generates realistic synthetic test data for various scenarios

- Improves test coverage through comprehensive scenario generation

- Reduces manual effort in test design and documentation

- Enhances testing efficiency through intelligent optimization

Disadvantages

- Performance depends heavily on training data quality and relevance

- Generated tests may contain inaccuracies or incomplete coverage

- Requires ongoing maintenance as applications and AI models evolve

- Lacks human intuition for complex edge cases and creative testing

- Potential security and privacy concerns when sensitive data is used to generate tests or test data

Conclusion

AI is fundamentally transforming software testing from a manual, reactive process to an intelligent, proactive quality engineering discipline. The integration of machine learning, natural language processing, and generative AI enables testing teams to achieve greater efficiency, coverage, and accuracy. While challenges remain regarding training data quality and maintenance requirements, the benefits significantly outweigh the limitations. As AI technologies continue to mature, they will increasingly become essential components of modern software development pipelines, enabling organizations to deliver higher-quality software faster while optimizing resource utilization. The future of software testing lies in the strategic combination of human expertise and artificial intelligence capabilities.

Frequently Asked Questions

What is AI in software testing?

AI in software testing refers to applying artificial intelligence technologies like machine learning, natural language processing, and intelligent automation to enhance quality assurance processes. It automates repetitive tasks, improves test coverage, reduces human error, and enables predictive defect detection.

How does AI improve test accuracy?

AI improves test accuracy by minimizing human error through automated analysis of large datasets, pattern recognition, and anomaly detection. Machine learning algorithms learn from historical testing data to identify defects more effectively and consistently than manual methods.

What are self-healing tests in AI testing?

Self-healing tests automatically adapt to application changes by updating test scripts when UI elements, workflows, or functionality change. This reduces maintenance overhead and ensures test suites remain functional as applications evolve.

Can generative AI create complete test cases?

Yes, generative AI can create comprehensive test cases from requirements by analyzing specifications and generating scenarios covering normal operation, error conditions, and edge cases. However, human review is recommended to ensure completeness and accuracy.

How does AI reduce testing time and costs?

AI reduces testing time and costs by automating repetitive tasks, generating test cases rapidly, and minimizing manual effort, leading to faster release cycles and optimized resource utilization.