Annotation

- Introduction

- Understanding Zero-Shot Text Classification

- Traditional vs Zero-Shot Classification Approaches

- Hugging Face Transformers Library Overview

- Enhancing Zero-Shot Classification Performance

- Practical Implementation Guide

- Hugging Face Pricing and Licensing

- Pros and Cons

- Real-World Applications and Use Cases

- Conclusion

Zero-Shot Text Classification with Hugging Face: Complete Practical Guide

Zero-shot text classification with Hugging Face allows categorizing text without training data. This guide covers implementation, benefits, and code

Introduction

Traditional text classification has long relied on extensive labeled datasets, requiring significant manual effort and resources. However, zero-shot text classification represents a paradigm shift in natural language processing, enabling AI models to categorize text into predefined classes without any prior training on labeled examples. This revolutionary approach leverages the power of pre-trained language models from Hugging Face's Transformers library, making text classification accessible even when labeled data is unavailable. This comprehensive guide explores practical implementation, benefits, and real-world applications of zero-shot classification for developers and data scientists.

Understanding Zero-Shot Text Classification

What is Zero-Shot Text Classification?

Zero-shot text classification represents an advanced machine learning technique where models categorize text into classes they've never encountered during training. Unlike traditional approaches that require extensive labeled datasets, zero-shot classification leverages the semantic understanding capabilities of large language models. These models, trained on massive text corpora, develop sophisticated representations of language relationships, allowing them to generalize to new classification tasks without additional training. This capability is particularly valuable in dynamic environments where categories frequently change or when labeled data is scarce.

The underlying mechanism involves comparing the semantic similarity between input text and candidate labels using the model's pre-existing knowledge. When you provide a text sample and potential categories, the model evaluates how closely the text aligns with each label based on its understanding of language patterns and contextual relationships. This approach eliminates the need for time-consuming data labeling and model retraining, making it ideal for rapid prototyping and deployment in production environments.

Traditional vs Zero-Shot Classification Approaches

Traditional text classification follows a supervised learning paradigm, requiring carefully curated datasets where each text example is manually labeled with its corresponding category. This process involves collecting thousands of examples, annotating them with appropriate labels, and training a specialized model that learns to recognize patterns associated with each category. While effective, this approach demands substantial resources and becomes impractical when dealing with emerging topics or rapidly changing classification needs.

Zero-shot classification fundamentally differs by utilizing models that have already developed comprehensive language understanding through pre-training on diverse text sources. These models can infer relationships between new text and candidate labels without specific training on the target categories. The advantages extend beyond just eliminating data labeling – zero-shot classification offers remarkable flexibility, allowing you to instantly adapt to new classification schemes by simply modifying the candidate labels. This makes it particularly valuable for applications in AI chatbots and conversational AI tools where user queries may span diverse topics.

Hugging Face Transformers Library Overview

The Hugging Face Transformers library has emerged as the definitive resource for modern natural language processing, providing streamlined access to state-of-the-art pre-trained models. This comprehensive library abstracts away the complexities of model architecture and implementation, allowing developers to focus on solving practical problems rather than technical details. For zero-shot classification specifically, Hugging Face offers optimized versions of popular models like BERT, RoBERTa, and DistilBERT, each with distinct strengths and performance characteristics.

What makes the library particularly powerful is its intuitive pipeline interface, which enables complex NLP tasks with minimal code. The zero-shot classification pipeline handles all the underlying complexity, including tokenization, model inference, and result interpretation, delivering a clean, user-friendly API. This accessibility has democratized advanced NLP capabilities, making them available to developers without deep expertise in machine learning or transformer architectures. The library's compatibility with various AI APIs and SDKs further enhances its utility in production environments.

Enhancing Zero-Shot Classification Performance

Selecting Optimal Pre-trained Models

Choosing the right pre-trained model significantly impacts zero-shot classification accuracy and performance. Different models excel in various scenarios based on their training data, architecture, and intended use cases. BERT (Bidirectional Encoder Representations from Transformers) remains a popular choice for its robust performance across diverse text types, having been trained on Wikipedia and book corpus data. Its bidirectional attention mechanism allows it to understand context from both directions, making it particularly effective for nuanced classification tasks.

RoBERTa (Robustly Optimized BERT Pretraining Approach) represents an optimized version that removes BERT's next-sentence prediction objective and employs more extensive training with larger batches and longer sequences. These optimizations often result in superior performance for zero-shot tasks. For resource-constrained environments, DistilBERT offers a compelling alternative – this distilled version maintains approximately 97% of BERT's performance while being 40% smaller and 60% faster, making it ideal for applications requiring rapid inference or deployment on limited hardware.

Strategic Candidate Label Formulation

The quality and formulation of candidate labels directly influence classification accuracy in zero-shot scenarios. Effective labels should be descriptive, unambiguous, and semantically distinct from one another. Instead of using single-word categories like "sports," consider more descriptive phrases like "professional sports news" or "amateur athletic activities" that provide clearer semantic signals to the model. This specificity helps the model better understand the intended categorization boundaries and reduces confusion between similar concepts.

When dealing with hierarchical categorization systems, you can structure labels to reflect these relationships. For example, instead of using flat labels like "basketball" and "football," you might implement a hierarchical approach with "sports - basketball - NBA" and "sports - football - NFL." This structured labeling can improve accuracy by leveraging the model's understanding of category relationships. Additionally, consider including negative examples or out-of-scope labels when appropriate, as this helps the model better distinguish between relevant and irrelevant classifications for your specific use case.

Domain-Specific Fine-Tuning Strategies

While zero-shot classification works remarkably well out-of-the-box, performance can be further enhanced through domain-specific fine-tuning when specialized terminology or context is involved. Fine-tuning involves additional training on a small dataset relevant to your specific domain, allowing the model to adapt its understanding to specialized vocabulary and concepts. This approach is particularly valuable for technical domains like medical literature, legal documents, or scientific papers where standard language models may struggle with domain-specific terminology.

The fine-tuning process typically requires a modest dataset of labeled examples from your target domain – often just a few hundred samples can yield significant improvements. During fine-tuning, the model adjusts its parameters to better recognize patterns and relationships specific to your domain while retaining its general language understanding capabilities. This hybrid approach combines the flexibility of zero-shot classification with the precision of domain adaptation, making it ideal for specialized applications in AI automation platforms and enterprise systems.

Practical Implementation Guide

Environment Setup and Installation

Getting started with zero-shot classification requires minimal setup, thanks to Hugging Face's well-designed ecosystem. Begin by installing the necessary Python packages using pip. The transformers library provides the core functionality, while pandas offers convenient data manipulation capabilities for handling text datasets. For optimal performance, ensure you're using a Python environment with version 3.7 or higher, and consider setting up GPU acceleration if available, as this can significantly speed up inference for larger datasets.

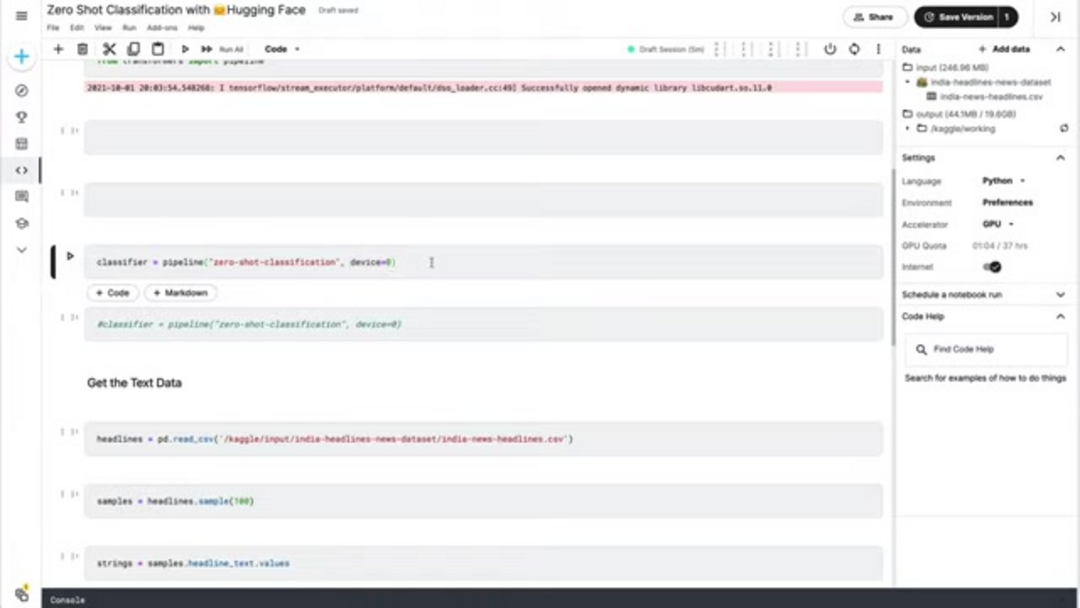

pip install transformers pandas torchAfter installation, import the necessary components in your Python script or notebook. The pipeline function from transformers provides the high-level interface for zero-shot classification, while pandas facilitates data loading and manipulation. If you have CUDA-compatible GPU available, PyTorch will automatically leverage it for accelerated computation, though CPU execution remains fully functional for smaller-scale applications.

from transformers import pipeline

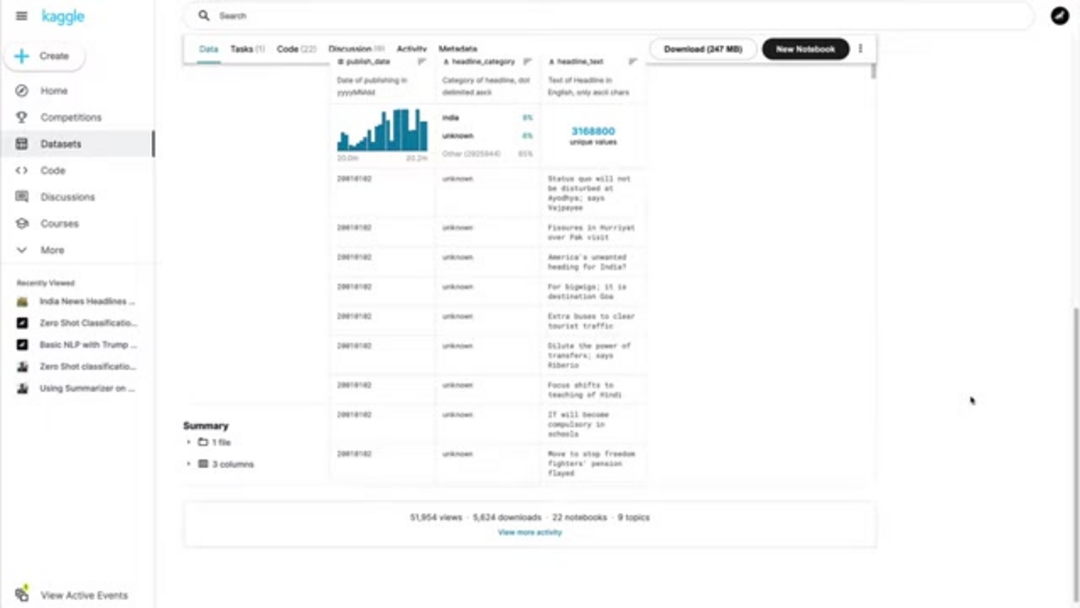

import pandas as pdData Preparation and Classifier Initialization

Proper data preparation is crucial for effective zero-shot classification. Begin by loading your text data from source files – common formats include CSV, JSON, or plain text files. For demonstration purposes, we'll assume a CSV file containing news headlines, but the approach generalizes to any text source. Ensure your text data is clean and properly formatted, as extraneous characters or formatting issues can impact classification accuracy.

# Load headline data from CSV

headlines_df = pd.read_csv('news_headlines.csv')

headline_samples = headlines_df['headline_text'].sample(100).tolist()Initialize the zero-shot classifier using Hugging Face's pipeline function. The device parameter allows you to specify whether to use CPU or GPU processing – setting device=0 enables the first available GPU for accelerated inference. The classifier automatically downloads and configures an appropriate pre-trained model, typically a version of BERT optimized for zero-shot tasks.

# Initialize classifier with GPU acceleration

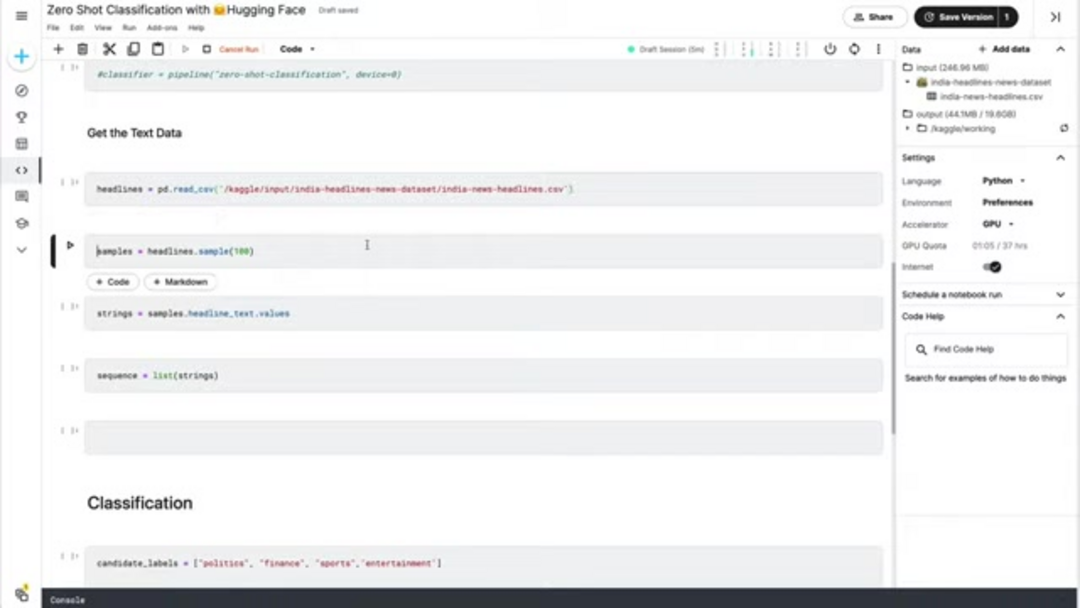

classifier = pipeline('zero-shot-classification', device=0)Classification Execution and Result Analysis

With your data prepared and classifier initialized, define your candidate labels based on the categories you want to identify. These labels represent the potential classifications for your text data. Choose labels that are mutually exclusive and comprehensively cover the expected content types in your dataset. For news categorization, typical labels might include politics, business, sports, entertainment, technology, and health.

candidate_labels = ['politics', 'business', 'sports', 'entertainment', 'technology', 'health']Execute the classification by passing your text samples and candidate labels to the classifier. The model returns probability scores for each label, indicating how strongly the text aligns with each category. You can process these results to assign the highest-probability label to each text sample or implement threshold-based filtering to exclude low-confidence classifications.

# Perform classification

classification_results = classifier(headline_samples, candidate_labels)

# Analyze and display results

for i, result in enumerate(classification_results):

top_label = result['labels'][0]

confidence = result['scores'][0]

print(f"Sample {i+1}: {headline_samples[i][:50]}...")

print(f"Predicted: {top_label} (confidence: {confidence:.3f})")

print("---")Hugging Face Pricing and Licensing

The core Hugging Face Transformers library and most pre-trained models are available under open-source licenses, primarily Apache 2.0, allowing free use for both research and commercial applications. This accessibility has been instrumental in the widespread adoption of transformer models across industries. The open-source nature enables developers to inspect, modify, and extend the codebase to meet specific requirements without licensing restrictions or costs.

For enterprise users requiring enhanced capabilities, Hugging Face offers premium services including accelerated inference APIs, dedicated expert support, and private model hosting. These services typically operate on subscription models with pricing based on usage volume and required features. The inference API provides optimized deployment infrastructure with guaranteed performance SLAs, while expert support offers direct access to Hugging Face's technical team for assistance with complex implementations and optimization challenges. These services are particularly valuable for organizations using AI model hosting solutions at scale.

Pros and Cons

Advantages

- Eliminates need for expensive and time-consuming data labeling

- Instantly adaptable to new categories without model retraining

- Rapid deployment capability for proof-of-concept and production

- Cost-effective solution for organizations with limited ML resources

- Excellent performance on general domain text classification

- Scalable across multiple languages and text types

- Continuous improvement as base models receive updates

Disadvantages

- Lower accuracy compared to supervised models with ample labeled data

- Performance variability across different domains and text types

- Dependence on quality and specificity of candidate labels

- Limited control over model behavior and decision boundaries

- Potential bias inherited from pre-training data sources

Real-World Applications and Use Cases

Zero-shot text classification finds applications across numerous industries and scenarios where rapid, flexible text categorization is valuable. In content management systems, it enables automatic tagging of articles, blog posts, and documents without manual intervention. News organizations leverage zero-shot classification to categorize incoming articles into topical sections, while e-commerce platforms use it to organize product reviews and customer feedback by theme or sentiment.

Customer service operations benefit from automatically routing support tickets and inquiries to appropriate departments based on content analysis. Social media platforms and online communities employ zero-shot classification for content moderation, identifying inappropriate material or categorizing user-generated content. Research institutions use the technique to organize academic papers and scientific literature by field or methodology. These applications demonstrate the versatility of zero-shot classification across AI agents and assistants and various business contexts.

Conclusion

Zero-shot text classification represents a significant advancement in natural language processing, democratizing access to powerful text categorization capabilities without the traditional requirement for labeled training data. By leveraging pre-trained models from Hugging Face's Transformers library, developers and organizations can quickly implement flexible classification systems that adapt to evolving needs. While the approach may not always match the precision of fully supervised methods with abundant labeled data, its flexibility, speed, and cost-effectiveness make it invaluable for numerous practical applications. As language models continue to improve, zero-shot classification capabilities will likely expand further, opening new possibilities for intelligent text processing across industries and use cases. For those exploring various AI solutions, comprehensive AI tool directories can provide additional context and alternatives.

Frequently Asked Questions

What types of text work best for zero-shot classification?

Zero-shot classification performs well with general domain text like news articles, product reviews, emails, and social media posts. Technical or highly specialized content may require domain adaptation for optimal results.

How many candidate labels should I use?

Use 4-8 well-defined, distinct labels for best performance. Too many unrelated labels can dilute results, while too few may not cover all relevant categories in your text data.

Does zero-shot always beat traditional classification?

No – with abundant, high-quality labeled data, supervised methods often achieve higher accuracy. Zero-shot excels when labeled data is scarce, categories change frequently, or rapid deployment is prioritized.

Can I improve zero-shot classification accuracy?

Yes – try different pre-trained models, refine candidate labels for clarity, use hierarchical labeling for complex categories, and consider domain-specific fine-tuning with limited labeled data when available.

How does zero-shot classification handle ambiguous text?

Zero-shot classification may struggle with ambiguous text, as it relies on semantic similarity. Using clearer candidate labels and context can help improve accuracy in such cases.