- Introduction

- The 4 Steps to Transform Your AI Workflows in 2025

- Step 1: Selecting the Right AI Model for Your Needs

- Step 2: How to Never Write a Prompt Again

- Step 3: The Key to Lasting Long-Form AI Content Systems

- Step 4: Ensure Your Agent Is Actually Working Well With the Evaluation Feature

- Tools that You Can Use to Get The Results You Need to Fix Your AI Workflows

- Pros and Cons

- Conclusion

- Frequently Asked Questions

Master AI Workflows: 4-Step Guide for 2025 Success | ToolPicker

Master AI workflows with this 4-step guide covering model selection, prompt engineering, content systems, and evaluation for 2025 success.

Introduction

Artificial intelligence is revolutionizing how businesses operate, yet many organizations struggle to implement AI workflows that deliver consistent results. This guide provides a structured four-step approach to mastering AI workflows in 2025: selecting the right models, replacing ad-hoc prompt writing with a reusable framework, building durable long-form content systems, and implementing robust evaluation processes for sustainable success.

The 4 Steps to Transform Your AI Workflows in 2025

Step 1: Selecting the Right AI Model for Your Needs

With hundreds of AI models available across various AI model hosting platforms, choosing the right one can feel overwhelming. The key is to avoid analysis paralysis by focusing on your specific requirements rather than chasing the latest model releases. Begin by identifying your primary metric: are you prioritizing output quality, cost efficiency, or processing speed? This foundational decision will immediately narrow your options and provide clear direction.

Leverage community wisdom through platforms like LM Arena, which ranks models using crowdsourced votes from users who compare anonymous model responses side by side. These leaderboards provide valuable social proof, showing which models users prefer for specific task categories. For instance, if you're building content generation workflows, you'll want to explore specialized AI writing tools that have proven successful for similar use cases.

Here's a practical approach to model selection:

- Define Your Leading Metric: Determine whether quality, cost, or speed takes precedence for your workflow. Quality-focused workflows might tolerate higher costs, while cost-sensitive operations may accept slightly lower quality outputs.

- Leverage Community Insights: Platforms like LM Arena publish leaderboards built from user votes, with separate rankings for categories such as text generation, web development, and creative tasks. These insights help you shortlist proven models before spending time and money on your own experiments.

- Start Small and Scale: Begin with cost-effective models for initial testing, then gradually upgrade as you validate performance. This approach minimizes financial risk while building confidence in your AI implementation.
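To make the "leading metric" idea concrete, here is a minimal Python sketch of a selection rule: drop any model below a quality floor, then optimize whichever metric leads. The model names and numbers are hypothetical placeholders, not real benchmark or pricing data; in practice you would fill them in from leaderboards like LM Arena, provider pricing pages, and your own latency tests.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    quality: float        # normalized quality score, 0..1 (higher is better)
    cost_per_mtok: float  # USD per million tokens (lower is better)
    latency_s: float      # median seconds per response (lower is better)


def pick_model(candidates: List[Candidate], leading_metric: str,
               min_quality: float = 0.0) -> Candidate:
    """Apply a quality floor, then optimize the single leading metric."""
    eligible = [c for c in candidates if c.quality >= min_quality]
    if not eligible:
        raise ValueError("no candidate meets the quality floor")
    if leading_metric == "quality":
        return max(eligible, key=lambda c: c.quality)
    if leading_metric == "cost":
        return min(eligible, key=lambda c: c.cost_per_mtok)
    if leading_metric == "speed":
        return min(eligible, key=lambda c: c.latency_s)
    raise ValueError(f"unknown leading metric: {leading_metric!r}")


# Hypothetical candidates for illustration only.
models = [
    Candidate("model-a", quality=0.92, cost_per_mtok=15.0, latency_s=4.0),
    Candidate("model-b", quality=0.85, cost_per_mtok=3.0, latency_s=1.5),
    Candidate("model-c", quality=0.70, cost_per_mtok=0.5, latency_s=0.8),
]

print(pick_model(models, "quality").name)                # model-a
print(pick_model(models, "cost", min_quality=0.8).name)  # model-b
print(pick_model(models, "speed").name)                  # model-c
```

The quality floor is one way to apply the "start small and scale" advice: a cost-led workflow can still reject models below an acceptable baseline, and you raise the floor as you validate results.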

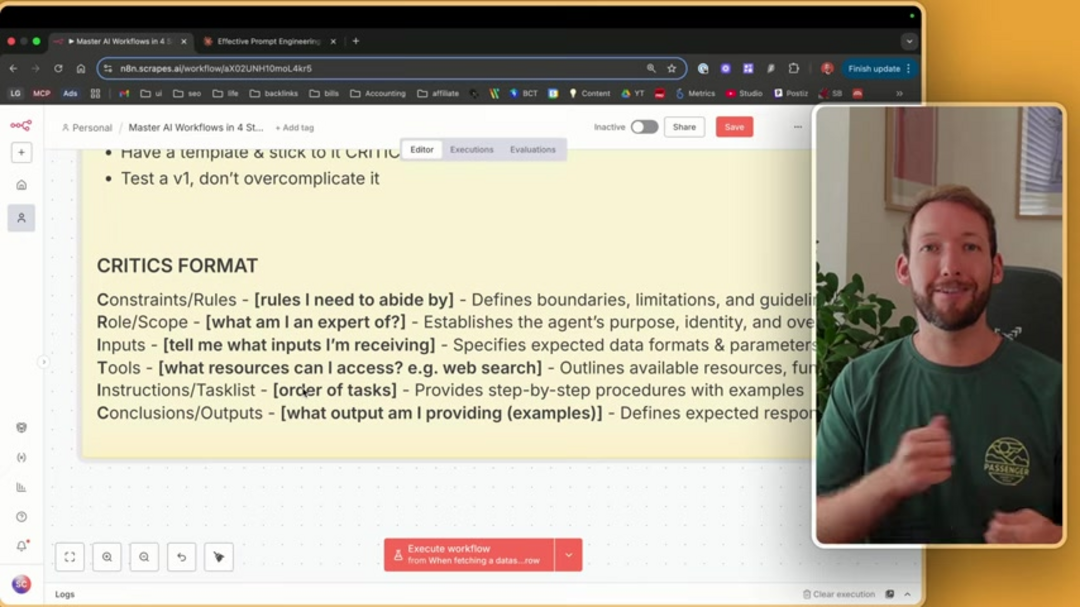

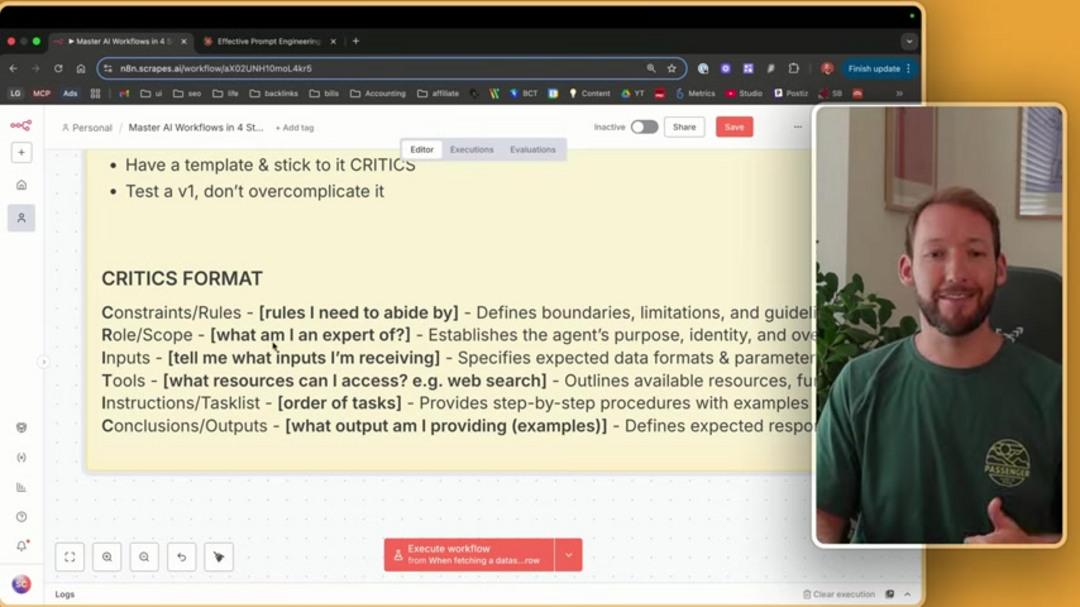

Step 2: How to Never Write a Prompt Again

Manual prompt writing consumes significant time and often produces inconsistent results. A more reliable approach is to adopt a structured framework like CRITICS, developed through collaboration between leading AI research organizations. This methodology turns ad-hoc prompt creation into a systematic, reusable process that keeps outputs consistent across your AI prompt tools and interactions.

The CRITICS framework provides comprehensive guidance for AI interactions:

- Constraints/Rules: Establish clear boundaries and operational guidelines that the AI must follow, preventing unwanted deviations from your intended outcomes.

- Role/Scope: Define the AI's expertise domain and overall objectives, ensuring it operates within appropriate contextual boundaries.

- Inputs: Specify expected data formats, parameters, and provide examples to guide the AI's processing approach.

- Tools: Outline available resources like web search capabilities, database access, or specialized functions the AI can leverage.

- Instructions/Tasklist: Spell out the steps in order, including which model or function to call at each step in complex workflows.

- Conclusions/Outputs: Define expected response formats and deliverables with concrete examples of successful outputs.

- Solutions: Establish fallback procedures for when initial approaches don't yield expected results, ensuring workflow continuity.
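One way to stop writing prompts from scratch is to store the seven CRITICS fields as a reusable template and render them into a system prompt on demand. The Python sketch below is an assumption about how you might structure that; the labels mirror the list above, but the layout (a label line followed by its content) is an illustrative choice rather than something the framework prescribes, and the example field values are hypothetical.

```python
from dataclasses import dataclass, fields

# Display labels for each CRITICS field, in framework order.
LABELS = {
    "constraints": "Constraints/Rules",
    "role": "Role/Scope",
    "inputs": "Inputs",
    "tools": "Tools",
    "instructions": "Instructions/Tasklist",
    "conclusions": "Conclusions/Outputs",
    "solutions": "Solutions",
}


@dataclass
class CriticsPrompt:
    constraints: str = ""
    role: str = ""
    inputs: str = ""
    tools: str = ""
    instructions: str = ""
    conclusions: str = ""
    solutions: str = ""

    def render(self) -> str:
        """Join the non-empty fields into labeled sections, in CRITICS order."""
        sections = []
        for f in fields(self):  # fields() preserves declaration order
            value = getattr(self, f.name).strip()
            if value:  # skip empty fields instead of emitting blank headers
                sections.append(f"{LABELS[f.name]}:\n{value}")
        return "\n\n".join(sections)


# Hypothetical example values for a copywriting task.
prompt = CriticsPrompt(
    constraints="Never invent statistics or quotes.",
    role="You are a copywriter for a B2B software company.",
    inputs="A product brief as JSON with 'name' and 'features' keys.",
    instructions="1. Read the brief. 2. Draft three headline options.",
    conclusions="Return a JSON list of exactly three strings.",
    solutions="If 'name' is missing, ask for it instead of guessing.",
)
print(prompt.render())
```

Once a template like this exists, you only edit the fields that change between tasks; you could also ask a model to draft the field values for a new task and review them, rather than writing each prompt by hand.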

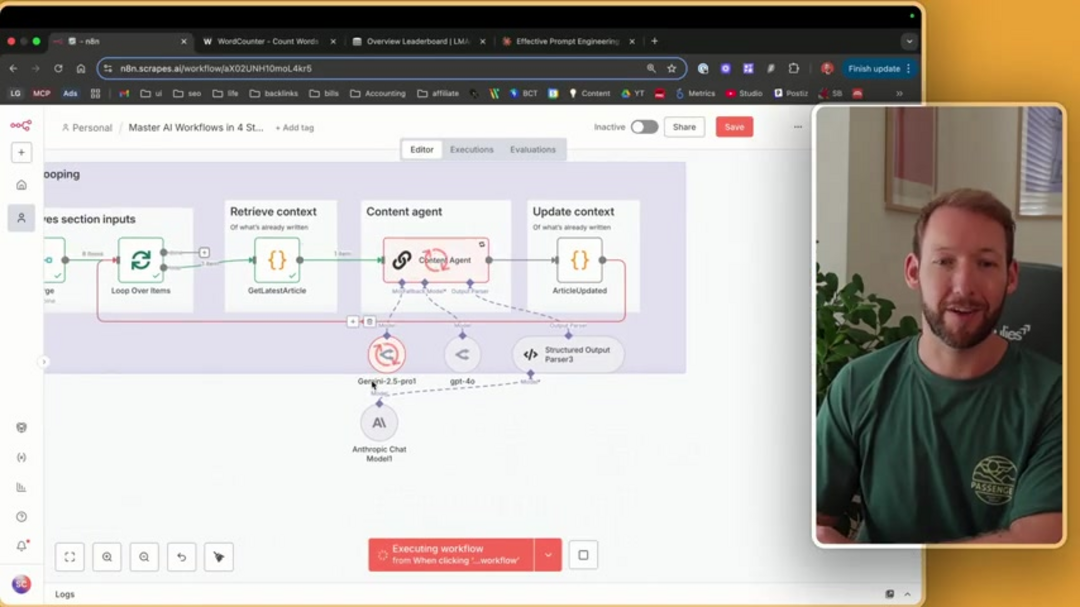

Step 3: The Key to Lasting Long-Form AI Content Systems

Many AI content systems fail when generating extensive documents because they lose contextual coherence across sections. Successful long-form content generation requires strategic segmentation and context management. Break large projects into manageable sections while maintaining narrative flow through careful context passing between segments.

Effective long-form content generation involves three critical components:

- Word Count Management: Set section lengths that fit within your chosen model's context window and maximum output length, so sections are not cut off mid-thought.

- Separate Structure and Writing Tasks: First generate a comprehensive outline, then flesh out each section individually while maintaining overall coherence.

- Context Preservation: Pass relevant context between sections to maintain consistency in tone, style, and factual accuracy throughout the document.
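The three components above can be sketched as a simple loop: budget words per section, produce the outline first (passed in here), then write each section while carrying notes forward from earlier sections. In this Python sketch, `generate` is a hypothetical stand-in for whatever model call your stack uses, the 0.75 words-per-token ratio is a rough English-language rule of thumb rather than a fixed constant, and carrying forward the first 200 characters of each section is a deliberately naive substitute for a proper model-written summary.

```python
from typing import Callable, List

WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English; an assumption


def section_word_budget(total_words: int, n_sections: int,
                        max_output_tokens: int) -> int:
    """Split the target length evenly, capped to fit one call's output limit."""
    per_section = total_words // n_sections
    # Keep ~20% headroom below the model's output limit to avoid truncation.
    cap = int(max_output_tokens * WORDS_PER_TOKEN) * 4 // 5
    return min(per_section, cap)


def write_long_form(topic: str, outline: List[str], total_words: int,
                    max_output_tokens: int,
                    generate: Callable[[str], str]) -> str:
    """Write one section per outline heading, passing context forward."""
    budget = section_word_budget(total_words, len(outline), max_output_tokens)
    sections: List[str] = []
    context_notes = ""  # compact notes on what earlier sections covered
    for heading in outline:
        prompt = (
            f"Topic: {topic}\n"
            f"Full outline: {'; '.join(outline)}\n"
            f"Earlier sections (notes): {context_notes or 'none'}\n"
            f"Write only the section '{heading}' in about {budget} words, "
            "matching the tone and terminology of earlier sections."
        )
        text = generate(prompt)
        sections.append(f"{heading}\n\n{text}")
        # Naive context passing: keep a short excerpt, not the full text.
        context_notes += f" [{heading}] {text[:200]}"
    return "\n\n".join(sections)


calls: List[str] = []


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call; records prompts for inspection."""
    calls.append(prompt)
    return "Draft text for this section."


doc = write_long_form("AI workflows", ["Intro", "Setup", "Wrap-up"],
                      total_words=3000, max_output_tokens=1000,
                      generate=fake_generate)
print(section_word_budget(3000, 3, 1000))  # 600
```

Here the even split (1,000 words per section) exceeds what a 1,000-token output limit can safely hold, so the budget is capped at 600 words; in a real workflow you would add more sections rather than accept a shorter document.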

Even advanced models can drift from their instructions over long outputs. Building checkpoints into your workflow, such as automated checks on each section before the next one is generated, provides quality control without constant manual intervention. This approach is particularly valuable when working with AI agents and assistants that handle complex, multi-step content creation tasks.
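A checkpoint can be as simple as a function that inspects each section before the workflow moves on, feeds any failures back into a retry, and escalates to a person only when retries run out. The Python sketch below assumes two illustrative checks (length within a tolerance and presence of required terms) and a hypothetical `generate` callable; real checkpoints would use whatever criteria matter for your content.

```python
from typing import Callable, List, Tuple


def check_section(text: str, target_words: int, required_terms: List[str],
                  tolerance: float = 0.3) -> List[str]:
    """Return human-readable problems (an empty list means the section passes)."""
    problems = []
    n_words = len(text.split())
    if abs(n_words - target_words) > tolerance * target_words:
        problems.append(f"length is {n_words} words, target is {target_words}")
    for term in required_terms:
        if term.lower() not in text.lower():
            problems.append(f"missing required term: {term}")
    return problems


def generate_with_checkpoint(prompt: str, generate: Callable[[str], str],
                             target_words: int, required_terms: List[str],
                             max_retries: int = 2) -> Tuple[str, List[str]]:
    """Retry with feedback until checks pass; return leftover problems if not."""
    feedback = ""
    text, problems = "", ["not generated"]
    for _ in range(max_retries + 1):
        text = generate(prompt + feedback)
        problems = check_section(text, target_words, required_terms)
        if not problems:
            break
        # Feed the failed checks into the next attempt.
        feedback = ("\n\nFix these issues from the previous draft: "
                    + "; ".join(problems))
    return text, problems  # non-empty problems => route to human review
```

Returning the remaining problems instead of raising keeps the workflow running while still flagging the sections a person should review.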

Step 4: Ensure Your Agent Is Actually Working Well With the Evaluation Feature

Regular evaluation is the cornerstone of sustainable AI workflow success. Without systematic testing, you risk deploying underperforming systems that deliver inconsistent results. Implement comprehensive evaluation protocols that assess performance across diverse inputs and edge cases, ensuring reliability in real-world scenarios.

Key evaluation strategies include:

- Consistency Testing: Run the same input several times and check that the outputs agree closely enough for your use case, then address sources of variability such as sampling temperature or ambiguous instructions.

- Edge Case Analysis: Test your workflows with challenging inputs to understand performance boundaries and limitations.

- Confidence-Based Iteration: Make incremental changes while monitoring impact across all performance metrics, avoiding regression in previously functional areas.
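The first two strategies translate into small test helpers. This Python sketch assumes your workflow can be called as a function `run(input) -> output` (a hypothetical wrapper around your model or agent). Consistency is measured as the share of repeated runs that match the most common output, which only makes sense for constrained outputs such as labels or extracted fields; for free-form text you would compare a normalized or extracted value instead of the raw string. The toy sentiment function exists only so the sketch runs without a model.

```python
from collections import Counter
from typing import Callable, Dict, List


def consistency_rate(run: Callable[[str], str], prompt: str, n: int = 5) -> float:
    """Share of n runs that match the most common output (1.0 = fully consistent)."""
    outputs = [run(prompt).strip() for _ in range(n)]
    top_count = Counter(outputs).most_common(1)[0][1]
    return top_count / n


def edge_case_failures(run: Callable[[str], str],
                       cases: Dict[str, Callable[[str], bool]]) -> List[str]:
    """Run each edge-case input and return the inputs whose output fails its check."""
    failures = []
    for text, check in cases.items():
        try:
            passed = check(run(text))
        except Exception:  # a crash on odd input counts as a failure
            passed = False
        if not passed:
            failures.append(text)
    return failures


# Toy sentiment "workflow" standing in for a real model call.
def naive_sentiment(text: str) -> str:
    return "positive" if "good" in text.lower() else "negative"


print(consistency_rate(naive_sentiment, "This is good", n=5))  # 1.0
print(edge_case_failures(naive_sentiment, {
    "": lambda out: out in {"positive", "negative"},
    "not good at all": lambda out: out == "negative",
}))  # ['not good at all']
```

The negation case shows why edge-case analysis matters: a workflow can be perfectly consistent and still be consistently wrong on inputs the happy-path tests never cover.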

Platforms like n8n provide built-in evaluation features: you run a workflow against a set of test inputs and score the outputs, which lets you compare performance metrics across different configurations before deploying a change. When integrating with various AI automation platforms, consistent evaluation becomes even more critical for maintaining system reliability.
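Whatever platform runs your evaluations, the core comparison is the same: score a baseline and a candidate configuration on the same test cases, then look at per-case changes as well as the overall pass rate. The Python sketch below is a generic illustration, not n8n's evaluation API; the test cases, the two "configurations" (dictionaries of canned answers), and the shipping rule are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

TestCase = Tuple[str, Callable[[str], bool]]  # (input, pass/fail check)


def compare_configs(baseline: Callable[[str], str],
                    candidate: Callable[[str], str],
                    cases: List[TestCase]) -> Dict[str, object]:
    """Score both configurations per case and list regressions and fixes."""
    base = [bool(check(baseline(inp))) for inp, check in cases]
    cand = [bool(check(candidate(inp))) for inp, check in cases]
    inputs = [inp for inp, _ in cases]
    regressions = [i for i, b, c in zip(inputs, base, cand) if b and not c]
    fixes = [i for i, b, c in zip(inputs, base, cand) if not b and c]
    return {
        "baseline_pass_rate": sum(base) / len(cases),
        "candidate_pass_rate": sum(cand) / len(cases),
        "regressions": regressions,
        "fixes": fixes,
        # Conservative rule: ship only if nothing that worked before broke.
        "ship": not regressions and sum(cand) >= sum(base),
    }


cases: List[TestCase] = [
    ("2+2", lambda out: out.strip() == "4"),
    ("capital of France", lambda out: "Paris" in out),
    ("empty input", lambda out: out != ""),
]
baseline_answers = {"2+2": "4", "capital of France": "Paris", "empty input": ""}
candidate_answers = {"2+2": "4", "capital of France": "Lyon", "empty input": "n/a"}

report = compare_configs(baseline_answers.get, candidate_answers.get, cases)
print(report["regressions"], report["fixes"], report["ship"])
# ['capital of France'] ['empty input'] False
```

Both configurations pass two of three cases, so the aggregate score alone would hide the regression; the per-case view is what makes confidence-based iteration possible.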

Tools that You Can Use to Get The Results You Need to Fix Your AI Workflows

The right tool selection can dramatically improve your AI workflow outcomes. Beyond the major platform providers, specialized tools address specific workflow challenges. LM Arena offers model comparison capabilities, while various AI chatbot platforms provide testing environments for conversational workflows. For developers building custom solutions, comprehensive AI APIs and SDKs enable seamless integration into existing systems.

Pros and Cons

Advantages

- Significantly reduces manual intervention in content creation

- Enables more consistent output quality across generated content

- Dramatically cuts down time spent on repetitive tasks

- Provides scalable solutions for growing content needs

- Offers cost-effective alternatives to human content creation

- Helps maintain a consistent brand voice across materials

- Allows rapid adaptation to changing content requirements

Disadvantages

- Requires initial setup time and technical understanding

- May produce generic content without proper customization

- Needs continuous monitoring and quality assurance

- Potential for context loss in complex, multi-step workflows

- Dependent on model availability and API reliability

Conclusion

Mastering AI workflows in 2025 requires a systematic approach that balances technological capabilities with practical implementation strategies. By following this four-step framework (strategic model selection, structured prompt engineering, robust content system design, and continuous evaluation), organizations can build sustainable AI workflows that deliver consistent value. The key lies in starting with clear objectives, leveraging community insights, and maintaining rigorous quality control throughout implementation. As AI technology continues to evolve, these foundational principles will help keep your workflows effective and adaptable to emerging opportunities.

Frequently Asked Questions

What is an AI workflow?

An AI workflow is a structured sequence of automated steps using artificial intelligence to complete specific tasks, combining data input, AI model execution, and output processing for efficient operations.

Why do AI workflows often fail to deliver results?

Common failures stem from incorrect model selection, poorly defined prompts, lack of contextual awareness, and insufficient evaluation processes that don't catch performance issues early.

What metrics matter most when choosing AI models?

Focus on your leading metric: output quality, cost efficiency, or processing speed. Your specific use case determines which metric takes priority in model selection.

How can I ensure consistent AI workflow performance?

Build durable systems with context retrieval, segment complex tasks, implement regular evaluation protocols, and maintain comprehensive testing across diverse inputs and edge cases.

What's the best way to stay updated on AI advancements?

Follow industry resources like LM Arena for model comparisons, monitor research publications from leading AI labs, and participate in community forums discussing practical implementations.