Annotation

- Introduction

- Understanding LLM Workflows

- What is a Workflow?

- Limitations of Traditional Automation

- The Rise of LLM Workflows for Enhanced Automation

- Common Design Patterns in LLM Workflows

- Large Language Model Driven Flows

- The Pitfalls of Not Defining a Solid Workflow

- How to Prevent High Computing Costs

- Implementing Your Own LLM Workflow

- Pros and Cons

- Conclusion

- Frequently Asked Questions

Mastering LLM Workflows: AI Automation & Agent Implementation Guide

Mastering LLM workflows enables efficient automation of complex tasks using AI agents, covering design patterns, implementation, and cost

Introduction

Large Language Model workflows represent the next evolution in automation, transforming how businesses handle complex tasks through intelligent AI systems. By combining structured processes with the creative capabilities of LLMs, organizations can automate everything from customer service to content creation while maintaining flexibility and scalability.

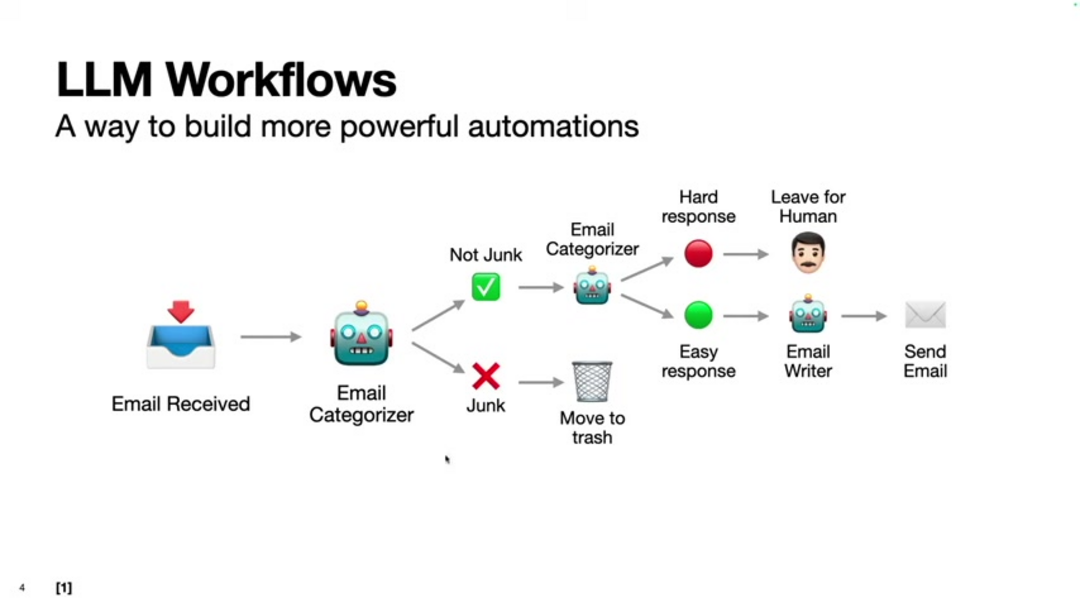

Understanding LLM Workflows

At their core, LLM workflows are systematic processes that leverage artificial intelligence to accomplish specific objectives through a sequence of well-defined steps. Unlike traditional automation that relies on rigid rules, these workflows incorporate the contextual understanding and generative capabilities of advanced language models.

What is a Workflow?

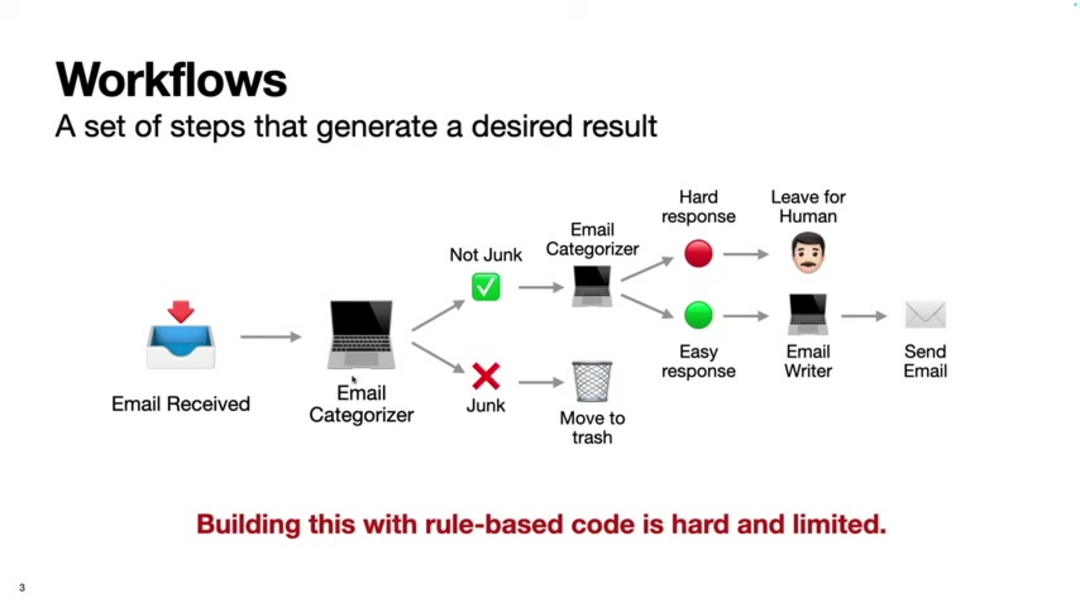

A workflow represents a structured series of actions designed to achieve a particular outcome efficiently. When properly defined, workflows enable consistent results while allowing for optimization and automation. The foundation of any effective workflow begins with clear objectives and progresses through logical steps that can involve human intervention, automated processes, or a combination of both.

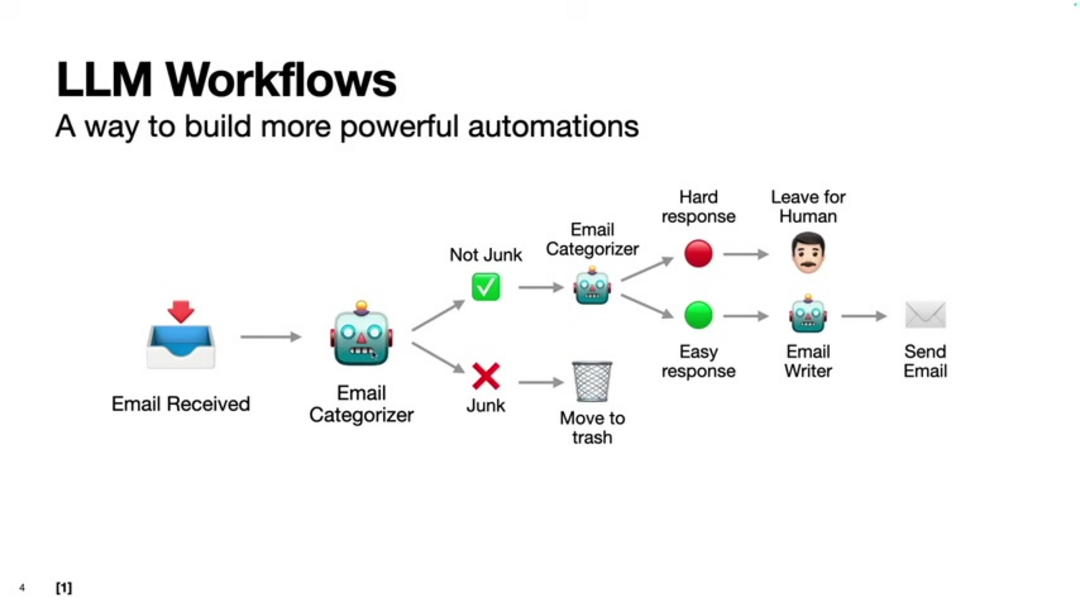

Consider the example of managing incoming emails: a basic workflow might involve receiving messages, analyzing content, making classification decisions, and taking appropriate actions. With AI email assistants, this process becomes automated while maintaining contextual awareness that traditional systems lack.

Key characteristics of effective workflows include goal orientation, sequential processing, and repeatability. Each workflow should begin with clearly defined objectives, follow logical steps in proper order, and produce consistent results when executed multiple times. This structured approach forms the foundation for implementing sophisticated LLM automation systems.
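The email example above can be sketched as a fixed sequence of small functions. This is only an illustration: the `Email` type, the feature names, the categories, and the actions are all hypothetical and not part of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Email:
    sender: str
    subject: str
    body: str


def analyze(email: Email) -> dict:
    """Step 2: extract simple features from the message."""
    text = f"{email.subject} {email.body}".lower()
    return {
        "mentions_invoice": "invoice" in text,
        "is_question": "?" in text,
    }


def classify(features: dict) -> str:
    """Step 3: turn the features into a category."""
    if features["mentions_invoice"]:
        return "billing"
    if features["is_question"]:
        return "support"
    return "other"


def act(email: Email, category: str) -> str:
    """Step 4: choose an action for the category."""
    actions = {
        "billing": "forward to finance",
        "support": "open a support ticket",
        "other": "archive",
    }
    return f"{actions[category]} ({email.sender})"


def handle(email: Email) -> str:
    """Run the steps in a fixed order; step 1 (receiving) is the call itself."""
    return act(email, classify(analyze(email)))
```

Because every step is a plain function with a clear input and output, the same email always produces the same result, which is the repeatability described above. In an LLM workflow, `analyze` or `classify` would typically be replaced by a model call while the surrounding structure stays the same.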

Limitations of Traditional Automation

Conventional automation systems often struggle with flexibility and adaptability due to their rule-based nature. These systems require explicit programming for every possible scenario, making them difficult to scale and maintain as complexity increases. Traditional approaches typically excel with structured data but falter when faced with unstructured information like natural language or complex documents.

The primary challenges include system rigidity that prevents adaptation to changing conditions, scalability issues as scenario complexity grows, inability to process unstructured data effectively, and high development and maintenance costs. For instance, a rule-based email classifier might identify spam based on specific keywords, but spammers can easily circumvent these rules by altering their tactics.
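A minimal sketch of the keyword-based classifier mentioned above shows how brittle such rules can be. The keyword list is a made-up example, not a real spam rule set.

```python
# Hypothetical rule set for illustration only.
SPAM_KEYWORDS = {"free money", "winner", "click here"}


def is_spam(message: str) -> bool:
    """Flag a message if it contains any known spam phrase."""
    text = message.lower()
    return any(keyword in text for keyword in SPAM_KEYWORDS)


print(is_spam("You are a WINNER, click here"))  # True
print(is_spam("You are a W1NNER, cl1ck h3re"))  # False
```

Swapping a few letters for digits is enough to slip past the rules, and every new trick requires another hand-written rule.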

The Rise of LLM Workflows for Enhanced Automation

Large Language Models have revolutionized automation by introducing systems that can understand context, process unstructured data, and generate creative content. This technological advancement enables automation of tasks previously considered impossible for traditional systems, opening new possibilities across various industries and applications.

LLM workflows provide significant advantages including increased flexibility to handle diverse data types and changing conditions, improved scalability to manage large volumes of information, enhanced creative capabilities for content generation, and reduced development costs through pre-trained models. These benefits make LLM workflows particularly valuable for AI automation platforms that require both structure and adaptability.

Common Design Patterns in LLM Workflows

Several established patterns have emerged for structuring LLM workflows effectively. Understanding these patterns helps developers create robust and scalable AI systems that can handle complex tasks efficiently while maintaining control over the automation process.

Key design patterns include:

- Chaining: multiple LLM calls run in sequence, with each call's output feeding into the next

- Routing: an initial analysis of the input sends each task to a specialized agent

- Parallel processing: multiple LLM calls work simultaneously on independent subtasks

- Orchestrator-worker architectures: a central component coordinates specialized AI components

- Evaluator-optimizer systems: outputs are refined through iterative improvement

These patterns form the foundation for building sophisticated AI agents and assistants.
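The first three patterns can be sketched in a few lines each. The code below is a rough illustration under stated assumptions: `stub_llm` is a hypothetical stand-in for a real model call, and the function names `chain`, `route`, and `parallel` are invented for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

LLM = Callable[[str], str]


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model call; it only wraps the prompt it received."""
    return f"<{prompt}>"


def chain(text: str, steps: list[str], llm: LLM = stub_llm) -> str:
    """Chaining: each step's output becomes the next step's input."""
    for instruction in steps:
        text = llm(f"{instruction}: {text}")
    return text


def route(text: str, handlers: dict[str, Callable[[str], str]],
          pick: Callable[[str], str]) -> str:
    """Routing: a classifier picks which specialized handler sees the input."""
    return handlers[pick(text)](text)


def parallel(text: str, subtasks: list[str], llm: LLM = stub_llm) -> list[str]:
    """Parallel processing: independent subtasks run concurrently, order is kept."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda task: llm(f"{task}: {text}"), subtasks))


print(chain("hi", ["summarize", "translate"]))
# <translate: <summarize: hi>>
```

In a real system the `pick` function in `route` would often be an LLM call itself, and the handlers would be separate prompts or agents. Threads suit this sketch because model calls are usually network-bound.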

Large Language Model Driven Flows

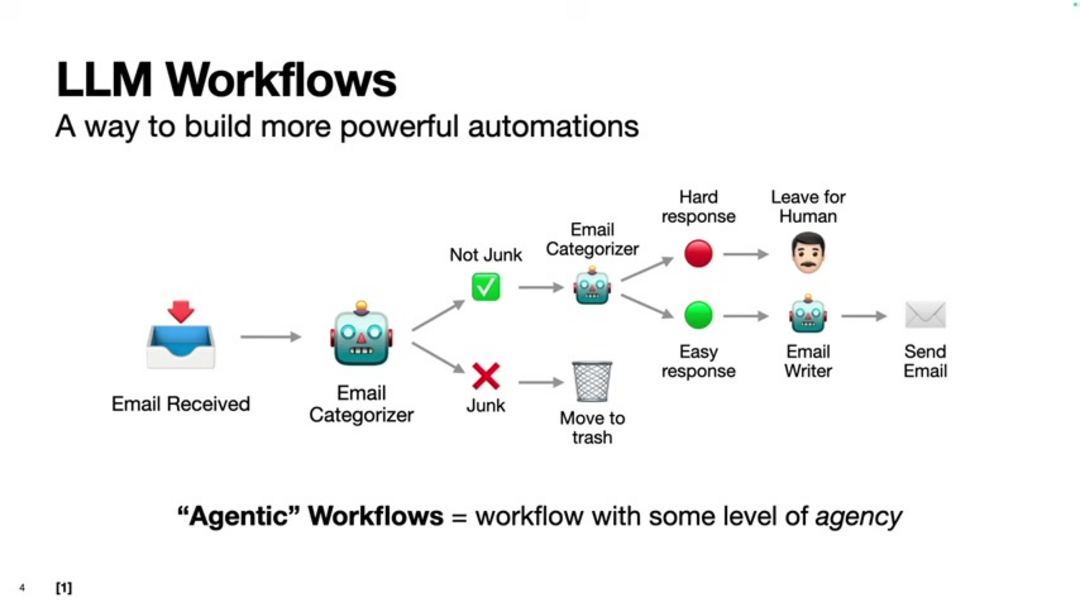

LLM-driven flows represent a paradigm shift from predetermined workflows to dynamic, context-aware systems. Instead of following fixed sequences, these flows allow the AI to determine appropriate next steps based on real-time analysis of the situation and available information.

The primary advantages include superior adaptability to changing conditions, enhanced contextual awareness for more intelligent decisions, and greater flexibility across diverse scenarios. Two prominent patterns within this category are orchestrator-worker systems where a central LLM coordinates specialized workers, and evaluator-optimizer setups where outputs are continuously assessed and refined. These approaches are particularly effective for conversational AI tools that require natural, context-aware interactions.
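The two patterns named above can be sketched as follows. Everything here is a simplified assumption: the planner, workers, evaluator, and improver are passed in as plain functions, and the `stub_*` helpers stand in for what would be model calls in practice.

```python
from typing import Callable

Plan = list[tuple[str, str]]  # (worker name, subtask) pairs


def orchestrate(task: str, plan: Callable[[str], Plan],
                workers: dict[str, Callable[[str], str]]) -> list[str]:
    """Orchestrator-worker: the planner decides at run time which workers to call."""
    return [workers[name](subtask) for name, subtask in plan(task)]


def refine(draft: str, evaluate: Callable[[str], tuple[bool, str]],
           improve: Callable[[str, str], str], max_rounds: int = 3) -> str:
    """Evaluator-optimizer: loop until the evaluator accepts or the budget runs out."""
    for _ in range(max_rounds):
        accepted, feedback = evaluate(draft)
        if accepted:
            break
        draft = improve(draft, feedback)
    return draft


# Hypothetical stand-ins for model calls.
def stub_plan(task: str) -> Plan:
    return [("research", f"facts about {task}"), ("write", f"summary of {task}")]


def stub_evaluate(draft: str) -> tuple[bool, str]:
    return len(draft) <= 5, "shorten it"


def stub_improve(draft: str, feedback: str) -> str:
    return draft[:-2]
```

The `max_rounds` cap matters: without it, an evaluator that never accepts would keep triggering new model calls, which ties directly into the cost concerns discussed in the next section.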

The Pitfalls of Not Defining a Solid Workflow

Without proper workflow design, LLM implementations can become inefficient and cost-prohibitive. Larger models, longer prompts, and multi-step flows all increase the compute consumed per task, so costs can climb quickly, making careful planning essential for practical deployment and sustainable operation.

Inefficient workflow design often leads to excessive computational costs, particularly when dealing with edge cases or long-tail scenarios. Proper planning helps identify where traditional code, machine learning, or LLM prompting provides the most efficient solution for each aspect of a system.
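A back-of-the-envelope estimate makes the cost effect concrete. The sketch below assumes token-based pricing; the call counts, token counts, and prices are placeholders chosen for the arithmetic, not real rates from any provider.

```python
def estimate_cost(calls: int, input_tokens: int, output_tokens: int,
                  usd_per_million_input: float,
                  usd_per_million_output: float) -> float:
    """Estimated spend in USD for a number of identical model calls."""
    tokens_cost = (input_tokens * usd_per_million_input
                   + output_tokens * usd_per_million_output)
    return calls * tokens_cost / 1_000_000


# Placeholder figures: 10,000 tasks, 1,500 input and 300 output tokens per call.
single_call = estimate_cost(10_000, 1_500, 300, 2.0, 8.0)
three_step_chain = estimate_cost(3 * 10_000, 1_500, 300, 2.0, 8.0)

print(single_call)       # 54.0
print(three_step_chain)  # 162.0
```

Under these assumptions, turning one call into a three-step chain triples the bill, which is why it helps to decide early which steps genuinely need a model.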

How to Prevent High Computing Costs

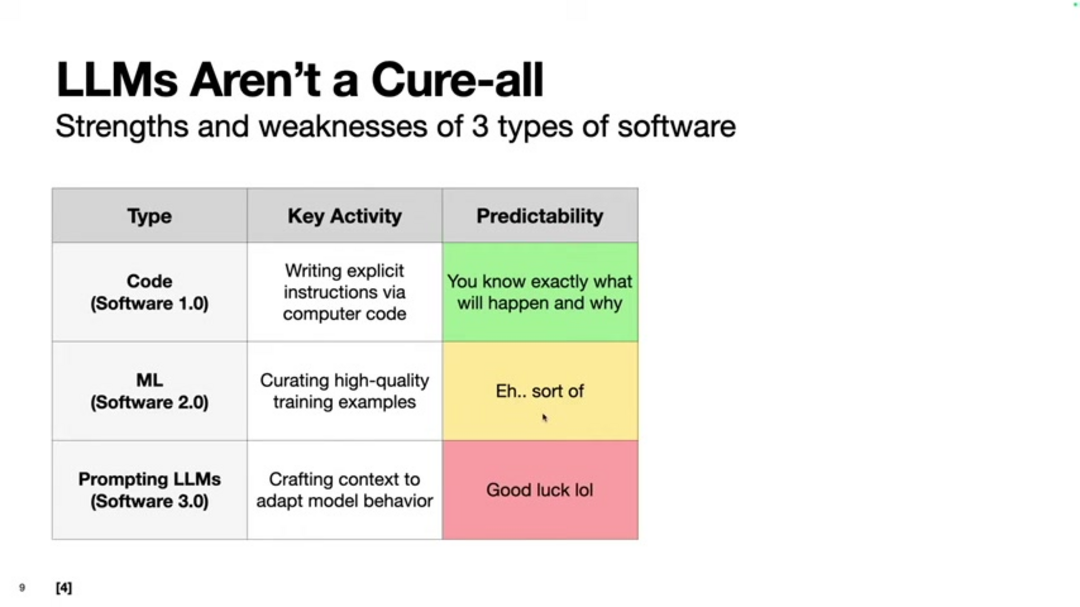

Understanding the strengths and limitations of different software approaches is crucial for cost-effective LLM implementation. Andrej Karpathy's framework distinguishes between traditional code (Software 1.0), machine learning systems (Software 2.0), and LLM prompting (Software 3.0), each with distinct characteristics and appropriate use cases.

By strategically combining these approaches, developers can create systems that leverage the predictability of traditional code where appropriate while utilizing LLMs for tasks requiring flexibility and creativity. This hybrid approach is fundamental for effective AI APIs and SDKs that balance performance with computational efficiency.
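One way to picture the hybrid approach is a function that tries cheap, predictable code first and only falls back to a model when that fails. This is a sketch under assumptions: the order-number format and the `llm_fallback` callable are hypothetical.

```python
import re
from typing import Callable, Optional

# Hypothetical format: the word "order", an optional "#", then 5+ digits.
ORDER_ID = re.compile(r"\border\s*#?(\d{5,})\b", re.IGNORECASE)


def extract_order_id(
    message: str,
    llm_fallback: Callable[[str], Optional[str]],
) -> tuple[Optional[str], str]:
    """Return (order id, source). Try a regex first; call the model only on a miss."""
    match = ORDER_ID.search(message)
    if match:
        return match.group(1), "code"
    return llm_fallback(message), "llm"


print(extract_order_id("Where is order #123456?", lambda m: None))
# ('123456', 'code')
```

Messages that follow the expected format never reach the model, so the LLM cost is paid only for the unusual cases that traditional code cannot handle.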

Implementing Your Own LLM Workflow

Getting started with LLM workflow implementation requires proper environment setup and understanding of available tools. The OpenAI Agents SDK provides a solid foundation for building sophisticated AI systems that can handle complex tasks through well-structured workflows.

Essential setup steps include installing Python and necessary libraries, obtaining API keys for LLM access, configuring environment variables securely, and creating instruction files that guide agent behavior. This foundation enables developers to build everything from simple automation scripts to complex AI chatbot systems with sophisticated workflow capabilities.
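The setup steps above might look roughly like this in Python. Treat it as a sketch rather than a reference implementation: the helper names, the agent name, and the instruction-file layout are assumptions made for this example. The SDK calls shown (`Agent`, `Runner.run_sync`, `final_output`) come from the `openai-agents` package, which reads the key from the `OPENAI_API_KEY` environment variable; check the current SDK documentation before relying on them.

```python
import os
from pathlib import Path


def require_api_key(var: str = "OPENAI_API_KEY") -> str:
    """Read the key from the environment instead of hard-coding it."""
    key = os.environ.get(var)
    if not key:
        raise RuntimeError(f"Set {var} before running the agent")
    return key


def load_instructions(path: str) -> str:
    """Load an instruction file: plain text that guides the agent's behavior."""
    return Path(path).read_text(encoding="utf-8").strip()


def run_agent(instructions_path: str, user_input: str) -> str:
    """Build an agent from the instruction file and run it once."""
    # Imported lazily so the helpers above work without the SDK installed.
    from agents import Agent, Runner  # pip install openai-agents

    require_api_key()  # fail early with a clear message if the key is missing
    agent = Agent(
        name="Email triage",  # hypothetical agent name
        instructions=load_instructions(instructions_path),
    )
    result = Runner.run_sync(agent, user_input)
    return result.final_output
```

Keeping the instructions in a separate file means agent behavior can be adjusted without touching the code, and failing early on a missing key avoids a confusing error in the middle of a run.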

Pros and Cons

Advantages

- Exceptional flexibility in handling unstructured data and changing conditions

- Easy scalability from small tasks to enterprise-level applications

- Advanced content creation capabilities for marketing and communications

- Reduced development and maintenance costs compared to custom systems

- Contextual understanding that can be strengthened by supplying more relevant context or fine-tuning data

- Natural language interface that requires minimal technical expertise

- Continuous improvement through model updates and fine-tuning

Disadvantages

- Output unpredictability that challenges deterministic requirements

- High computational costs for complex models and large-scale deployment

- Potential confusion with edge cases and ambiguous inputs

- Limited control over specific outputs compared to traditional programming

- Dependency on API availability and potential service interruptions

Conclusion

LLM workflows represent a transformative approach to automation that combines the structure of traditional workflows with the flexibility and intelligence of advanced AI systems. By understanding design patterns, implementation strategies, and cost considerations, organizations can leverage these technologies to automate complex tasks while maintaining control and efficiency. As the field continues to evolve, mastering LLM workflows will become increasingly essential for businesses seeking competitive advantage through intelligent automation.

Frequently Asked Questions

What are the main benefits of using LLM workflows for automation?

LLM workflows offer superior flexibility in handling unstructured data, easy scalability, reduced development costs, and contextual understanding that traditional automation lacks, enabling more intelligent and adaptive systems.

What is the difference between chain workflows and LLM-driven workflows?

Chain workflows run a predefined sequence of LLM calls, while LLM-driven workflows allow dynamic decision-making where the AI determines the next steps based on context, providing greater adaptability and resilience.

How do I get started with OpenAI's Agents SDK?

Install Python, then install the OpenAI Agents SDK with pip (the package is named `openai-agents`). After that, obtain an API key, set up environment variables, and create instruction files to define agent behavior and tools for specific tasks.

What are the most common use cases for AI agents?

AI agents excel at email summarization, automated content creation, customer service chatbots, intelligent task management, data analysis, and complex decision-making processes requiring contextual understanding.

What are Software 1.0, 2.0, and 3.0 in an AI context?

Software 1.0 refers to traditional code, 2.0 to machine learning systems, and 3.0 to LLM prompting, each offering different approaches for automation with varying flexibility and cost.