AI Meeting Transcription: Whisper & Llama Setup Guide for Automated Notes

Learn how to set up AI-powered meeting transcription with Whisper and Llama to automatically convert audio into text and generate summaries.

Introduction

Struggling with hours of unstructured meeting recordings? This guide shows how to use OpenAI's Whisper and Meta's Llama to automatically transcribe and summarize meetings in any of the nearly 100 languages Whisper supports. The pipeline turns audio and video recordings into structured notes that capture decisions and action items, reducing the manual effort of meeting documentation.

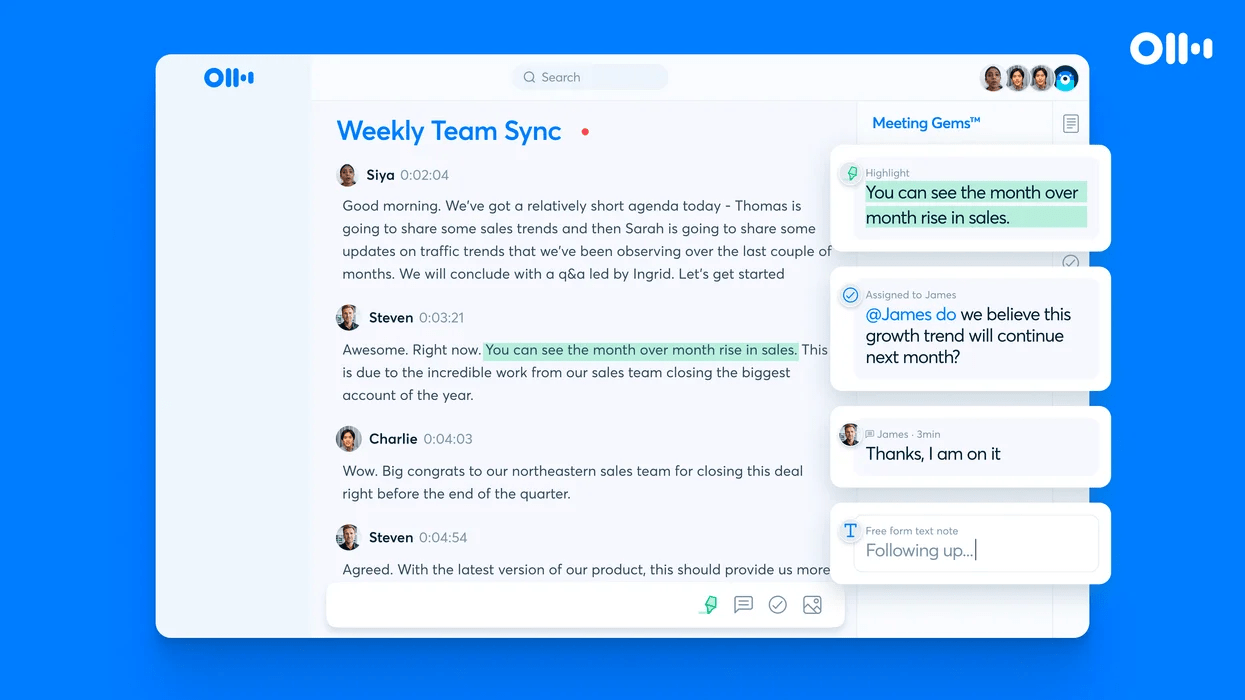

Unlocking AI-Powered Meeting Transcription

In today's fast-paced business environment, meetings remain essential for collaboration and decision-making across organizations. However, lengthy, unstructured recordings are hard to manage, which often leads to missed insights and wasted hours. Traditional manual transcription is not only time-consuming but also prone to human error and inconsistency. This guide introduces an automated approach based on AI speech recognition that produces consistent results and saves time, with accuracy that still depends on audio quality.

The Challenge of Modern Meeting Documentation

Modern teams face significant obstacles when dealing with meeting recordings. Manual transcription typically requires 4-6 hours for every hour of audio, creating substantial productivity bottlenecks, and extracting meaningful insights from the raw transcript takes further analysis time on top of that. The solution presented here addresses these pain points through automated processing that maintains context while identifying key discussion points, action items, and decisions.

Introducing Whisper and Llama: The AI Power Duo

This system combines two complementary AI technologies: OpenAI's Whisper for speech-to-text conversion and Meta's Llama for intelligent summarization. Whisper represents a breakthrough in automatic transcription technology, supporting nearly 100 languages with remarkable accuracy. Meanwhile, Llama excels at understanding context and generating coherent summaries that capture essential meeting content. Together, they create an end-to-end solution that transforms raw audio into structured, actionable documentation.

Setup and Installation

Before implementing the transcription pipeline, proper environment configuration is essential. Begin by setting up a Python virtual environment to manage dependencies cleanly. The core requirements include PyTorch for model execution, Transformers for accessing pre-trained models, and additional utilities like tqdm for progress tracking. FFmpeg serves as the backbone for media file handling, enabling seamless conversion between audio and video formats to ensure compatibility with Whisper's input requirements. Installation varies by operating system, with Windows users needing to add FFmpeg to their system PATH, while macOS and Linux users typically use package managers.
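As a rough illustration of the setup checks described above, the sketch below verifies that the Python packages can be found and that FFmpeg is on the PATH. It assumes the packages were installed with something like `pip install torch transformers tqdm` inside the virtual environment; the function names are hypothetical helpers, not part of any library.

```python
import importlib.util
import shutil

# Import names for the packages mentioned in this section ("torch" is PyTorch).
REQUIRED_PACKAGES = ("torch", "transformers", "tqdm")


def missing_packages(packages=REQUIRED_PACKAGES):
    """Return the top-level packages that cannot be found in this environment."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


def ffmpeg_available():
    """Return True when an `ffmpeg` executable is found on the system PATH."""
    return shutil.which("ffmpeg") is not None


def environment_report():
    """Collect both checks into one dictionary for printing or logging."""
    return {
        "missing_packages": missing_packages(),
        "ffmpeg_on_path": ffmpeg_available(),
    }


if __name__ == "__main__":
    print(environment_report())
```

An empty `missing_packages` list together with `ffmpeg_on_path: True` suggests the environment is ready for the transcription step.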

Transcription Configuration

The transcription process begins with audio preparation: FFmpeg extracts the audio track from each video recording. Whisper then processes the audio in 30-second windows and can return timestamped segments for easy reference. It comes in several model sizes that trade speed for accuracy, from tiny and base for rapid processing, through small and medium, to large for complex discussions. It supports both transcription in the spoken language and translation into English, which suits multilingual teams.
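The steps above could be sketched as follows. This is a minimal example under stated assumptions, not the guide's exact code: it assumes Whisper is loaded through the Hugging Face Transformers `pipeline` API with the `openai/whisper-small` checkpoint, and that audio is converted to 16 kHz mono WAV first. The helper names are hypothetical.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_command(video_path, audio_path):
    """Build an FFmpeg command that extracts a 16 kHz mono audio track."""
    return [
        "ffmpeg", "-y",          # overwrite the output file if it exists
        "-i", str(video_path),   # input recording (video or audio)
        "-vn",                   # drop the video stream
        "-ac", "1",              # downmix to mono
        "-ar", "16000",          # resample to 16 kHz, the rate Whisper works with
        str(audio_path),
    ]


def extract_audio(video_path, audio_path):
    """Run FFmpeg and return the path of the extracted audio file."""
    subprocess.run(build_ffmpeg_command(video_path, audio_path), check=True)
    return Path(audio_path)


def transcribe(audio_path, model_name="openai/whisper-small", translate=False):
    """Transcribe (or translate into English) and return timestamped chunks."""
    from transformers import pipeline  # imported lazily; model loading is slow

    asr = pipeline(
        "automatic-speech-recognition",
        model=model_name,
        chunk_length_s=30,  # handle long recordings in 30-second windows
    )
    task = "translate" if translate else "transcribe"
    return asr(str(audio_path), return_timestamps=True, generate_kwargs={"task": task})


def format_timestamp(seconds):
    """Render seconds as HH:MM:SS; a missing timestamp becomes a placeholder."""
    if seconds is None:
        return "??:??:??"
    total = int(seconds)
    return f"{total // 3600:02d}:{total % 3600 // 60:02d}:{total % 60:02d}"


def format_chunks(chunks):
    """Turn pipeline chunks into '[start - end] text' lines."""
    lines = []
    for chunk in chunks:
        start, end = chunk["timestamp"]
        stamp = f"[{format_timestamp(start)} - {format_timestamp(end)}]"
        lines.append(f"{stamp} {chunk['text'].strip()}")
    return "\n".join(lines)
```

A typical call sequence would be `extract_audio("meeting.mp4", "meeting.wav")`, then `result = transcribe("meeting.wav")`, then `format_chunks(result["chunks"])`. Swapping `model_name` for a larger or smaller checkpoint changes the speed and accuracy trade-off.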

Summarization Techniques

Following transcription, Llama processes the text to generate concise meeting summaries. The Llama 3.2 model with 3 billion parameters offers a practical balance between comprehension and computational needs, while the 1 billion parameter variant suits limited hardware. Summary quality depends on prompt engineering; customizable prompts like "Generate executive meeting minutes highlighting decisions and action items" guide the output format. Adding controlled randomness through the temperature setting and capping output at around 1,000 tokens yields comprehensive yet concise summaries.
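A minimal sketch of this summarization step is shown below. It assumes the instruction-tuned checkpoint `meta-llama/Llama-3.2-3B-Instruct` loaded through the Hugging Face Transformers `pipeline` API (access to the weights must be requested on the model page first). The temperature of 0.7 is an illustrative assumption, not a value from this guide, and the helper names are hypothetical.

```python
DEFAULT_INSTRUCTION = (
    "Generate executive meeting minutes highlighting decisions and action items"
)

GENERATION_SETTINGS = {
    "max_new_tokens": 1000,  # cap on the length of the generated summary
    "do_sample": True,       # sampling must be on for temperature to apply
    "temperature": 0.7,      # assumed value; lower it for more repeatable output
}


def build_messages(transcript, instruction=DEFAULT_INSTRUCTION):
    """Wrap the instruction and transcript in chat-style messages."""
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": f"Meeting transcript:\n{transcript}"},
    ]


def summarize(transcript, model_name="meta-llama/Llama-3.2-3B-Instruct",
              instruction=DEFAULT_INSTRUCTION):
    """Generate a summary of the transcript with a Llama chat model."""
    from transformers import pipeline  # imported lazily; model loading is slow

    generator = pipeline("text-generation", model=model_name)
    output = generator(build_messages(transcript, instruction), **GENERATION_SETTINGS)
    # For chat-style input, generated_text holds the whole conversation;
    # the assistant's reply is the last message.
    return output[0]["generated_text"][-1]["content"]
```

On limited hardware, passing `model_name="meta-llama/Llama-3.2-1B-Instruct"` selects the 1 billion parameter variant. Changing `instruction` is the simplest way to experiment with different summary formats.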

Cost Analysis and Accessibility

The Whisper-Llama combination offers strong value compared to commercial transcription services. Whisper's code and model weights are released under the MIT license and cost nothing to run locally, while Llama's weights are freely downloadable under Meta's community license, so there are no per-use licensing fees. This makes the pipeline attractive for startups, educational institutions, and organizations with frequent meeting documentation needs. With no per-minute charges or subscription fees, usage is limited only by the hardware you run it on.

Advanced Features and Customization

The system's extensive language support makes it valuable for international organizations: meetings can be held in participants' native languages and documented either as original-language transcripts or as standardized English summaries. Beyond basic transcription, the pipeline offers customization points for different meeting types, such as technical reviews, client discussions, or internal brainstorming.

Implementation Scenarios and Optimization

Corporate teams can transform weekly strategy meetings into searchable archives with highlighted decisions. Educational institutions document lectures, legal professionals create deposition records, and healthcare organizations maintain patient notes. For processing numerous meetings, batch processing maximizes GPU utilization, audio preprocessing improves accuracy, and template libraries streamline prompt management. These strategies help scale the solution across departments and use cases.
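One way the batch processing and template library ideas might look in practice is sketched below. The template wording, the list of file extensions, and the helper names are all illustrative assumptions rather than part of the original pipeline.

```python
from pathlib import Path

# Hypothetical prompt templates keyed by meeting type.
PROMPT_TEMPLATES = {
    "technical_review": "Summarize the technical decisions, open risks, and owners of follow-up work.",
    "client_discussion": "Summarize client requests, commitments made, and agreed next steps.",
    "brainstorm": "List the ideas raised, group them by theme, and note any that were selected.",
}

# Assumed set of recording formats to pick up from a folder.
MEDIA_SUFFIXES = {".mp3", ".mp4", ".m4a", ".wav", ".mkv"}


def select_prompt(meeting_type, fallback="technical_review"):
    """Look up the prompt for a meeting type, falling back to a default."""
    return PROMPT_TEMPLATES.get(meeting_type, PROMPT_TEMPLATES[fallback])


def is_recording(path):
    """Return True when the file extension looks like an audio or video file."""
    return Path(path).suffix.lower() in MEDIA_SUFFIXES


def find_recordings(folder):
    """Return the recordings in a folder, sorted by name for stable ordering."""
    return sorted(p for p in Path(folder).iterdir() if p.is_file() and is_recording(p))


def chunked(items, batch_size):
    """Yield consecutive batches of at most `batch_size` items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]
```

Each batch from `chunked(find_recordings("recordings"), 4)` could then be handed to the transcription step, with `select_prompt` choosing the summary instruction for each meeting type. The right batch size depends on available GPU memory.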

Future Developments and Enhancements

The rapidly evolving AI landscape promises improvements in transcription accuracy and summarization quality. Emerging capabilities include speaker diarization, emotion detection, and automatic action item extraction. Integration with broader automation platforms will enable more sophisticated meeting documentation workflows with minimal human intervention.

Pros and Cons

Advantages

- Automates tedious manual transcription and summarization tasks

- Supports nearly 100 languages for global team collaboration

- No per-minute charges, subscriptions, or licensing fees; the only cost is your own hardware

- Highly customizable through prompt engineering and parameters

- Generates timestamped transcripts for easy reference and navigation

- Adaptable to various hardware configurations and GPU capabilities

- Produces consistent, structured meeting documentation automatically

Disadvantages

- Potential for factual hallucinations common in large language models

- Requires technical setup and coding knowledge for implementation

- Processing speed depends heavily on available GPU resources

- Accuracy may vary with audio quality and speaker accents

- Limited real-time capabilities for live meeting transcription

Conclusion

The combination of OpenAI's Whisper and Meta's Llama creates a powerful, cost-effective solution for automated meeting transcription and summarization. This guide provides the complete technical foundation for implementing this AI-driven approach, from environment setup through optimization techniques. By adopting this system, organizations can significantly reduce manual documentation efforts while improving meeting insight accessibility and actionability across their teams.

Frequently Asked Questions

What AI models are used in this transcription system?

This system uses OpenAI's Whisper for speech-to-text transcription and Meta's Llama for intelligent meeting summarization. Whisper handles audio conversion to text, while Llama processes the transcripts into concise meeting minutes.

Is FFmpeg required for this setup?

Yes, FFmpeg is essential for media file processing. It converts video formats to audio and ensures compatibility with Whisper's input requirements. Installation guides are available for all major operating systems.

How can I improve the summary quality?

Summary quality improves through careful prompt engineering and parameter tuning. Customize prompts for specific meeting types, adjust temperature for variation, and set appropriate token limits. Experiment with different phrasing to optimize results.

Are there options for lower-end hardware?

Yes, both Whisper and Llama offer smaller model variants. Use one of Whisper's smaller models (tiny, base, or small) and Llama's 1 billion parameter version for faster processing on limited hardware, though with some accuracy trade-offs.

What languages does Whisper support?

Whisper supports nearly 100 languages, making it suitable for multilingual teams and global applications. It can transcribe speech in the original language or translate it into English, though accuracy varies by language and audio quality.
