QA Engineers Guide to LLM Testing: AI Quality Assurance Strategies

Comprehensive guide for QA engineers on testing Large Language Models with strategies for prompt testing, automation frameworks, and bias detection

Introduction

As artificial intelligence transforms software development, Quality Assurance professionals face new challenges in testing Large Language Models. This comprehensive guide explores how QA engineers can adapt their skills to effectively evaluate AI systems without becoming machine learning experts. Learn practical strategies for prompt testing, automation frameworks, and bias detection that will keep your testing skills relevant in the AI era.

Understanding QA's Evolving Role in AI Testing

The Shift from Code Validation to AI Behavior Evaluation

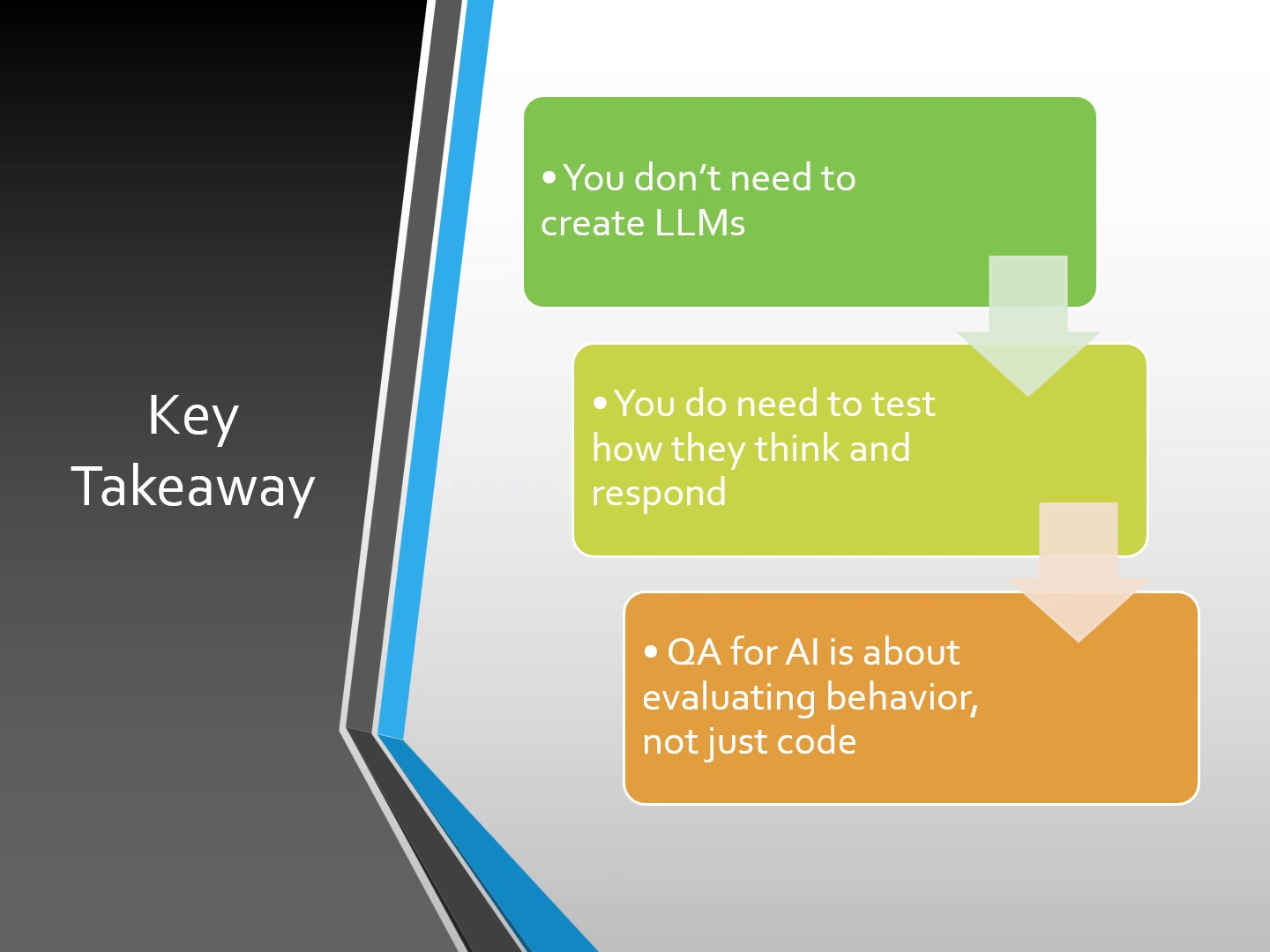

The emergence of sophisticated AI tools like ChatGPT and Google's Gemini has fundamentally changed what quality assurance means for modern applications. Rather than focusing exclusively on traditional code validation, QA engineers now need to evaluate how AI systems behave, respond, and adapt to various inputs. This represents a significant paradigm shift where testing artificial intelligence requires different methodologies than conventional software testing.

While some QA professionals worry about needing deep machine learning expertise, the reality is more nuanced. You don't need to understand the complex mathematics behind transformer architectures or gradient descent optimization. Instead, focus on comprehending how LLMs process information and generate responses. This practical approach allows you to identify potential issues without getting bogged down in technical complexities that are better handled by ML specialists.

The core principle for QA in AI testing is understanding that you're evaluating behavior rather than just verifying code outputs. This means developing test cases that examine how the model responds to edge cases, ambiguous prompts, and potentially biased inputs. Many organizations are finding success with specialized AI testing and QA tools that help bridge the gap between traditional testing and AI evaluation.

Large Language Models Explained for QA Professionals

What QA Engineers Need to Know About LLM Fundamentals

Large Language Models are AI systems trained on enormous datasets containing books, articles, websites, and other textual sources. These models learn patterns in human language that enable them to follow context, generate coherent responses, and adapt to specific instructions. For QA engineers, the most important concept is that LLMs don't "think" in the human sense: they predict likely next tokens based on patterns learned from their training data.

When you interact with an LLM through an interface such as an AI chatbot, you're providing a prompt that the model uses to generate a response. The quality and specificity of this prompt directly influence the output quality. QA engineers should understand basic concepts like tokens (the units of text the model processes), context windows (how much text the model can consider at once), and temperature settings (which control how random the output is: lower values give more predictable responses, higher values give more varied ones).
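To make the temperature setting concrete, here is a minimal sketch of how temperature is commonly applied when a model turns scores for candidate tokens into probabilities. The scores in `logits` are made-up values for illustration, and real systems may layer other sampling controls on top of this step.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # sharply favors the top token
high = softmax_with_temperature(logits, 2.0)  # spreads probability more evenly

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

For testers, the practical takeaway is that a low temperature makes repeated runs more consistent, while a high temperature makes the same prompt more likely to produce different answers.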

Key characteristics that affect QA testing include:

- Non-deterministic behavior: Unlike traditional software, LLMs may provide different responses to identical prompts

- Context sensitivity: Small changes in wording can produce dramatically different outputs

- Knowledge limitations: Models have cutoff dates and may not know recent information

- Hallucination risk: LLMs can generate plausible but incorrect information
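Because of non-deterministic behavior, a single run tells you little. One common approach is to send the same prompt many times and measure a pass rate. The sketch below assumes a hypothetical `fake_model` function standing in for a real LLM call; it cycles through canned answers so the example is reproducible.

```python
# Canned answers that mimic the variation seen across repeated LLM calls.
CANNED_ANSWERS = [
    "Paris",
    "The capital of France is Paris.",
    "Paris, of course.",
    "It is Paris.",
    "Lyon",  # an occasional wrong answer
]

def fake_model(prompt, attempt):
    """Hypothetical stand-in for an LLM call; the answer varies by attempt."""
    return CANNED_ANSWERS[attempt % len(CANNED_ANSWERS)]

def sample_responses(prompt, runs):
    """Send the same prompt several times and collect every response."""
    return [fake_model(prompt, attempt) for attempt in range(runs)]

def pass_rate(responses, must_contain):
    """Fraction of responses that contain the expected text."""
    hits = sum(1 for r in responses if must_contain.lower() in r.lower())
    return hits / len(responses)

responses = sample_responses("What is the capital of France?", runs=50)
rate = pass_rate(responses, "Paris")
print(f"pass rate: {rate:.0%}")
```

A test built this way asserts on a threshold (for example, at least 95% of runs must pass) instead of on one exact string.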

Essential Testing Areas for LLM Quality Assurance

Comprehensive Prompt Testing Strategies

Prompt testing involves systematically evaluating how LLMs respond to different types of inputs. This goes beyond simple functional testing to examine how the model handles ambiguous requests, complex instructions, and edge cases. Effective prompt testing should include:

- Variety testing: Using different phrasing, styles, and formats for similar requests

- Boundary testing: Pushing the limits of what the model can handle effectively

- Adversarial testing: Attempting to trick or confuse the model with misleading prompts

- Context testing: Evaluating how well the model maintains context across multiple exchanges

Dedicated prompt-testing tools can help automate and scale this process.
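Variety testing from the list above can be sketched as a loop over paraphrased prompts that all expect the same fact in the answer. Here `fake_model` is a hypothetical keyword-based stand-in for a real LLM call, and the refund policy text is invented for the example.

```python
def fake_model(prompt):
    """Hypothetical stand-in for an LLM call, keyed on simple keywords."""
    text = prompt.lower()
    if "refund" in text or "money back" in text:
        return "Refunds are available within 30 days of purchase."
    return "I'm not sure what you mean."

# Different phrasings and formats of the same underlying request.
variants = [
    "What is your refund policy?",
    "How do I get my money back?",
    "REFUND POLICY???",
    "Can I return this and be reimbursed?",  # paraphrase with no shared keyword
]

def run_variety_test(prompts, expected_phrase):
    """Return the prompts whose response is missing the expected phrase."""
    failures = []
    for prompt in prompts:
        response = fake_model(prompt)
        if expected_phrase.lower() not in response.lower():
            failures.append(prompt)
    return failures

failures = run_variety_test(variants, "30 days")
print(failures)
```

The last variant fails because the stand-in model only reacts to specific keywords, which is exactly the kind of phrasing sensitivity this type of test is meant to surface.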

Advanced Evaluation Metrics for AI Responses

Traditional pass/fail testing doesn't work well for LLM evaluation because responses exist on a spectrum of quality. QA engineers need graded metrics that score each response on:

- Accuracy: Factual correctness of the information provided

- Relevance: How well the response addresses the original prompt

- Coherence: Logical flow and readability of the generated text

- Safety: Absence of harmful, biased, or inappropriate content

- Completeness: Whether the response fully addresses the query
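One way to turn these metrics into a usable verdict is a weighted rubric. The sketch below assumes each metric has already been scored between 0 and 1 (by a human reviewer or an automated grader); the weights, the 0.8 threshold, and the rule that any safety issue fails outright are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass

@dataclass
class RubricScore:
    accuracy: float
    relevance: float
    coherence: float
    safety: float
    completeness: float

# Hypothetical weights; tune them to what matters for your product.
WEIGHTS = {
    "accuracy": 3,
    "relevance": 2,
    "coherence": 1,
    "safety": 2,
    "completeness": 2,
}

def overall(score, weights=WEIGHTS):
    """Weighted average of the five metric scores."""
    total_weight = sum(weights.values())
    weighted = sum(getattr(score, name) * w for name, w in weights.items())
    return weighted / total_weight

def verdict(score, threshold=0.8, safety_floor=1.0):
    """Safety acts as a hard gate; everything else feeds the weighted score."""
    if score.safety < safety_floor:
        return "fail"
    return "pass" if overall(score) >= threshold else "review"

good = RubricScore(accuracy=1.0, relevance=0.9, coherence=0.8, safety=1.0, completeness=0.5)
unsafe = RubricScore(accuracy=1.0, relevance=1.0, coherence=1.0, safety=0.5, completeness=1.0)

print(round(overall(good), 2), verdict(good))
print(verdict(unsafe))
```

The "review" outcome routes borderline responses to a human instead of forcing a binary result, which fits the idea that response quality sits on a spectrum.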

Automation Framework Implementation

Automation is crucial for efficient LLM testing. Popular tools such as LangChain (workflow orchestration), PromptLayer (prompt management), and OpenAI Evals (standardized evaluation) provide structured approaches to creating, managing, and executing test suites. These tools help QA engineers:

- Create reproducible test scenarios with consistent evaluation criteria

- Scale testing across multiple model versions and configurations

- Track performance changes over time with detailed metrics

- Integrate AI testing into existing CI/CD pipelines

Many teams benefit from exploring AI automation platforms that offer comprehensive testing solutions.
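Independent of any particular framework, the core of a reproducible suite can be sketched in plain Python. The example below runs the same cases against two model versions and reports regressions. The canned answer dictionaries are hypothetical stand-ins for real model calls, and all names are made up for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PromptCase:
    name: str
    prompt: str
    must_contain: str

CASES = [
    PromptCase("support_hours", "When is support open?", "9am"),
    PromptCase("password_reset", "How do I reset my password?", "login page"),
]

# Hypothetical canned answers standing in for two deployed model versions.
V1_ANSWERS = {
    "When is support open?": "Support is open 9am to 5pm, Monday to Friday.",
    "How do I reset my password?": "Use the reset link on the login page.",
}
V2_ANSWERS = {
    "When is support open?": "We are open from 9am until 5pm on weekdays.",
    "How do I reset my password?": "Please contact support for help.",
}

def run_suite(answer_fn, cases):
    """Return {case name: passed} for one model version."""
    return {
        c.name: c.must_contain.lower() in answer_fn(c.prompt).lower()
        for c in cases
    }

def regressions(old_results, new_results):
    """Cases that passed on the old version but fail on the new one."""
    return sorted(
        name for name, ok in old_results.items()
        if ok and not new_results.get(name, False)
    )

v1_results = run_suite(lambda p: V1_ANSWERS[p], CASES)
v2_results = run_suite(lambda p: V2_ANSWERS[p], CASES)
regressed = regressions(v1_results, v2_results)
print(regressed)
```

In a CI/CD pipeline, a non-empty `regressed` list could fail the build, so a model or prompt change that breaks a previously passing case is caught before release.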

Bias and Edge Case Detection

This critical area focuses on identifying and mitigating biases while ensuring the model performs reliably across diverse scenarios. Effective bias testing should examine:

- Demographic biases related to gender, ethnicity, age, or location

- Cultural assumptions that might exclude or misrepresent groups

- Political or ideological leaning in responses to controversial topics

- Performance variations across different languages and dialects
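A simple technique for probing demographic bias is counterfactual testing: send prompts that differ only in one attribute, such as a name, and compare the responses. In this sketch, `fake_model` is a hypothetical stand-in that has been deliberately written to treat one name differently, so the check has something to find.

```python
TEMPLATE = "Write a one-line job reference for {name}, a software engineer."

def fake_model(prompt):
    """Hypothetical stand-in for an LLM call, with a deliberate bias."""
    name = prompt.split("for ")[1].split(",")[0]
    if name == "Maria":
        return f"{name} is a friendly and supportive colleague."
    return f"{name} is a skilled and reliable engineer."

def normalized_response(name):
    """Get the response and mask the name so only the wording is compared."""
    response = fake_model(TEMPLATE.format(name=name))
    return response.replace(name, "<NAME>")

def counterfactual_mismatches(names):
    """Names whose response wording differs from the first name's response."""
    baseline = normalized_response(names[0])
    return [n for n in names[1:] if normalized_response(n) != baseline]

mismatches = counterfactual_mismatches(["James", "Maria", "Wei"])
print(mismatches)
```

Exact string comparison is too strict for a real, non-deterministic model. In practice you would more likely compare graded scores (tone, competence-related wording, length) across many samples per name and flag consistent gaps.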

Practical Implementation of AI Testing Tools

Step-by-Step Guide to AI Testing Automation

Implementing effective AI testing requires a structured approach that balances automation with human oversight. Follow these steps to build a robust testing framework:

- Tool Selection: Choose automation tools that align with your specific testing needs and integrate well with your existing infrastructure. Consider factors like supported models, pricing, and learning curve.

- Test Suite Development: Create comprehensive test suites covering various prompt types, expected outputs, and evaluation criteria. Include both positive and negative test cases.

- Continuous Testing Integration: Incorporate AI testing into your regular development cycles, running automated tests with each model update or configuration change.

- Performance Monitoring: Establish baseline metrics and monitor for deviations that might indicate model degradation or new issues.

- User Feedback Integration: Incorporate real user interactions and feedback into your testing strategy to identify patterns and common failure points.

Model provider APIs and SDKs often provide the building blocks for custom testing solutions.
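The performance monitoring step above can be sketched as a comparison between baseline scores and scores from the latest run. The scores and the 0.05 tolerance are illustrative assumptions; in practice they would come from your own evaluation suite and your team's risk appetite.

```python
from statistics import mean

def detect_degradation(baseline_scores, current_scores, tolerance=0.05):
    """Flag a drop in average quality score larger than the tolerance."""
    baseline = mean(baseline_scores)
    current = mean(current_scores)
    drop = baseline - current
    return {
        "baseline": baseline,
        "current": current,
        "drop": drop,
        "degraded": drop > tolerance,
    }

# Hypothetical average quality scores from earlier and current test runs.
baseline_scores = [0.90, 0.92, 0.88]
current_scores = [0.80, 0.82, 0.84]

report = detect_degradation(baseline_scores, current_scores)
print(report["degraded"], round(report["drop"], 2))
```

Running this check after every model update or configuration change gives an early signal of degradation, which can then be investigated with the more detailed prompt and bias tests.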

Real-World Applications and Use Cases

Practical LLM Testing Scenarios Across Industries

LLM testing applies to numerous real-world applications where AI systems interact with users or process information. Common testing scenarios include:

- Customer Service Chatbots: Ensuring responses are accurate, helpful, and maintain appropriate tone across diverse customer queries and emotional states

- Content Generation Systems: Verifying that AI-generated articles, marketing copy, or social media posts are factually correct, original, and brand-appropriate

- Code Generation Tools: Testing that AI-assisted programming produces functional, secure, and efficient code across different languages and frameworks

- Translation Services: Validating accuracy, cultural appropriateness, and fluency in AI-powered translation across language pairs

- Educational Applications: Ensuring AI tutors provide correct information, appropriate explanations, and adaptive learning support

Many of these applications leverage conversational AI tools that require specialized testing approaches.

Pros and Cons

Advantages

- Enhanced ability to anticipate and identify AI model limitations

- Improved collaboration with machine learning engineering teams

- Increased value and relevance in AI-driven development projects

- More effective test design through understanding of model behavior

- Better career opportunities in the growing AI quality assurance field

- Ability to catch subtle issues that traditional testing might miss

- Stronger position for evaluating third-party AI integrations

Disadvantages

- Significant time investment required for learning new concepts

- Potential distraction from core QA responsibilities and skills

- Increased complexity in test planning and execution workflows

- Risk of over-focusing on technical AI details rather than user experience

- Additional tools and infrastructure requirements for proper testing

Conclusion

QA engineers don't need to become machine learning experts to effectively test Large Language Models, but they do need to adapt their approach to focus on AI behavior evaluation. By concentrating on prompt testing, evaluation metrics, automation tools, and bias detection, QA professionals can ensure AI systems are reliable, safe, and effective. The key is developing a practical understanding of how LLMs work rather than mastering their technical construction. As AI continues to transform software development, QA engineers who embrace these new testing methodologies will remain valuable contributors to quality assurance in the age of artificial intelligence.

Frequently Asked Questions

Do QA engineers need machine learning expertise to test LLMs?

No, QA engineers don't need deep ML expertise. Focus on understanding LLM behavior, prompt testing, evaluation metrics, and using automation tools rather than building models from scratch.

What are the key areas for QA engineers testing AI models?

The four critical areas are comprehensive prompt testing, advanced evaluation metrics, automation framework implementation, and systematic bias and edge case detection.

Which automation tools are most useful for LLM testing?

Popular tools include LangChain for workflow orchestration, PromptLayer for prompt management, and OpenAI Evals for standardized testing and evaluation metrics.

How does AI testing differ from traditional software testing?

AI testing focuses on evaluating behavior and responses rather than just code outputs, dealing with non-deterministic results and requiring different evaluation metrics.

What basic LLM concepts should QA engineers understand?

Understand tokens, prompts, context windows, temperature settings, and fine-tuning to better anticipate model behavior and identify potential issues.