Annotation

- Introduction

- Understanding Multilingual Large Language Models

- What Are Multilingual Large Language Models?

- Key Contributions of MLLM Research

- The Significance of Multilingual Language Models

- Recent Progress and Global Challenges

- Parameter Alignment Strategies in MLLMs

- Data Resources for MLLM Training

- Parameter-Tuning Alignment Methods

- Future Research Directions and Challenges

- Pros and Cons

- Conclusion

- Frequently Asked Questions

Multilingual Large Language Models: Complete Guide to Resources & Future Trends

Multilingual large language models (MLLMs) enable cross-lingual AI communication with comprehensive resources, taxonomy, and future trends for global

Introduction

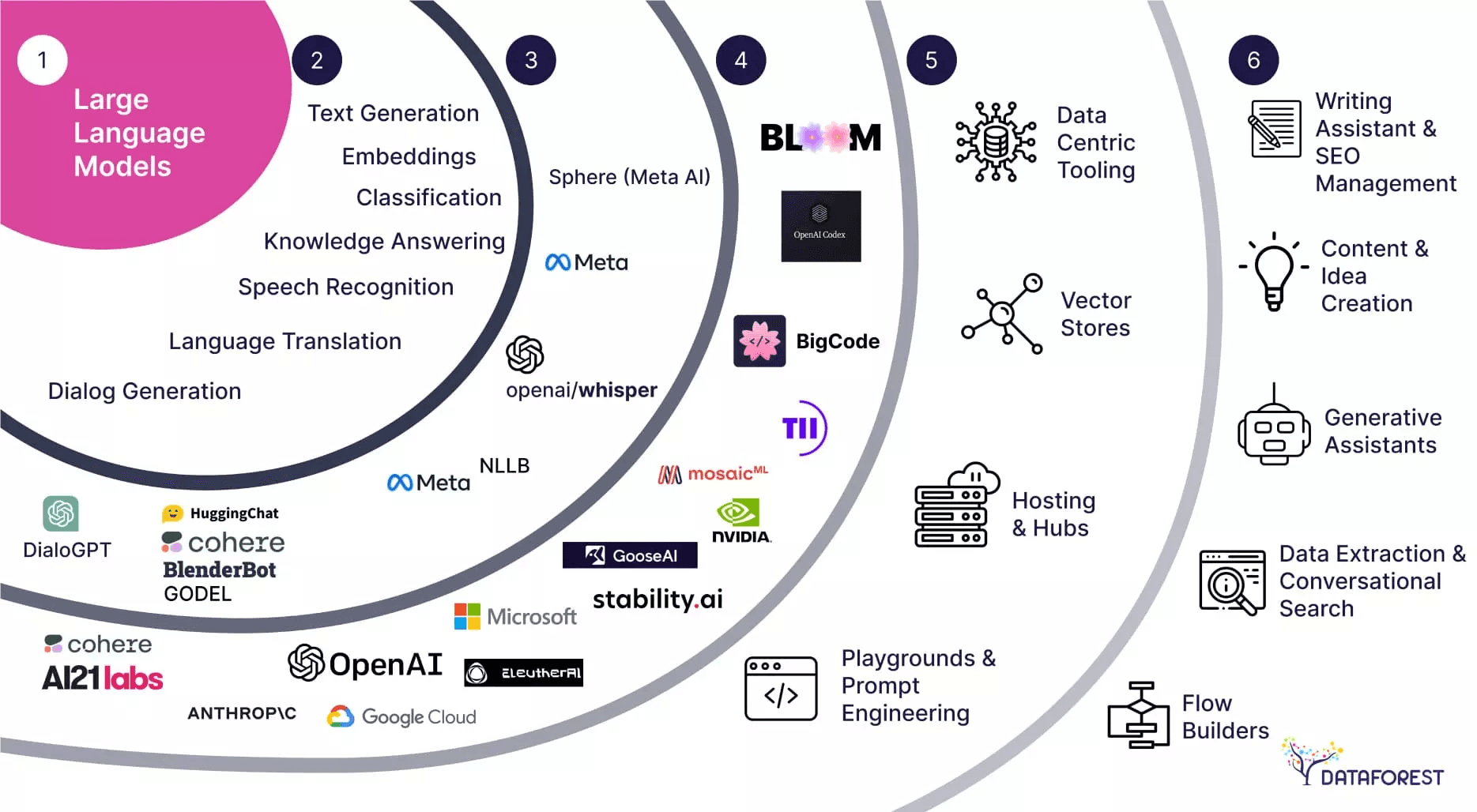

Multilingual Large Language Models (MLLMs) represent a transformative advancement in artificial intelligence, enabling seamless communication across diverse linguistic landscapes. These sophisticated AI systems can process, understand, and generate text in multiple languages simultaneously, breaking down traditional language barriers that have long hindered global collaboration and information exchange. As organizations increasingly operate across international boundaries, the demand for robust multilingual AI capabilities continues to grow exponentially.

Understanding Multilingual Large Language Models

What Are Multilingual Large Language Models?

Multilingual Large Language Models (MLLMs) constitute a significant evolutionary step beyond traditional monolingual AI systems. Unlike their single-language counterparts, MLLMs are specifically engineered to handle multiple languages within a unified architecture, enabling cross-lingual understanding and generation without requiring separate models for each language. This integrated approach allows for more efficient resource utilization and facilitates true multilingual capabilities that mirror human linguistic flexibility.

The core innovation of MLLMs lies in their ability to learn shared representations across languages, capturing linguistic patterns and semantic relationships that transcend individual language boundaries. This cross-lingual understanding enables applications ranging from real-time translation services to multinational customer support systems powered by advanced AI chatbots and conversational AI tools.

Key Contributions of MLLM Research

The groundbreaking survey paper "Multilingual Large Language Model: A Survey of Resources, Taxonomy and Frontiers" by Libo Qin and colleagues represents a milestone in multilingual AI research. This comprehensive work provides the first systematic examination of MLLM development, offering researchers and practitioners a unified framework for understanding this rapidly evolving field. The paper's significance extends beyond academic circles, providing practical insights for developers working with AI APIs and SDKs to implement multilingual capabilities.

Among its most valuable contributions is the collection of extensive open-source resources, including curated datasets, research papers, and performance benchmarks. This resource compilation addresses a critical gap in the field, providing developers with the tools needed to accelerate MLLM implementation and evaluation across diverse linguistic contexts.

The Significance of Multilingual Language Models

Recent Progress and Global Challenges

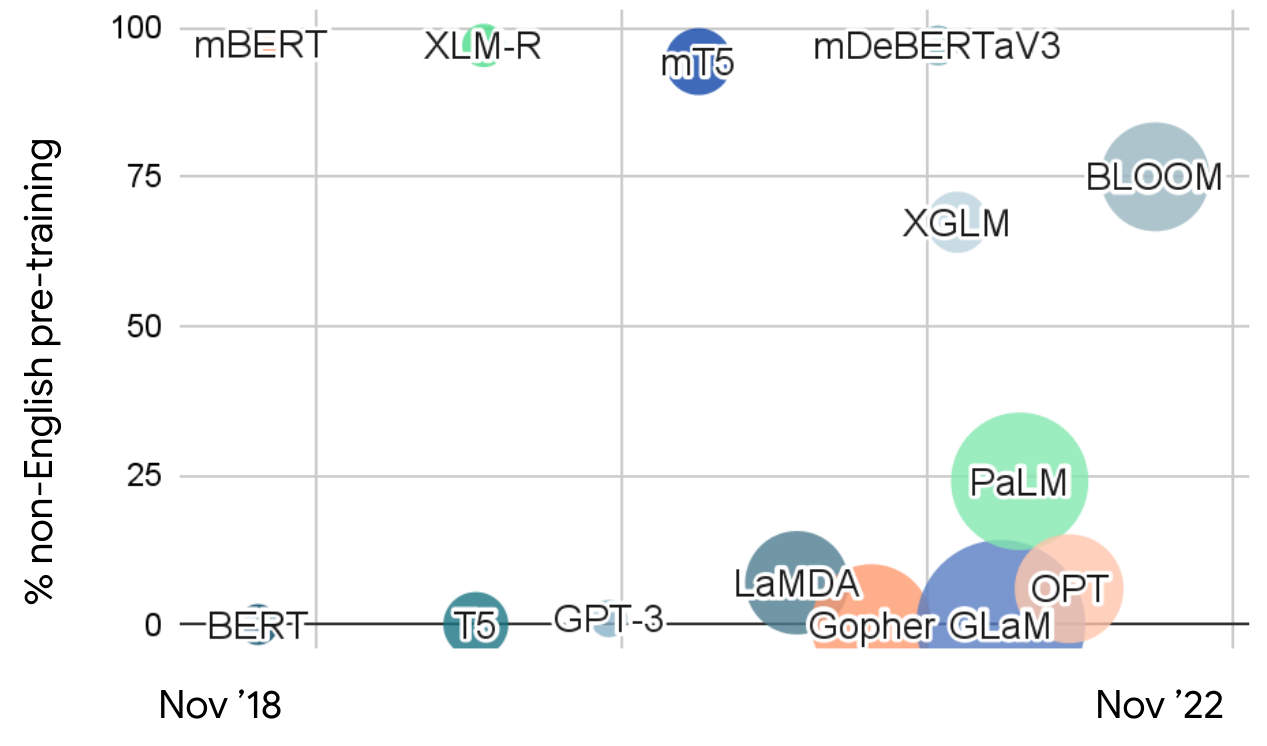

Recent advancements in large language models have demonstrated remarkable capabilities in natural language understanding and generation. However, the predominant focus on English-language training has created significant limitations for global deployment. With over 7,000 languages spoken worldwide and increasing globalization demands, the need for truly multilingual AI systems has never been more urgent. This gap is particularly pronounced in low-resource language scenarios, where limited training data and computational resources present substantial challenges.

The English-centric bias in most current LLMs creates disparities in AI accessibility and performance across different linguistic communities. MLLMs address this imbalance by providing more equitable language technology access, enabling organizations to deploy consistent AI capabilities across global markets through AI automation platforms and multilingual service systems.

Parameter Alignment Strategies in MLLMs

Parameter alignment represents a fundamental technical challenge in MLLM development, determining how effectively models can transfer knowledge and capabilities across languages. The two primary alignment methodologies – parameter-tuning and parameter-frozen approaches – offer distinct advantages and trade-offs for different deployment scenarios.

Parameter-tuning alignment involves actively adjusting model parameters during training to optimize cross-lingual performance. This method typically yields superior results but requires substantial computational resources and careful tuning to avoid overfitting or language interference. In contrast, parameter-frozen alignment leverages the model's existing capabilities through strategic prompting techniques, offering greater efficiency and faster deployment for organizations using AI agents and assistants.

Data Resources for MLLM Training

The effectiveness of multilingual models depends critically on the quality and diversity of training data across different linguistic stages. Pre-training data from models like GPT-3, mT5, and ERNIE 3.0 provides the foundational language understanding, while fine-tuning data from resources such as Flan-PaLM and BLOOMZ enables task-specific optimization. Reinforcement learning from human feedback (RLHF) data further refines model behavior based on human preferences, creating more natural and contextually appropriate multilingual interactions.

Each data category serves distinct purposes in the model development pipeline, with multilingual pre-training establishing broad linguistic capabilities and supervised fine-tuning specializing models for specific applications. The careful curation and balancing of these datasets across languages is essential for achieving robust cross-lingual performance, particularly for developers working with AI model hosting services.

Parameter-Tuning Alignment Methods

Parameter-tuning alignment employs a systematic, multi-stage approach to optimize MLLM performance across languages. The process begins with pre-training alignment, where models learn fundamental linguistic patterns from diverse multilingual datasets. This foundational stage establishes the model's basic cross-lingual capabilities and shared representations.

Supervised fine-tuning alignment then refines these capabilities for specific tasks and applications, incorporating instruction-formatted data to improve performance on targeted use cases. Reinforcement learning from human feedback alignment further enhances model behavior by incorporating human preferences and safety considerations. Finally, downstream fine-tuning alignment adapts models for specific deployment scenarios, with parameter-efficient techniques like LoRA (Low-Rank Adaptation) optimizing resource utilization.

Future Research Directions and Challenges

The continued evolution of MLLMs faces several critical research challenges that require focused attention. Multilingual hallucination detection and mitigation represents a particularly urgent area, as models must maintain accuracy and reliability across diverse linguistic contexts. Knowledge editing presents another significant challenge, requiring methods to continuously update and correct information across all supported languages while maintaining consistency.

Safety and fairness considerations extend beyond technical performance to encompass ethical deployment across global contexts. Establishing comprehensive safety benchmarks, developing effective unsafe content filtering mechanisms, and ensuring equitable performance across low-resource languages are essential for responsible MLLM development. These efforts align with broader initiatives in language learning technology and multilingual news applications that serve diverse global audiences.

Pros and Cons

Advantages

- Enables seamless communication across multiple languages simultaneously

- Facilitates cross-cultural understanding and global collaboration

- Supports development of international applications and services

- Improves accuracy in multilingual natural language processing tasks

- Reduces need for multiple single-language AI systems

- Enhances accessibility for non-English speaking populations

- Supports emerging markets and low-resource language communities

Disadvantages

- Requires extensive multilingual datasets and computational resources

- Challenging to optimize performance across all supported languages

- Potential for biases and inaccuracies in low-resource languages

- Complex safety and ethical considerations across cultures

- Higher development and maintenance costs than monolingual systems

Conclusion

Multilingual Large Language Models represent a pivotal advancement in artificial intelligence, offering unprecedented capabilities for cross-lingual communication and global collaboration. As research continues to address current limitations in hallucination mitigation, knowledge editing, and safety assurance, MLLMs will play an increasingly vital role in bridging linguistic divides and enabling more inclusive AI systems. The comprehensive taxonomy and resource compilation provided by recent surveys establishes a solid foundation for future innovation, guiding researchers and practitioners toward more effective and equitable multilingual AI solutions that serve diverse global communities.

Frequently Asked Questions

What are the main challenges in developing multilingual large language models?

Key challenges include handling over 7,000 languages with varying data availability, meeting globalization demands for cross-lingual communication, and addressing low-resource scenarios where data and computational resources are limited, particularly for underrepresented languages.

What are the primary advantages of using multilingual large language models?

MLLMs enable seamless communication across multiple languages, facilitate cross-cultural understanding, support global application development, improve multilingual NLP task accuracy, and reduce the need for maintaining separate single-language AI systems.

What future research areas are important for MLLM development?

Critical research areas include addressing multilingual hallucination issues, improving knowledge editing capabilities across languages, establishing comprehensive safety benchmarks, ensuring fairness in low-resource languages, and developing more efficient alignment strategies.

What are the key parameter alignment strategies in MLLMs?

MLLMs primarily use parameter-tuning and parameter-frozen alignment strategies. Parameter-tuning involves adjusting model parameters for cross-lingual optimization, while parameter-frozen leverages existing capabilities through prompting for greater efficiency.

How do MLLMs impact global AI accessibility and fairness?

MLLMs enhance global AI accessibility by providing equitable language technology across diverse communities, reducing English-centric biases, and supporting low-resource languages, thereby promoting fairness in AI deployment worldwide.

Relevant AI & Tech Trends articles

Stay up-to-date with the latest insights, tools, and innovations shaping the future of AI and technology.

Grok AI: Free Unlimited Video Generation from Text & Images | 2024 Guide

Grok AI offers free unlimited video generation from text and images, making professional video creation accessible to everyone without editing skills.

Grok 4 Fast Janitor AI Setup: Complete Unfiltered Roleplay Guide

Step-by-step guide to configuring Grok 4 Fast on Janitor AI for unrestricted roleplay, including API setup, privacy settings, and optimization tips

Top 3 Free AI Coding Extensions for VS Code 2025 - Boost Productivity

Discover the best free AI coding agent extensions for Visual Studio Code in 2025, including Gemini Code Assist, Tabnine, and Cline, to enhance your