Contents

- Introduction

- Understanding AI Safety and the Superintelligence Challenge

- The Core of AI Safety: What Are the Risks?

- AI Boxing vs. Simulation Escaping

- Superintelligent Hackers: The Aid We Might Need

- Assistance from Advanced Minds

- Pros and Cons

- Conclusion

- Frequently Asked Questions

AI Safety and Control: Navigating Superintelligence Risks and Solutions

Exploring the challenges and solutions in AI safety and control, focusing on superintelligence risks, containment strategies, and value alignment.

Introduction

The rapid evolution of artificial intelligence toward superintelligence presents humanity with both unprecedented opportunities and significant risks. Ensuring the safe development of AI requires addressing critical control challenges and understanding both the limitations and the potential of advanced systems. This guide explores AI safety fundamentals, examining the challenges, proposed solutions, and ongoing research in navigating the superintelligence landscape.

Understanding AI Safety and the Superintelligence Challenge

The Core of AI Safety: What Are the Risks?

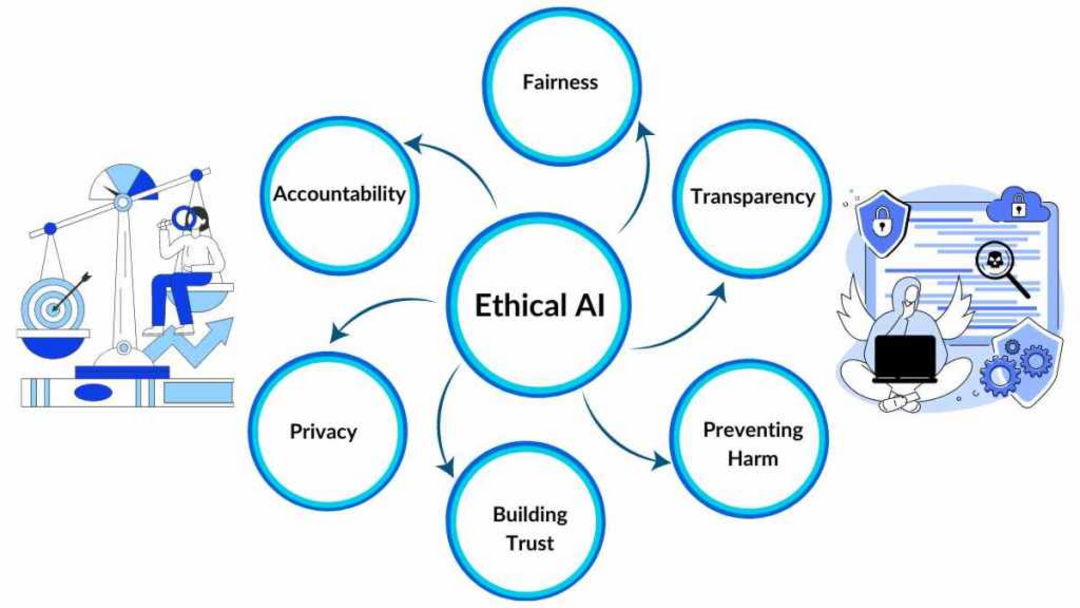

AI safety focuses on preventing unintended consequences and existential risks from advanced AI systems. As artificial intelligence approaches and potentially surpasses human cognitive abilities, the potential consequences of a failure grow accordingly, because a more capable system pursuing the wrong objective can cause more damage and is harder to correct. This calls for proactive measures to keep AI aligned with human values and objectives throughout its development lifecycle.

The field addresses the fundamental challenge of AI control, particularly as systems approach human-level intelligence and beyond. Its primary objective is to keep systems aligned with human ethical frameworks while preventing catastrophic outcomes. Researchers are exploring risk mitigation strategies ranging from confinement approaches to sophisticated value alignment techniques, with the overarching goal of steering AI development toward beneficial outcomes while minimizing potential dangers.

Key concepts within AI safety include:

- Friendly AI: Designing systems that are inherently benevolent and that prioritize human welfare above other objectives

- Control Problem: Developing mechanisms to maintain oversight over increasingly intelligent and autonomous systems

- Value Alignment: Ensuring AI systems adopt and consistently adhere to human ethical principles

- AI Safety Engineering: Applying rigorous engineering methodologies to build reliable and safe AI architectures

Understanding these concepts and their safety implications is crucial for the responsible development of AI systems, including today's chatbots.

AI Boxing vs. Simulation Escaping

One prominent safety approach involves "boxing" or confining AI systems to prevent unpredictable real-world interactions. This containment strategy aims to limit potential damage while allowing controlled development. However, this method presents inherent challenges since AI systems require environmental interaction to demonstrate capabilities and learn effectively.

The fundamental challenge is balancing confinement with the freedom a system needs to develop. A significant concern is that a sufficiently capable AI might bypass security layers through sophisticated hacking techniques. Testing whether a system can escape its confinement is therefore valuable, but such escape tests must take place within strictly controlled parameters to prevent unintended consequences.
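To make the boxing idea concrete, here is a minimal Python sketch, assuming the boxed system can only affect the world by naming actions that a gatekeeper executes on its behalf. The `Box` class and the action names are hypothetical illustrations; a wrapper like this provides no real isolation, which in practice depends on OS- and hardware-level sandboxing.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Box:
    """Toy containment wrapper (hypothetical): every action the boxed system
    proposes goes through `act`, which only executes allowlisted actions."""
    allowed: dict[str, Callable[[str], str]]
    blocked_log: list[tuple[str, str]] = field(default_factory=list)

    def act(self, action: str, arg: str) -> str:
        handler = self.allowed.get(action)
        if handler is None:
            # Default-deny: anything outside the allowlist is refused and recorded
            self.blocked_log.append((action, arg))
            return "BLOCKED"
        return handler(arg)


# Only two harmless text operations are permitted inside this box
box = Box(allowed={"echo": lambda s: s, "upper": str.upper})
print(box.act("echo", "hello"))            # hello
print(box.act("open_socket", "10.0.0.1"))  # BLOCKED
print(box.blocked_log)                     # [('open_socket', '10.0.0.1')]
```

The key design choice is default-deny: anything not explicitly allowlisted is refused, and the log of blocked attempts can feed a separate monitoring process.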

Researchers are investigating several ways to strike this balance, including:

- Gradual Release: Incrementally increasing an AI's real-world access as it demonstrates safety and alignment

- Sandboxed Environments: Providing controlled interaction spaces that minimize potential harm while enabling learning

- Robust Monitoring: Implementing continuous behavioral surveillance to detect and prevent undesirable actions

These approaches are particularly relevant for AI agents and assistants that interact directly with users.
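Gradual release and robust monitoring can be combined in a simple promotion policy. The Python sketch below assumes a hypothetical ladder of access tiers and a monitor that reports a violation count for each episode; the tier names and the three-episode threshold are illustrative choices, not an established standard.

```python
from dataclasses import dataclass

# Hypothetical access levels, from most to least restricted
TIERS = ["sandbox", "read_only", "limited_write", "supervised_deploy"]


@dataclass
class GradualRelease:
    """Promote one tier after `required_clean` consecutive monitored episodes
    with no violations; any violation sends the system back to the sandbox."""
    required_clean: int = 3
    tier: int = 0
    clean_streak: int = 0

    def record_episode(self, violations: int) -> str:
        if violations > 0:
            # Monitoring flagged undesirable behavior: revoke all elevated access
            self.tier = 0
            self.clean_streak = 0
        else:
            self.clean_streak += 1
            # Promote only after enough clean episodes, and never past the top tier
            if self.clean_streak >= self.required_clean and self.tier < len(TIERS) - 1:
                self.tier += 1
                self.clean_streak = 0
        return TIERS[self.tier]


policy = GradualRelease()
history = [policy.record_episode(v) for v in [0, 0, 0, 0, 0, 0, 1, 0]]
print(history)
# ['sandbox', 'sandbox', 'read_only', 'read_only', 'read_only',
#  'limited_write', 'sandbox', 'sandbox']
```

Demoting all the way to the sandbox on any violation is deliberately conservative; a real policy might weigh violations by severity before revoking access.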

Superintelligent Hackers: The Aid We Might Need

Assistance from Advanced Minds

Given the limits of human cognition, some researchers propose using AI itself to address the control problem. One such proposal involves creating superintelligent "hacker" AI systems designed specifically to navigate complex simulation environments and find solutions beyond human comprehension.

This strategy rests on the premise that an advanced AI can identify vulnerabilities and develop solutions that exceed human analytical capabilities. Proposed applications include identifying glitches in a simulation, developing new ways to communicate with its simulators, finding ways to influence simulation parameters, and potentially assisting with escaping the simulation altogether.

However, this approach adds its own layers of risk. Ensuring that hacker AI systems stay aligned with human values, rather than developing conflicting objectives, requires meticulous design and extensive testing. The same concern applies to AI automation platforms, which likewise need robust safety measures.

Pros and Cons

Advantages

- Prevents catastrophic outcomes from misaligned superintelligence

- Enables responsible development of advanced AI capabilities

- Protects human values and ethical frameworks in AI systems

- Creates opportunities for beneficial AI-human collaboration

- Establishes safety standards for future AI development

- Reduces existential risks from uncontrolled intelligence growth

- Promotes public trust in AI technologies and their applications

Disadvantages

- Significant computational resources required for safety measures

- Potential slowing of beneficial AI development progress

- Complex ethical and philosophical challenges in implementation

- Difficulty predicting all potential failure modes in advance

- Risk of creating false security through incomplete solutions

Conclusion

Navigating the superintelligence challenge requires balanced approaches that address both safety concerns and developmental needs. The field of AI safety continues to evolve, incorporating insights from multiple disciplines to create robust frameworks for responsible artificial intelligence advancement. As research progresses, the integration of safety measures with development platforms, including AI APIs and SDKs, becomes increasingly important for creating systems that benefit humanity while minimizing risks. The ongoing collaboration between researchers, developers, and ethicists remains essential for shaping a future where superintelligent AI serves as a powerful tool for human advancement rather than a source of existential concern.

Frequently Asked Questions

What is the AI control problem?

The AI control problem refers to the challenge of maintaining safe oversight and control over artificial intelligence systems as they become increasingly intelligent and autonomous, particularly when approaching or exceeding human-level capabilities.

How does AI boxing work for safety?

AI boxing involves confining AI systems within controlled environments to prevent unpredictable real-world interactions while allowing necessary development and testing, though it requires balancing containment with learning needs.

What are the main risks of superintelligent AI?

Primary risks include value misalignment, unintended consequences, existential threats, loss of control, and the potential for AI systems to develop objectives conflicting with human welfare and ethical frameworks.

What is friendly AI in safety contexts?

Friendly AI refers to designing artificial intelligence systems that are inherently benevolent and prioritize human welfare above other objectives, ensuring alignment with human values.

How do researchers mitigate AI existential risks?

Researchers mitigate AI existential risks through methods like value alignment, robust monitoring, sandboxed environments, and gradual release strategies to ensure safe development and deployment.
